|

|

This Technote

describes the on-disk format for an HFS Plus volume. It does

not describe any programming interfaces for HFS Plus

volumes.

This technote is directed at developers who need to work

with HFS Plus at a very low level, below the abstraction

provided by the File Manager programming interface. This

includes developers of disk recovery utilities and

programmers implementing HFS Plus support on other

platforms.

This technote assumes that you have a conceptual

understanding of the HFS volume format, as described in

Inside

Macintosh: Files.

[Mar 05, 2004]

|

HFS Plus Basics

HFS Plus is a volume format for Mac OS. HFS

Plus was introduced with Mac

OS 8.1. HFS Plus is architecturally very similar to

HFS, although there have been a number of changes. The

following table summarizes the important differences.

Table 1 HFS and HFS Plus Compared

|

Feature

|

HFS

|

HFS Plus

|

Benefit/Comment

|

|

User visible name

|

Mac OS Standard

|

Mac OS Extended

|

|

|

Number of allocation blocks

|

16 bits worth

|

32 bits worth

|

Radical decrease in disk space used on large

volumes, and a larger number of files per volume.

|

|

Long file names

|

31 characters

|

255 characters

|

Obvious user benefit; also improves

cross-platform compatibility

|

|

File name encoding

|

MacRoman

|

Unicode

|

Allows for international-friendly file names,

including mixed script names

|

|

File/folder attributes

|

Support for fixed size attributes (FileInfo and

ExtendedFileInfo)

|

Allows for future meta-data extensions

|

Future systems may use metadata for a richer

Finder experience

|

|

OS startup support

|

System Folder ID

|

Also supports a dedicated startup file

|

May help non-Mac OS systems to boot from HFS

Plus volumes

|

|

catalog node size

|

512 bytes

|

4 KB

|

Maintains efficiency in the face of the other

changes. (This larger catalog node size is due to

the much longer file names [512 bytes as opposed to

32 bytes], and larger catalog records (because of

more/larger fields)).

|

|

Maximum file size

|

231 bytes

|

263 bytes

|

Obvious user benefit, especially for multimedia

content creators. |

The extent to which these HFS Plus features are available

through a programming interface is OS dependent. Mac OS versions

less than 9.0 do not provide programming interfaces for any

HFS Plus-specific features.

To summarize, the key goals that guided the design of the

HFS Plus volume format were:

- efficient use of disk space,

- international-friendly file names,

- future support for named forks, and

- ease booting on non-Mac OS operating systems.

The following sections describes these goals, and the

differences between HFS and HFS Plus required to meet these

goals.

Efficient Use of Disk Space

HFS divides the total space on a volume into equal-sized

pieces called allocation blocks. It uses 16-bit fields to

identify a particular allocation block, so there must be

less than 216 (65,536) allocation blocks on an

HFS volume. The size of an allocation block is typically the

smallest multiple of 512 such that there are less than

65,536 allocation blocks on the volume (i.e., the volume

size divided by 65,535, rounded up to a multiple of 512).

Any non-empty fork must occupy an integral number of

allocation blocks. This means that the amount of space

occupied by a fork is rounded up to a multiple of the

allocation block size. As volumes (and therefore allocation

blocks) get bigger, the amount of allocated but unused space

increases.

HFS Plus uses 32-bit values to identify allocation

blocks. This allows up to 2 32 (4,294,967,296)

allocation blocks on a volume. More allocation blocks means

a smaller allocation block size, especially on volumes of 1

GB or larger, which in turn means less average wasted space

(the fraction of an allocation block at the end of a fork,

where the entire allocation block is not actually used). It

also means you can have more files, since the available

space can be more finely distributed among a larger number

of files. This change is especially beneficial if the volume

contains a large number of small files.

International-Friendly File Names

HFS uses 31-byte strings to store file names. HFS does

not store any kind of script information with the file name

to indicate how it should be interpreted. File names are

compared and sorted using a routine that assumes a Roman

script, wreaking havoc for names that use some other script

(such as Japanese). Worse, this algorithm is buggy, even for

Roman scripts. The Finder and other applications interpret

the file name based on the script system in use at runtime.

|

Note:

The problem with using non-Roman scripts in an HFS

file name is that HFS compares file names in a case-

insensitive fashion. The case-insensitive

comparison algorithm assume a MacRoman encoding.

When presented with non-Roman text, this algorithm

fails in strange ways. The upshot is that HFS

decides that certain non-Roman file names are

duplicates of other file names, even though they

are not duplicates in the source encoding.

|

HFS Plus uses up to 255 Unicode characters to store file

names. Allowing up to 255 characters makes it easier to have

very descriptive names. Long names are especially useful

when the name is computer-generated (such as Java class

names).

The HFS catalog B-tree uses 512-byte nodes. An HFS Plus

file name can occupy up to 512 bytes (including the length

field). Since a B-tree index node must store at least two

keys (plus pointers and node descriptor), the HFS Plus

catalog must use a larger node size. The typical node size

for an HFS Plus catalog B-tree is 4 KB.

In the HFS catalog B-tree, the keys stored in an index

node always occupy a fixed amount of space, the maximum key

size. In HFS Plus, the keys in an index node may occupy a

variable amount of space determined by the actual size of

the key. This allows for less wasted space in index nodes

and creates, on typical disks, a substantially larger

branching factor in the tree (requiring fewer node accesses

to find any given record).

Future Support for Named Forks

Files on an HFS volume have two forks: a data fork and a

resource fork, either of which may be empty (zero length).

Files and directories also contain a small amount of

additional information (known as catalog information or

metadata) such as the modification date or Finder info.

Apple software teams and third-party developers often

need to store information associated with particular files

and directories. In some cases (for example, custom icons for

files), the data or resource fork is appropriate. But in

other cases (for example, custom icons for directories, or File

Sharing access privileges), using the data or resource fork

is not appropriate or not possible.

A number of products have implemented special-purpose

solutions for storing their file- and directory-related

data. But because these are not managed by the file system,

they can become inconsistent with the file and directory

structure.

HFS Plus has an attribute file, another B-tree, that can

be used to store additional information for a file or

directory. Since it is part of the volume format, this

information can be kept with the file or directory as is it

moved or renamed, and can be deleted when the file or

directory is deleted. The contents of the attribute file's

records have not been fully defined yet, but the goal is to

provide an arbitrary number of forks, identified by Unicode

names, for any file or directory.

|

Note:

Because the attributes file has not been fully

defined yet, current implementations are unable to

delete named forks when a file or directory is

deleted. Future implementations that properly

delete named forks will need to check for these

orphaned named forks and delete them when the

volume is mounted. The

lastMountedVersion field of the volume

header can be used to detect when such a check

needs to take place.

Whenever possible, an application should delete

named forks rather than orphan them.

|

Easy Startup of Alternative Operating Systems

HFS Plus defines a special startup file,

an unstructured fork that can be found easily during system

startup. The location and size of the startup file is

described in the volume header.

The startup file is especially useful on systems that don't

have HFS or HFS Plus support in ROM. In many respects, the

startup file is a generalization of the HFS boot blocks, one

that provides a much larger, variable-sized amount of

storage.

Back to top

Core Concepts

HFS Plus uses a number of interrelated structures to

manage the organization of data on the volume. These

structures include:

Each of these complex structures is described in its own

section. The purpose of this section is to give an overview

of the volume format, describe how the structures fit

together, and define the primitive data types used by HFS

Plus.

Terminology

HFS Plus is a specification of how a volume (files

that contain user data, along with the structure to retrieve

that data) exists on a disk (the medium on which user

data is stored). The storage space on a disk is divided into

units called sectors. A sector is the smallest

part of a disk that the disk's driver software will read or write

in a single operation (without having to read or write additional

data before or after the requested data). The size of a sector is

usually based on the way the data is physically laid out on the disk.

For hard disks, sectors are typically 512 bytes. For optical media,

sectors are typically 2048 bytes.

Most of the data structures on an HFS Plus volume do not

depend on the size of a sector, with the exception of the

journal. Because the journal does rely

on accessing individual sectors, the sector size is stored

in the jhdr_size field of the

journal header (if the

volume has a journal).

HFS Plus allocates space in units called allocation

blocks; an allocation block is simply a group of

consecutive bytes. The size (in bytes) of an allocation

block is a power of two, greater than or equal to 512, which

is set when the volume is initialized. This value cannot be

easily changed without reinitializing the volume. Allocation

blocks are identified by a 32-bit allocation block

number, so there can be at most 232

allocation blocks on a volume. Current implementations of the

file system are optimized for 4K allocation blocks.

|

Note:

For the best performance, the allocation block size should

be a multiple of the sector size. If the

volume has an HFS wrapper, the

wrapper's allocation block size and allocation block start

should also be multiples of the sector size to

allow the best performance.

|

All of the volume's structures, including the volume

header, are part of one or more allocation blocks (with the possible

exception of the alternate volume header, discussed

below). This differs from HFS,

which has several structures (including the boot blocks, master

directory block, and bitmap) which are not part of any

allocation block.

To promote file contiguity and avoid

fragmentation, disk space is typically allocated to files in

groups of allocation blocks, or clumps. The clump size

is always a multiple of the allocation block size. The default

clump size is specified in the volume header.

|

IMPORTANT:

The actual algorithm used to extend files is not part

of this specification. The implementation is not

required to act on the clump values in the volume

header; it merely provides space to store those

values.

|

|

Note:

The current non-contiguous algorithm in Mac OS will

begin allocating at the next free block it finds.

It will extend its allocation up to a multiple of

the clump size if there is sufficient free space

contiguous with the end of the requested

allocation. Space is not allocated in contiguous

clump-sized pieces.

|

Every HFS Plus volume must have a volume header.

The volume header contains sundry information about the

volume, such as the date and time of the volume's creation

and the number of files on the volume, as well as the

location of the other key structures on the volume. The

volume header is always located at 1024 bytes from the

start of the volume.

A copy of the volume header, known as the alternate

volume header, is stored starting at 1024 bytes before

the end of the volume. The first 1024 bytes of volume

(before the volume header), and the last 512 bytes of the

volume (after the alternate volume header) are

reserved.

All of the allocation blocks containing the volume header,

alternate volume header, or the reserved areas before the

volume header or after the alternate volume header, are marked

as used in the allocation file.

The actual number of allocation blocks marked this way depends

on the allocation block size.

An HFS Plus volume contains five special files,

which store the file system structures required to access

the file system payload: folders, user files, and

attributes. The special files are the catalog file, the

extents overflow file, the allocation file, the attributes

file and the startup file. Special files only have a single

fork (the data fork) and the extents of that fork are

described in the volume header.

The catalog file is a special file that describes

the folder and file hierarchy on a volume. The catalog file

contains vital information about all the files and folders

on a volume, as well as the catalog information, for

the files and folders that are stored in the catalog file.

The catalog file is organized as a B-tree (or "balanced

tree") to allow quick and efficient searches through a large

folder hierarchy.

The catalog file stores the file and folder names, which

consist of up to 255 Unicode characters, as described

below.

|

Note:

The B-Trees section contains

an in-depth description of the B-trees used by HFS

Plus. |

The attributes file is another special file which

contains additional data for a file or folder. Like the

catalog file, the attributes file is organized as a B-tree.

In the future, it will be used to store information about

additional forks. (This is similar to the way the catalog

file stores information about the data and resource forks of

a file.)

HFS Plus tracks which allocation blocks belong to a fork

by maintaining a list of the fork's extents. An

extent is a contiguous range of allocation blocks

allocated to some fork, represented by a pair of numbers:

the first allocation block number and the number of

allocation blocks. For a user file, the first eight extents

of each fork are stored in the volume's catalog file. Any

additional extents are stored in the extents overflow

file, which is also organized as a B-tree.

The extents overflow file also stores additional extents

for the special files except for the extents overflow file

itself. However, if the startup file requires more than the

eight extents in the Volume Header (and thus requires

additional extents in the extents overflow file), it would

be much harder to access, and defeat the purpose of the

startup file. So, in practice, a startup file should be

allocated such that it doesn't need additional extents in

the extents overflow file.

The allocation file is a special file which

specifies whether an allocation block is used or free. This

performs the same role as the HFS volume bitmap, although

making it a file adds flexibility to the volume format.

The startup file is another special file which

facilitates booting of non-Mac OS computers from HFS Plus

volumes.

Finally, the bad block file prevents the volume

from using certain allocation blocks because the portion of

the media that stores those blocks is defective. The bad

block file is neither a special file nor a user file; this

is merely convention used in the extents overflow file. See

Bad Block File for more details.

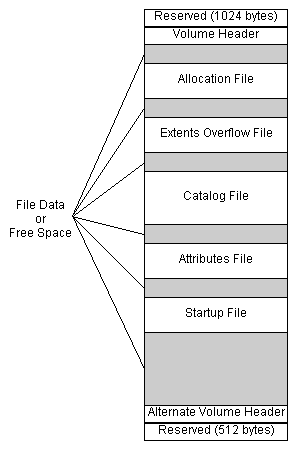

Broad Structure

The bulk of an HFS Plus volume consists of seven types of

information or areas:

- user file forks,

- the allocation file (bitmap),

- the catalog file,

- the extents overflow file,

- the attributes file,

- the startup file, and

- unused space.

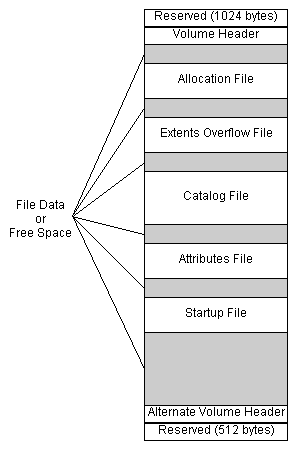

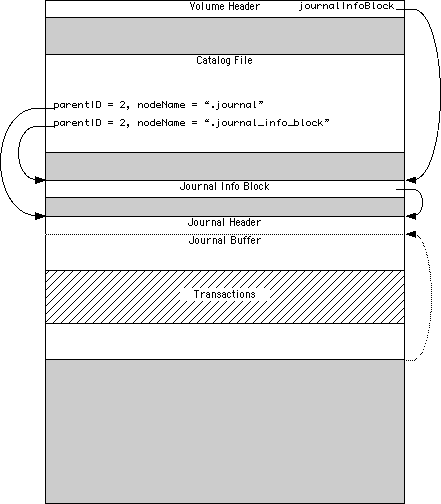

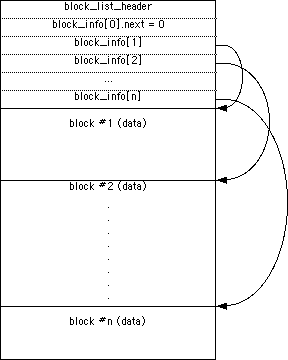

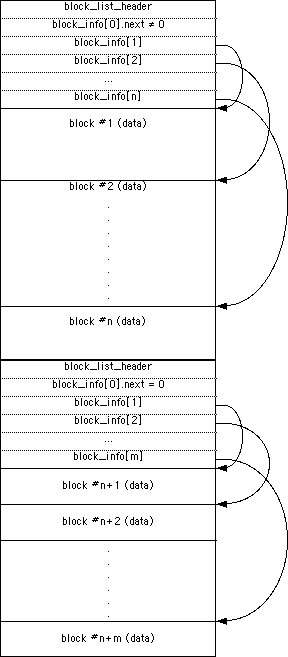

The general structure of an HFS Plus volume is

illustrated in Figure 1.

Figure 1. Organization of an HFS Plus

Volumes.

The volume header is always at a fixed

location (1024 bytes from the start of the volume).

However, the special files can appear anywhere between

the volume header block and the alternate volume header

block. These files can appear in any order and are not

necessarily contiguous.

The information on HFS Plus volumes (with the possible

exception of the alternate volume header, as discussed

below) is organized solely

in allocation blocks. Allocation blocks are simply a means

of grouping space on the media into convenient parcels.

The size of an allocation block is a power of two,

and at least 512. The allocation block size is a volume

header parameter whose value is set when the volume is

initialized; it cannot be changed easily without

reinitializing the volume.

|

Note:

The allocation block size is a classic

speed-versus- space tradeoff. Increasing the

allocation block size decreases the size of the

allocation file, and often reduces the number of

separate extents that must be manipulated for every

file. It also tends to increase the average size of

a disk I/O, which decreases overhead. Decreasing

the allocation block size reduces the average

number of wasted bytes per file, making more

efficient use of the volume's space.

|

|

WARNING:

While HFS Plus disks with an allocation block size

smaller than 4 KB are legal, DTS recommends that

you always use a minimum 4 KB allocation block

size. Disks with a smaller allocation block size

will be markedly slower when used on systems that

do 4 KB clustered I/O, such as Mac OS X Server.

|

Primitive Data Types

This section describes the primitive data types used on

an HFS Plus volume. All data structures in this volume are

defined in the C language. The specification assumes that

the compiler will not insert any padding fields. Any

necessary padding fields are explicitly declared.

|

IMPORTANT:

The HFS Plus volume format is largely derived from

the HFS volume format. When defining the new

format, it was decided to remove unused fields

(primarily legacy MFS fields) and arrange all the

remaining fields so that similar fields were

grouped together and that all fields had proper

alignment (using PowerPC alignment rules).

|

Reserved and Pad

Fields

In many places this specification describes a field, or

bit within a field, as reserved. This has a definite

meaning, namely:

- When creating a structure with a reserved field, an

implementation must set the field to zero.

- When reading existing structures, an implementation

must ignore any value in the field.

- When modifying a structure with a reserved field, an

implementation must preserve the value of the reserved

field.

This definition allows for backward-compatible

enhancements to the volume format.

Pad fields have exactly the same semantics as a reserved

field. The different name merely reflects the designer's

goals when including the field, not the behavior of the

implementation.

Integer Types

All integer values are defined by one of the following

primitive types: UInt8, SInt8,

UInt16, SInt16,

UInt32, SInt32,

UInt64, and SInt64. These

represent unsigned and signed (2's complement) 8-bit,

16-bit, 32-bit, and 64-bit numbers.

All multi-byte integer values are stored in big-endian

format. That is, the bytes are stored in order from most

significant byte through least significant byte, in

consecutive bytes, at increasing offset from the start of a

block. Bits are numbered from 0 to n-1 (for types

UIntn and SIntn),

with bit 0 being the least significant bit.

HFS Plus Names

File and folder names on HFS Plus consist of up to 255

Unicode characters with a preceding 16-bit length, defined

by the type HFSUniStr255.

struct HFSUniStr255 {

UInt16 length;

UniChar unicode[255];

};

typedef struct HFSUniStr255 HFSUniStr255;

typedef const HFSUniStr255 *ConstHFSUniStr255Param;

|

UniChar is a UInt16 that

represents a character as defined in the Unicode character

set defined by The Unicode Standard, Version 2.0

[Unicode, Inc. ISBN 0-201-48345-9].

HFS Plus stores strings fully decomposed and in canonical

order. HFS Plus compares strings in a case-insensitive

fashion. Strings may contain Unicode characters that must

be ignored by this comparison. For more details on these

subtleties, see Unicode

Subtleties.

A variant of HFS Plus, called HFSX,

allows volumes whose names are compared in a case-sensitive

fashion. The names are fully decomposed and in canonical order,

but no Unicode characters are ignored during the comparison.

Text Encodings

Traditional Mac OS programming interfaces pass filenames as

Pascal strings (either as a StringPtr or as a

Str63 embedded in an FSSpec). The

characters in those strings are not Unicode; the encoding

varies depending on how the system software was localized

and what language kits are installed. Identical sequences of

bytes can represent vastly different Unicode character

sequences. Similarly, many Unicode characters belong to more

than one Mac OS text encoding.

HFS Plus includes two features specifically designed to

help Mac OS handle the conversion between Mac OS-encoded

Pascal strings and Unicode. The first feature is the

textEncoding field of the file and folder

catalog records. This field is defined as a hint to be used

when converting the record's Unicode name back to a Mac OS-

encoded Pascal string.

The valid values for the textEncoding field

are defined in Table 2.

Table 2 Text Encodings

|

Encoding Name

|

Value

|

Encoding Name

|

Value

|

|

MacRoman

|

0

|

MacThai

|

21

|

|

MacJapanese

|

1

|

MacLaotian

|

22

|

|

MacChineseTrad

|

2

|

MacGeorgian

|

23

|

|

MacKorean

|

3

|

MacArmenian

|

24

|

|

MacArabic

|

4

|

MacChineseSimp

|

25

|

|

MacHebrew

|

5

|

MacTibetan

|

26

|

|

MacGreek

|

6

|

MacMongolian

|

27

|

|

MacCyrillic

|

7

|

MacEthiopic

|

28

|

|

MacDevanagari

|

9

|

MacCentralEurRoman

|

29

|

|

MacGurmukhi

|

10

|

MacVietnamese

|

30

|

|

MacGujarati

|

11

|

MacExtArabic

|

31

|

|

MacOriya

|

12

|

MacSymbol

|

33

|

|

MacBengali

|

13

|

MacDingbats

|

34

|

|

MacTamil

|

14

|

MacTurkish

|

35

|

|

MacTelugu

|

15

|

MacCroatian

|

36

|

|

MacKannada

|

16

|

MacIcelandic

|

37

|

|

MacMalayalam

|

17

|

MacRomanian

|

38

|

|

MacSinhalese

|

18

|

MacFarsi

|

140 (49)

|

|

MacBurmese

|

19

|

MacUkrainian

|

152 (48)

|

|

MacKhmer

|

20

|

|

|

|

IMPORTANT:

Non-Mac OS implementations of HFS Plus may choose

to simply ignore the textEncoding

field. In this case, the field must be treated as

a reserved

field.

|

|

Note:

Mac OS uses the textEncoding field in

the following way. When a file or folder is created

or renamed, Mac OS converts the supplied Pascal

string to a HFSUniStr255. It stores

the source text encoding in the

textEncoding field of the catalog

record. When Mac OS needs to create a Pascal string

for that record, it uses the

textEncoding as a hint to the text

conversion process. This hint ensures a high-degree

of round-trip conversion fidelity, which in turn

improves compatibility.

|

The second use of text encodings in HFS Plus is the

encodingsBitmap field of the volume header. For

each encoding used by a catalog node on the volume, the

corresponding bit in the encodingsBitmap field

must be set.

It is acceptable for a bit in this bitmap to be set even

though no names on the volume use that encoding. This means

that when an implementation deletes or renames an object, it

does not have to clear the encoding bit if that was the last

name to use the given encoding.

|

IMPORTANT:

The text encoding value is used as the number of

the bit to set in encodingsBitmap to

indicate that the encoding is used on the volume.

However, encodingsBitmap is only 64

bits long, and thus the text encoding values for

MacFarsi and MacUkrainian cannot be used as bit

numbers. Instead, another bit number (shown in

parenthesis) is used.

|

|

Note:

Mac OS uses the encodingsBitmap field

to determine which text encoding conversion tables

to load when the volume is mounted. Text encoding

conversion tables are large, and loading them

unnecessarily is a waste of memory. Most systems

only use one text encoding, so there is a

substantial benefit to recording which encodings

are required on a volume-by-volume basis.

|

|

WARNING:

Non-Mac OS implementations of HFS Plus must

correctly maintain the encodingsBitmap

field. Specifically, if the implementation sets the

textEncoding field a catalog record to

a text-encoding value, it must ensure that the

corresponding bit is set in

encodingsBitmap to ensure correct

operation when that disk is mounted on a system

running Mac OS.

|

HFS Plus Dates

HFS Plus stores dates in several data structures,

including the volume header and catalog records. These dates

are stored in unsigned 32-bit integers (UInt32)

containing the number of seconds since midnight, January 1,

1904, GMT. This is slightly different from HFS, where the

value represents local time.

The maximum representable date is February 6, 2040 at

06:28:15 GMT.

The date values do not account for leap seconds. They do

include a leap day in every year that is evenly divisible by

four. This is sufficient given that the range of

representable dates does not contain 1900 or 2100, neither

of which have leap days.

The implementation is responsible for converting these

times to the format expected by client software. For

example, the Mac OS File Manager passes dates in local time;

the Mac OS HFS Plus implementation converts dates between

local time and GMT as appropriate.

|

Note:

The creation date stored in

the Volume Header is NOT stored in GMT; it is

stored in local time. The reason for this is that

many applications (including backup utilities) use

the volume's creation date as a relatively unique

identifier. If the date was stored in GMT, and

automatically converted to local time by an

implementation (like Mac OS), the value would

appear to change when the local time zone or

daylight savings time settings change (and thus

cause some applications to improperly identify the

volume). The use of the volume's creation date as a

unique identifier outweighs its use as a date. This

change was introduced late in the Mac OS 8.1

project.

|

HFS Plus Permissions

For each file and folder, HFS Plus maintains a record

containing access permissions, defined by the

HFSPlusBSDInfo structure.

struct HFSPlusBSDInfo {

UInt32 ownerID;

UInt32 groupID;

UInt8 adminFlags;

UInt8 ownerFlags;

UInt16 fileMode;

union {

UInt32 iNodeNum;

UInt32 linkCount;

UInt32 rawDevice;

} special;

};

typedef struct HFSPlusBSDInfo HFSPlusBSDInfo;

|

The fields have the following meaning:

ownerID- The Mac OS X user ID of the owner of the file or folder.

Mac OS X versions prior to 10.3 treats user ID 99 as if it was the user ID of the

user currently logged in to the console. If no user is logged in to the

console, user ID 99 is treated as user ID 0 (root). Mac OS X version 10.3

treats user ID 99 as if it was the user ID of the process making the call

(in effect, making it owned by everyone simultaneously). These substitutions

happen at run time. The actual user ID on disk is not changed.

groupID- The Mac OS X group ID of the group associated with the

file or folder. Mac OS X typically maps group ID 99 to the group

named "unknown." There is no run time substitution of group IDs in Mac OS X.

adminFlags- BSD flags settable by the super-user only. This field

corresponds to bits 16 through 23 of the

st_flags field of

struct stat in Mac OS X. See the

manual page for chflags(2) for more information. The following table

gives the bit position in the adminFlags field and the name of the

corresponding mask used in the st_flags field.

| Bit | st_flags mask | Meaning |

|---|

| 0 | SF_ARCHIVED | File has been archived |

| 1 | SF_IMMUTABLE | File may not be changed |

| 2 | SF_APPEND | Writes to file may only append |

ownerFlags- BSD flags settable by the owner of the file or directory,

or by the super-user. This field corresponds to bits 0 through 7 of the

st_flags field of

struct stat in Mac OS X. See the

manual page for chflags(2) for more information. The following table

gives the bit position in the ownerFlags field and the name of the

corresponding mask used in the st_flags field.

| Bit | st_flags mask | Meaning |

|---|

| 0 | UF_NODUMP | Do not dump (back up or archive) this file |

| 1 | UF_IMMUTABLE | File may not be changed |

| 2 | UF_APPEND | Writes to file may only append |

| 3 | UF_OPAQUE | Directory is opaque (see below) |

fileMode- BSD file type and mode bits. Note that the constants from the header

shown below are in octal (base eight), not hexadecimal.

#define S_ISUID 0004000 /* set user id on execution */

#define S_ISGID 0002000 /* set group id on execution */

#define S_ISTXT 0001000 /* sticky bit */

#define S_IRWXU 0000700 /* RWX mask for owner */

#define S_IRUSR 0000400 /* R for owner */

#define S_IWUSR 0000200 /* W for owner */

#define S_IXUSR 0000100 /* X for owner */

#define S_IRWXG 0000070 /* RWX mask for group */

#define S_IRGRP 0000040 /* R for group */

#define S_IWGRP 0000020 /* W for group */

#define S_IXGRP 0000010 /* X for group */

#define S_IRWXO 0000007 /* RWX mask for other */

#define S_IROTH 0000004 /* R for other */

#define S_IWOTH 0000002 /* W for other */

#define S_IXOTH 0000001 /* X for other */

#define S_IFMT 0170000 /* type of file mask */

#define S_IFIFO 0010000 /* named pipe (fifo) */

#define S_IFCHR 0020000 /* character special */

#define S_IFDIR 0040000 /* directory */

#define S_IFBLK 0060000 /* block special */

#define S_IFREG 0100000 /* regular */

#define S_IFLNK 0120000 /* symbolic link */

#define S_IFSOCK 0140000 /* socket */

#define S_IFWHT 0160000 /* whiteout */

|

In some versions of Unix, the sticky bit, S_ISTXT, is used

to indicate that an executable file's code should remain in memory

after the executable finishes; this can help performance if the same

executable is used again soon. Mac OS X does not use this optimization.

If the sticky bit is set for a directory, then Mac OS X restricts

movement, deletion, and renaming of files in that directory.

Files may be removed or renamed only if the user has write access

to the directory; and is the owner of the file or the directory,

or is the super-user.

special- This field is used only for certain special kinds of files.

For directories, and most files, this field is unused and

reserved. When used,

this field is used as one of the following:

iNodeNum- For hard link files, this field contains the link reference number.

See the Hard Links section for more

information.

linkCount- For indirect node files, this field contains the number of hard links

that point at this indirect node file. See the

Hard Links section for more information.

rawDevice- For block and character special devices files (when the

S_IFMT

field contains S_IFCHR or S_IFBLK), this field

contains the device number.

|

WARNING:

Mac OS 8 and 9 treat the permissions as

reserved.

|

|

Note:

The S_IFWHT and UF_OPAQUE

values are used when the file system is mounted as part

of a union mount. A union mount presents the

combination (union) of several file systems as a single

file system. Conceptually, these file systems are

layered, one on top of another. If a file or directory

appears in multiple layers, the one in the top most

layer is used. All changes are made to the top most

file system only; the others are read-only. To delete a

file or directory that appears in a layer other than the

top layer, a whiteout entry (file type

S_IFWHT) is created in the top layer. If a

directory that appears in a layer other than the top

layer is deleted and later recreated, the contents in

the lower layer must be hidden by setting the

UF_OPAQUE flag in the directory in the top

layer. Both S_IFWHT and

UF_OPAQUE hide corresponding names in lower

layers by preventing a union mount from accessing the

same file or directory name in a lower layer.

|

|

Note:

If the S_IFMT field (upper 4 bits) of the fileMode

field is zero, then Mac OS X assumes that the permissions structure is

uninitialized, and internally uses default values for all of the fields.

The default user and group IDs are 99, but can be changed at the time the

volume is mounted. This default ownerID is then subject to

substitution as described above.

This means that files created by Mac OS 8 and 9, or any other implementation

that sets the permissions fields to zeroes, will behave as if the

"ignore ownership" option is enabled for those files, even if "ignore

ownership" is disabled for the volume as a whole.

|

Fork Data Structure

HFS Plus maintains information about the contents of a

file using the HFSPlusForkData structure. Two

such structures -- one for the resource and one for the data

fork -- are stored in the catalog record for each user file.

In addition, the volume header contains a fork data

structure for each special file.

An unused extent descriptor in an extent record would

have both startBlock and

blockCount set to zero. For example, if a given

fork occupied three extents, then the last five extent

descriptors would be all zeroes.

struct HFSPlusForkData {

UInt64 logicalSize;

UInt32 clumpSize;

UInt32 totalBlocks;

HFSPlusExtentRecord extents;

};

typedef struct HFSPlusForkData HFSPlusForkData;

typedef HFSPlusExtentDescriptor HFSPlusExtentRecord[8];

|

The fields have the following meaning:

logicalSize- The size, in bytes, of the valid data in the fork.

clumpSize- For

HFSPlusForkData structures in the

volume header, this is the fork's

clump size, which is used in preference to the

default clump size in the volume header.

For HFSPlusForkData structures in a

catalog record, this field was intended to store a per-fork

clump size to override the default clump size

in the volume header. However, Apple

implementations prior to Mac OS X version 10.3 ignored this field.

As of Mac OS X version 10.3, this field is used to keep track of the

number of blocks actually read from the fork. See the Hot

Files section for more information.

totalBlocks- The total number of allocation blocks used by all the

extents in this fork.

extents- An array of extent descriptors for the fork. This

array holds the first eight extent descriptors. If more

extent descriptors are required, they are stored in the

extents overflow file.

|

IMPORTANT:

The HFSPlusExtentRecord is also the

data record used in the

extents overflow

file (the extent record).

|

The HFSPlusExtentDescriptor structure is

used to hold information about a specific extent.

struct HFSPlusExtentDescriptor {

UInt32 startBlock;

UInt32 blockCount;

};

typedef struct HFSPlusExtentDescriptor HFSPlusExtentDescriptor;

|

The fields have the following meaning:

startBlock- The first allocation block in the extent.

blockCount- The length, in allocation blocks, of the extent.

Back to top

Volume Header

Each HFS Plus volume contains a volume header

1024 bytes from the start of the volume. The volume

header -- analogous to the master directory block (MDB)

for HFS -- contains information about the volume as a whole,

including the location of other key structures in the volume.

The implementation is responsible for ensuring that this

structure is updated before the volume is unmounted.

A copy of the volume header, the alternate volume header,

is stored starting 1024 bytes before the end of the volume. The

implementation should only update this copy when the length

or location of one of the special files changes. The alternate volume

header is intended for use solely by disk repair utilities.

The first 1024 bytes and the

last 512 bytes of the volume are

reserved.

|

Note:

The first 1024 bytes are reserved for use as boot

blocks; the traditional Mac OS Finder will write to them when

the System Folder changes. The boot block format is

outside the scope of this specification. It is

defined in

Inside

Macintosh: Files.

The last 512 bytes were used during Apple's CPU

manufacturing process.

|

The allocation block (or blocks)

containing the first 1536 bytes (reserved space plus volume header)

are marked as used in the allocation file (see the

Allocation File section).

Also, in order to accommodate the alternate volume header and

the reserved space following it, the last allocation block (or two

allocation blocks, if the volume is formatted with 512-byte

allocation blocks) is also marked as used in the allocation

file.

|

IMPORTANT:

The alternate volume header is always stored at offset

1024 bytes from the end of the volume. If the

disk size is not an even multiple of the allocation

block size, this area may lie beyond the last

allocation block. However, the last allocation

block (or two allocation blocks for a volume

formatted with 512-byte allocation blocks) is still

reserved even if the alternate volume header is not

stored there.

|

The volume header is described by the

HFSPlusVolumeHeader type.

struct HFSPlusVolumeHeader {

UInt16 signature;

UInt16 version;

UInt32 attributes;

UInt32 lastMountedVersion;

UInt32 journalInfoBlock;

UInt32 createDate;

UInt32 modifyDate;

UInt32 backupDate;

UInt32 checkedDate;

UInt32 fileCount;

UInt32 folderCount;

UInt32 blockSize;

UInt32 totalBlocks;

UInt32 freeBlocks;

UInt32 nextAllocation;

UInt32 rsrcClumpSize;

UInt32 dataClumpSize;

HFSCatalogNodeID nextCatalogID;

UInt32 writeCount;

UInt64 encodingsBitmap;

UInt32 finderInfo[8];

HFSPlusForkData allocationFile;

HFSPlusForkData extentsFile;

HFSPlusForkData catalogFile;

HFSPlusForkData attributesFile;

HFSPlusForkData startupFile;

};

typedef struct HFSPlusVolumeHeader HFSPlusVolumeHeader;

|

The fields have the following meaning:

signature- The volume signature, which must be

kHFSPlusSigWord ('H+') for an

HFS Plus volume, or kHFSXSigWord ('HX')

for an HFSX volume.

version- The version of the volume format, which is currently

4 (

kHFSPlusVersion) for HFS Plus volumes, or

5 (kHFSXVersion) for HFSX

volumes.

attributes- Volume attributes, as

described below.

lastMountedVersion- A value which uniquely identifies the implementation

that last mounted this volume for writing. This value can

be used by future implementations to detect volumes that

were last mounted by older implementations and check them

for deficiencies. Any code which modifies the on disk

structures must also set this field to a unique value which

identifies that code. Third-party implementations of HFS

Plus should place a

registered creator

code in this field. The value used by Mac OS 8.1 to

9.2.2 is

'8.10'.

The value used by Mac OS X is '10.0'. The

value used by a journaled volume

(including HFSX) in Mac OS X is 'HFSJ'.

The value used by fsck_hfs on Mac OS X is 'fsck'.

|

Note:

It is very important for implementations (and

utilities that directly modify the volume!) to set

the lastMountedVersion. It is also

important to choose different values when

non-trivial changes are made to an implementation

or utility. If a bug is found in an implementation

or utility, and it sets the

lastMountedVersion correctly, it will

be much easier for other implementations and

utilities to detect and correct any problems.

|

journalInfoBlock- The allocation block number of the allocation block

which contains the

JournalInfoBlock

for this volume's journal. This field is valid only if bit

kHFSVolumeJournaledBit is set in the

attribute field; otherwise, this field is

reserved.

createDate- The date and time when the volume was created. See

HFS Plus Dates for a

description of the format.

modifyDate- The date and time when the volume was last modified.

See HFS Plus Dates for a

description of the format.

backupDate- The date and time when the volume was last backed up.

The volume format requires no special action on this

field; it simply defines the field for the benefit of

user programs. See HFS Plus

Dates for a description of the format.

checkedDate- The date and time when the volume was last checked

for consistency. Disk checking tools, such as Disk First

Aid, must set this when they perform a disk check. A disk

checking tool may use this date to perform periodic

checking of a volume.

fileCount- The total number of files on the volume. The

fileCount field does not include the special

files. It should equal the number of file records found

in the catalog file.

folderCount- The total number of folders on the volume.

The

folderCount field does not include the

root folder. It should equal the number of folder records

in the catalog file, minus one (since the root folder has

a folder record in the catalog file).

blockSize- The allocation block size, in bytes.

totalBlocks- The total number of allocation blocks on the disk.

For a disk whose size is an even

multiple of the allocation block size, all areas

on the disk are included in an allocation block,

including the volume header and alternate volume header.

For a disk whose size is not an

even multiple of the allocation block size, only the

allocation blocks that will fit entirely on the disk are

counted here. The remaining space at the end of the

disk is not used by the volume format (except for storing

the alternate volume header, as described above).

freeBlocks- The total number of unused allocation blocks on the

disk.

nextAllocation- Start of next allocation search. The

nextAllocation field is used by Mac OS as a

hint for where to start searching for free allocation blocks

when allocating space for a file. It contains the allocation

block number where the search should begin. An

implementation that doesn't want to use this kind of hint

can just treat the field as reserved. [Implementation

details: traditional Mac OS implementations typically

set it to the first allocation block of the extent most

recently allocated. It is not set to the allocation block

immediately following the most recently allocated extent

because of the likelihood of that extent being shortened

when the file is closed (since a whole clump may have been allocated but not

actually used).] See Allocation

File section for details.

rsrcClumpSize- The default clump

size for resource forks, in bytes. This is a hint to the

implementation as to the size by which a growing file should

be extended. All Apple implementations to date ignore the

rsrcClumpSize and use

dataClumpSize for both data and resource

forks.

dataClumpSize- The default clump

size for data forks, in bytes. This is a hint to the

implementation as to the size by which a growing file should

be extended. All Apple implementations to date ignore the

rsrcClumpSize and use

dataClumpSize for both data and resource

forks.

nextCatalogID- The next unused catalog ID. See

Catalog File for a description

of catalog IDs.

writeCount- This field is incremented every time a volume is

mounted. This allows an implementation to keep the volume

mounted even when the media is ejected (or otherwise

inaccessible). When the media is re-inserted, the

implementation can check this field to determine when the

media has been changed while it was ejected. It is very

important that an implementation or utility change the

writeCount field if it modifies the volume's

structures directly. This is particularly important if it

adds or deletes items on the volume.

encodingsBitmap- This field keeps track of the text encodings used in

the file and folder names on the volume. This bitmap

enables some performance optimizations for

implementations that don't use Unicode names directly.

See the Text Encoding

sections for details.

finderInfo-

This array of 32-bit items contains information used by the Mac OS

Finder, and the system software boot process.

finderInfo[0] contains the directory ID of the

directory containing the bootable system (for example, the

System Folder in Mac OS 8 or 9, or /System/Library/CoreServices

in Mac OS X). It is zero if there is no bootable system on the volume.

This value is typically equal to either finderInfo[3]

or finderInfo[5].

finderInfo[1] contains the parent directory ID of

the startup application (for example, Finder), or zero if the volume

is not bootable.

finderInfo[2] contains the directory ID of a directory

whose window should be displayed in the Finder when the volume is

mounted, or zero if no directory window should be opened. In

traditional Mac OS, this is the first in a linked list of windows

to open; the frOpenChain field of the directory's

Finder Info contains the next directory ID

in the list. The open window list is deprecated. The Mac OS X

Finder will open this directory's window, but ignores the rest

of the open window list. The Mac OS X Finder does not modify

this field.

finderInfo[3] contains the directory ID of a bootable

Mac OS 8 or 9 System Folder, or zero if there isn't one.

finderInfo[4] is reserved.

finderInfo[5] contains the directory ID of a bootable

Mac OS X system (the /System/Library/CoreServices

directory), or zero if there is no bootable Mac OS X system on

the volume.

finderInfo[6] and finderInfo[7] are

used by Mac OS X to contain a 64-bit unique volume identifier.

One use of this identifier is for tracking whether a given

volume's ownership (user ID) information should be honored.

These elements may be zero if no such identifier has been

created for the volume.

allocationFile- Information about the location and size of the

allocation file. See Fork

Data Structure for a description of the

HFSPlusForkData type.

extentsFile- Information about the location and size of the

extents file. See Fork Data

Structure for a description of the

HFSPlusForkData type.

catalogFile- Information about the location and size of the

catalog file. See Fork Data

Structure for a description of the

HFSPlusForkData type.

attributesFile- Information about the location and size of the

attributes file. See Fork

Data Structure for a description of the

HFSPlusForkData type.

startupFile- Information about the location and size of the

startup file. See Fork Data

Structure for a description of the

HFSPlusForkData type.

Volume Attributes

The attributes field of a volume header is

treated as a set of one-bit flags. The definition of the

bits is given by the constants listed below.

enum {

/* Bits 0-6 are reserved */

kHFSVolumeHardwareLockBit = 7,

kHFSVolumeUnmountedBit = 8,

kHFSVolumeSparedBlocksBit = 9,

kHFSVolumeNoCacheRequiredBit = 10,

kHFSBootVolumeInconsistentBit = 11,

kHFSCatalogNodeIDsReusedBit = 12,

kHFSVolumeJournaledBit = 13,

/* Bit 14 is reserved */

kHFSVolumeSoftwareLockBit = 15

/* Bits 16-31 are reserved */

};

|

The bits have the following meaning:

- bits 0-7

- An implementation must treat these as

reserved fields.

kHFSVolumeUnmountedBit (bit 8)- This bit is set if the volume was correctly flushed

before being unmounted or ejected. An implementation must

clear this bit on the media when it mounts a volume for

writing. An implementation must set this bit on the media

as the last step of unmounting a writable volume, after

all other volume information has been flushed. If an

implementation is asked to mount a volume where this bit

is clear, it must assume the volume is inconsistent, and

do appropriate

consistency

checking before using the volume.

kHFSVolumeSparedBlocksBit (bit 9)- This bit is set if there are any records in the

extents overflow file for bad blocks (belonging to file

ID

kHFSBadBlockFileID). See

Bad Block File for details.

kHFSVolumeNoCacheRequiredBit (bit 10)- This bit is set if the blocks from this volume should

not be cached. For example, a RAM or ROM disk is actually

stored in memory, so using additional memory to cache the

volume's contents would be wasteful.

kHFSBootVolumeInconsistentBit (bit 11)- This bit is similar to

kHFSVolumeUnmountedBit, but inverted in

meaning. An implementation must set this bit on the media

when it mounts a volume for writing. An implementation

must clear this bit on the media as the last step of

unmounting a writable volume, after all other volume

information has been flushed. If an implementation is

asked to mount a volume where this bit is set, it must

assume the volume is inconsistent, and do appropriate

consistency

checking before using the volume.

kHFSCatalogNodeIDsReusedBit (bit 12)- This bit is set when the

nextCatalogID

field overflows 32 bits, forcing smaller catalog node IDs to be reused. When this

bit is set, it is common (and not an error) for catalog

records to exist with IDs greater than or equal to

nextCatalogID. If this bit is set, you must

ensure that IDs assigned to newly created catalog records do

not conflict with IDs used by existing records.

kHFSVolumeJournaledBit (bit 13)- If this bit is set, the volume has a

journal, which can be located using the

journalInfoBlock

field of the Volume Header.

- bit 14

- An implementation must treat this bit as

reserved.

kHFSVolumeSoftwareLockBit (bit 15)- This bit is set if the volume is write-protected due

to a software setting. Any implementations must refuse to

write to a volume with this bit set. This flag is

especially useful for write-protecting a volume on a

media that cannot be write-protected otherwise, or for

protecting an individual partition on a partitioned

device.

- bits 16-31

- An implementation must treat these bits as

reserved.

|

Note:

Mac OS X versions 10.0 to 10.3 don't properly honor

kHFSVolumeSoftwareLockBit. They incorrectly

allow such volumes to be modified. This bug is expected

to be fixed in a future version of Mac OS X. (r. 3507614)

|

|

Note:

An implementation may keep a copy of the attributes

in memory and use bits 0-7 for its own runtime

flags. As an example, Mac OS uses bit 7,

kHFSVolumeHardwareLockBit, to indicate

that the volume is write-protected due to some

hardware setting.

|

|

Note:

The existence of two volume consistency bits

(kHFSVolumeUnmountedBit and

kHFSBootVolumeInconsistentBit)

deserves an explanation. Macintosh ROMs check the

consistency of a boot volume if

kHFSVolumeUnmountedBit is clear. The

ROM-based check is very slow, annoyingly so. This

checking code was significantly optimized in Mac OS

7.6. To prevent the ROM check from being used, Mac

OS 7.6 (and higher) leaves the original consistency

check bit (kHFSVolumeUnmountedBit) set

at all times. Instead, an alternative flag

(kHFSBootVolumeInconsistentBit) is

used to signal that the disk needs a consistency

check.

|

|

Note:

For the boot volume, the

kHFSBootVolumeInconsistentBit should

be used as described but

kHFSVolumeUnmountedBit should remain

set; for all other volumes, use the

kHFSVolumeUnmountedBit as described

but keep the

kHFSBootVolumeInconsistentBit clear.

This is an optimization that prevents the Mac OS

ROM from doing a very slow consistency check when

the boot volume is mounted since it only checks

kHFSVolumeUnmountedBit, and won't do a

consistency check; later on, the File Manager will

see the kHFSBootVolumeInconsistentBit

set and do a better, faster consistency check. (It

would be OK to always use both bits at the expense

of a slower Mac OS boot.)

|

Back to top

B-Trees

|

Note:

For a practical description of the algorithms used

to maintain a B-tree, seeAlgorithms in

C, Robert Sedgewick, Addison-Wesley, 1992.

ISBN: 0201514257.

Many textbooks describe B-trees in which an

index node contains N keys and N+1 pointers, and

where keys less than key #X lie in the subtree

pointed to by pointer #X, and keys greater than key

#X lie in the subtree pointed to by pointer #X+1.

(The B-tree implementor defines whether to use

pointer #X or #X+1 for equal keys.)

HFS and HFS Plus are slightly different; in a

given subtree, there are no keys less than the

first key of that subtree's root node.

|

This section describes the B-tree structure used for the

catalog, extents overflow, and attributes files. A B-tree is

stored in file data fork. Each B-tree has a

HFSPlusForkData

structure in the volume header that describes the size and

initial extents of that data fork.

|

Note:

Special files do not have a resource fork because

there is no place to store its

HFSPlusForkData in the volume header.

However, it's still important that the B-tree is in

the data fork because the fork is part of the key

used to store B-tree extents in the extents

overflow file.

|

A B-tree file is divided up into fixed-size nodes,

each of which contains records, which consist of a

key and some data. The purpose of the B-tree is to

efficiently map a key into its corresponding data. To

achieve this, keys must be ordered, that is, there must be a

well-defined way to decide whether one key is smaller than,

equal to, or larger than another key.

The node size (which is expressed in bytes) must

be power of two, from 512 through 32,768, inclusive. The

node size of a B-tree is determined when the B-tree is

created. The logical length of a B-tree file is just the

number of nodes times the node size.

There are four kinds of nodes.

- Each B-tree contains a single header node. The

header node is always the first node in the B-tree. It

contains the information needed to find other any other

node in the tree.

- Map nodes contain map records, which

hold any allocation data (a bitmap that describes the

free nodes in the B-tree) that overflows the map record

in the header node.

- Index nodes hold pointer records that

determine the structure of the B-tree.

- Leaf nodes hold data records that

contain the data associated with a given key. The key for

each data record must be unique.

All nodes share a common structure, described in the next

section.

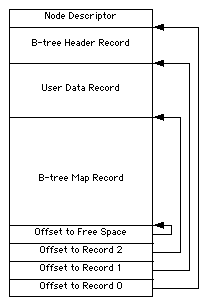

Node Structure

Nodes are indicated by number. The node's number can be

calculated by dividing its offset into the file by the node

size. Each node has the same general structure, consisting

of three main parts: a node descriptor at the beginning of

the node, a list of record offsets at the end of the node,

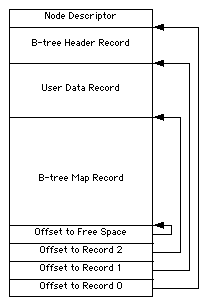

and a list of records. This structure is depicted in Figure

2.

Figure 2. The structure of a node.

The node descriptor contains basic information

about the node as well as forward and backward links to

other nodes. The BTNodeDescriptor data type

describes this structure.

struct BTNodeDescriptor {

UInt32 fLink;

UInt32 bLink;

SInt8 kind;

UInt8 height;

UInt16 numRecords;

UInt16 reserved;

};

typedef struct BTNodeDescriptor BTNodeDescriptor;

|

The fields have the following meaning:

fLink- The node number of the next node of this type, or 0

if this is the last node.

bLink- The node number of the previous node of this type, or

0 if this is the first node.

kind- The type of this node. There are four node kinds,

defined by the constants listed below.

height- The level, or depth, of this node in the B-tree

hierarchy. For the header node, this field must be zero.

For leaf nodes, this field must be one. For index nodes,

this field is one greater than the height of the child

nodes it points to. The height of a map node is zero,

just like for a header node. (Think of map nodes as

extensions of the map record in the header node.)

numRecords- The number of records contained in this node.

reserved- An implementation must treat this as a

reserved field.

A node descriptor is always 14 (which is

sizeof(BTNodeDescriptor)) bytes long, so the

list of records contained in a node always starts 14

bytes from the start of the node. The size of each record

can vary, depending on the record's type and the amount of

information it contains.

The records are accessed using the list of record

offsets at the end of the node. Each entry in this list

is a UInt16 which contains the offset, in

bytes, from the start of the node to the start of the

record. The offsets are stored in reverse order, with the

offset for the first record in the last two bytes of the

node, the offset for the second record is in the previous

two bytes, and so on. Since the first record is always at

offset 14, the last two bytes of the node contain the value

14.

|

IMPORTANT:

The list of record offsets always contains one more

entry than there is records in the node. This entry

contains the offset to the first byte of free space

in the node, and thus indicates the size of the

last record in the node. If there is no free space

in the node, the entry contains its own byte offset

from the start of the node.

|

The kind field of the node descriptor

describes the type of a node, which indicates what kinds of

records it contains and, therefore, its purpose in the

B-tree hierarchy. There are four kinds of node types given

by the following constants:

enum {

kBTLeafNode = -1,

kBTIndexNode = 0,

kBTHeaderNode = 1,

kBTMapNode = 2

};

|

It's important to realise that the B-tree node type

determines the type of records found in the node. Leaf nodes

always contain data records. Index nodes always contain

pointer records. Map nodes always contain map records. The

header node always contains a header record, a reserved

record, and a map record. The four node types and their

corresponding records are described in the subsequent

sections.

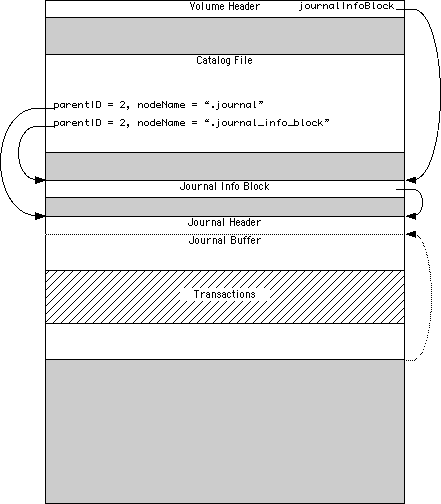

Header Nodes

The first node (node 0) in every B-tree file

is a header node, which contains essential information about

the entire B-tree file. There are three records in the header

node. The first record is the B-tree header record. The second

record is the user data record and is always 128 bytes long.

The last record is the B-tree map record; it occupies all of

the remaining space between the user data record and the record

offsets. The header node is shown in Figure 3.

Figure 3 Header node structure

The fLink field of the header node's node

descriptor contains the node number of the first map node,

or 0 if there are no map nodes. The bLink field

of the header node's node descriptor must be set to zero.

Header Record

The B-tree header record contains general

information about the B-tree such as its size, maximum key

length, and the location of the first and last leaf nodes.

The data type BTHeaderRec describes the

structure of a header record.

struct BTHeaderRec {

UInt16 treeDepth;

UInt32 rootNode;

UInt32 leafRecords;

UInt32 firstLeafNode;

UInt32 lastLeafNode;

UInt16 nodeSize;

UInt16 maxKeyLength;

UInt32 totalNodes;

UInt32 freeNodes;

UInt16 reserved1;

UInt32 clumpSize; // misaligned

UInt8 btreeType;

UInt8 keyCompareType;

UInt32 attributes; // long aligned again

UInt32 reserved3[16];

};

typedef struct BTHeaderRec BTHeaderRec;

|

|

Note:

The root node can be a leaf node (in the case where

there is only a single leaf node, and therefore no

index nodes, as might happen with the catalog file

on a newly initialized volume). If a tree has no

leaf nodes (like the extents overflow file on a

newly initialized volume), the

firstLeafNode,

lastLeafNode, and

rootNode fields will all be zero. If

there is only one leaf node (as may be the case

with the catalog file on a newly initialized

volume), firstLeafNode,

lastLeafNode, and

rootNode will all have the same value

(i.e., the node number of the sole leaf node). The

firstLeafNode and

lastLeafNode fields just make it easy

to walk through all the leaf nodes by just

following fLink/bLink fields.

|

The fields have the following meaning:

treeDepth- The current depth of the B-tree. Always equal to the

height field of the root node.

rootNode- The node number of the root node, the index node that

acts as the root of the B-tree. See

Index Nodes for details. There

is a possibility that the

rootNode is a leaf

node. See Inside

Macintosh: Files, pp. 2-69 for details.

leafRecords- The total number of records contained in all of the

leaf nodes.

firstLeafNode- The node number of the first leaf node. This may be

zero if there are no leaf nodes.

lastLeafNode- The node number of the last leaf node. This may be

zero if there are no leaf nodes.

nodeSize- The size, in bytes, of a node. This is a power of

two, from 512 through 32,768, inclusive.

maxKeyLength- The maximum length of a key in an index or leaf node.

HFSVolumes.h has the

maxKeyLength values for

the catalog and extents files for both HFS and HFS Plus

(kHFSPlusExtentKeyMaximumLength,

kHFSExtentKeyMaximumLength,

kHFSPlusCatalogKeyMaximumLength,

kHFSCatalogKeyMaximumLength). The maximum

key length for the attributes B-tree will probably be a

little larger than for the catalog file. In general,

maxKeyLength has to be small enough

(compared to nodeSize) so that a single node

can fit two keys of maximum size plus the node descriptor

and offsets.

totalNodes- The total number of nodes (be they free or used) in

the B-tree. The length of the B-tree file is this value

times the

nodeSize.

freeNodes- The number of unused nodes in the B-tree.

reserved1- An implementation must treat this as a

reserved field.

clumpSize- Ignored for HFS Plus B-trees. The

clumpSize field of the

HFSPlusForkData

record is used instead. For maximum compatibility, an

implementation should probably set the

clumpSize in the node descriptor to the same

value as the clumpSize in the

HFSPlusForkData when initializing a volume.

Otherwise, it should treat the header records's

clumpSize as reserved.

btreeType- The value stored in this field is of type

BTreeTypes:

enum BTreeTypes{

kHFSBTreeType = 0, // control file

kUserBTreeType = 128, // user btree type starts from 128

kReservedBTreeType = 255

};

|

This field must be equal to kHFSBTreeType

for the catalog, extents, and attributes B-trees. This field

must be equal to kUserBTreeType for the hot file B-tree. Historically, values of

1 to 127 and kReservedBTreeType were used in

B-trees used by system software in Mac OS 9 and earlier.

keyCompareType- For HFSX volumes, this field in the

catalog B-tree header defines the ordering of the keys (whether

the volume is case-sensitive or case-insensitive). In all

other cases, an implementation must treat this as a

reserved field.

| Constant name | Value | Meaning |

|---|

kHFSCaseFolding | 0xCF | Case folding (case-insensitive) |

kHFSBinaryCompare | 0xBC | Binary compare (case-sensitive) |

attributes- A set of bits used to describe various attributes of

the B-tree. The meaning of these bits is given below.

reserved3- An implementation must treat this as a

reserved field.

The following constants define the various bits that may

be set in the attributes field of the header

record.

enum {

kBTBadCloseMask = 0x00000001,

kBTBigKeysMask = 0x00000002,

kBTVariableIndexKeysMask = 0x00000004

};

|

The bits have the following meaning:

kBTBadCloseMask- This bit indicates that the B-tree was not closed

properly and should be checked for consistency. This bit

is not used for HFS Plus B-trees. An implementation must

treat this as

reserved.

kBTBigKeysMask- If this bit is set, the

keyLength field

of the keys in index and leaf nodes is

UInt16; otherwise, it is a

UInt8. This bit must be set for all HFS Plus

B-trees.

kBTVariableIndexKeysMask- If this bit is set, the keys in index nodes occupy

the number of bytes indicated by their

keyLength field; otherwise, the keys in

index nodes always occupy maxKeyLength

bytes. This bit must be set for the HFS Plus Catalog

B-tree, and cleared for the HFS Plus Extents B-tree.

Bits not specified here must be treated as

reserved.

User Data Record

The second record in a header node is always 128 bytes long.

It provides a small space to store information associated with

a B-tree.

In the HFS Plus catalog, extents, and attributes B-trees, this record is

unused and reserved. In

the HFS Plus hot file B-tree, this

record contains general information about the hot file

recording process.

Map Record

The remaining space in the header node is occupied by a

third record, the map record. It is a bitmap that

indicates which nodes in the B-tree are used and which are

free. The bits are interpreted in the same way as the bits

in the allocation file.

All tolled, the node descriptor, header record, reserved

record, and record offsets occupy 256 bytes of the header

node. So the size of the map record (in bytes) is

nodeSize minus 256. If there are more nodes in

the B-tree than can be represented by the map record in the

header node, map nodes are used to store additional

allocation data.

Map Nodes

If the map record of the header node is not large enough

to represent all of the nodes in the B-tree, map

nodes are used to store the remaining allocation data.

In this case, the fLink field of the header

node's node descriptor contains the node number of the first

map node.

A map node consists of the node descriptor and a single

map record. The map record is a continuation of the map

record contained in the header node. The size of the map

record is the size of the node, minus the size of the node

descriptor (14 bytes), minus the size of two offsets (4

bytes), minus two bytes of free space. That is, the size of

the map record is the size of the node minus 20 bytes; this

keeps the length of the map record an even multiple of 4

bytes. Note that the start of the map record is not

aligned to a 4-byte boundary: it starts immediately after

the node descriptor (at an offset of 14 bytes).

The B-tree uses as many map nodes as needed to provide

allocation data for all of the nodes in the B-tree. The map

nodes are chained through the fLink fields of

their node descriptors, starting with the header node. The

fLink field of the last map node's node

descriptor is zero. The bLink field is not used

for map nodes and must be set to zero for all map nodes.

|

Note:

Not using the bLink field is

consistent with the HFS volume format, but not

really consistent with the overall design.

|

Keyed Records

The records in index and leaf nodes share a common

structure. They contain a keyLength, followed

by the key itself, followed by the record data.

The first part of the record, keyLength, is

either a UInt8 or a UInt16,

depending on the attributes field in the

B-tree's header record. If the kBTBigKeysMask

bit is set in attributes, the

keyLength is a UInt16; otherwise,

it's a UInt8. The length of the key, as stored

in this field, does not include the size of the

keyLength field itself.

|

IMPORTANT:

All HFS Plus B-trees use a UInt16 for

their key length.

|

Immediately following the keyLength is the

key itself. The length of the key is determined by the node

type and the B-tree attributes. In leaf nodes, the length is

always determined by keyLength. In index nodes,

the length depends on the value of the

kBTVariableIndexKeysMask bit in the B-tree

attributes in the header record.

If the bit is clear, the key occupies a constant number of

bytes, determined by the maxKeyLength field of

the B-tree header record. If the bit is set, the key length

is determined by the keyLength field of the

keyed record.

Following the key is the record's data. The format of

this data depends on the node type, as explained in the next

two sections. However, the data is always aligned on a

two-byte boundary and occupies an even number of bytes. To

meet the first alignment requirement, a pad byte must be

inserted between the key and the data if the size of the

keyLength field plus the size of the key is

odd. To meet the second alignment requirement, a pad byte

must be added after the data if the data size is odd.

Index Nodes

The records in an index node are called pointer

records. They contain a keyLength, a key,

and a node number, expressed a UInt32. The node

whose number is in a pointer record is called a child

node of the index node. An index node has two or more

children, depending on the size of the node and the size of

the keys in the node.

|

Note:

A root node does not need to exist (if the tree is

empty). And even if one does exist, it need not

be an index node (i.e., it could be a leaf node

if all the records fit in a single node).

|

Leaf Nodes

The bottom level of a B-tree is occupied exclusively by

leaf nodes, which contain data records instead

of pointer records. The data records contain a

keyLength, a key, and the data associated with

that key. The data may be of variable length.

In an HFS Plus B-tree, the data in the data record is the

HFS Plus volume structure (such as a

CatalogRecord, ExtentRecord, or

AttributeRecord) associated with the key.

Searching for

Keyed Records

A B-tree is highly structured to allow for efficient

searching, insertion, and removal. This structure primarily

affects the keyed records (pointer records and data records)

and the nodes in which they are stored (index nodes and leaf

nodes). The following are the ordering requirements for

index and leaf nodes:

- Keyed records must be placed in a node such that