Core Audio Programming Interfaces

Core Audio is a comprehensive set of services for handling all audio tasks in Mac OS X, and as such it contains many constituent parts. This chapter describes the various programming interfaces of Core Audio.

For the purposes of this document, an API refers to a programming interface defined by a single header file, while a service is an interface defined by several header files.

For a complete list of Core Audio frameworks and the headers they contain, see “Core Audio Frameworks.”

In this section:

Audio Unit Services

Audio Processing Graph API

Audio File and Converter Services

Hardware Abstraction Layer (HAL) Services

Music Player API

Core MIDI Services and MIDI Server Services

Core Audio Clock API

OpenAL (Open Audio Library)

System Sound API

Audio Unit Services

Audio Unit Services allows you to create and manipulate audio units. This interface consists of the functions, data types, and constants found in the following header files in AudioUnit.framework and AudioToolbox.framework:

AudioUnit.h

AUComponent.h

AudioOutputUnit.h

AudioUnitParameters.h

AudioUnitProperties.h

AudioUnitCarbonView.h

AUCocoaUIView.h

MusicDevice.h

AudioUnitUtilities.h (in AudioToolbox.framework)

Audio units are plug-ins, specifically Component Manager components, for handling or generating audio signals. Multiple instances of the same audio unit can appear in the same host application. They can appear virtually anywhere in an audio signal chain.

An audio unit must support the noninterleaved 32-bit floating-point linear PCM format to ensure compatibility with units from other vendors. It may also support other linear PCM variants. Currently audio units do not support audio formats other than linear PCM. To convert audio data of a different format to linear PCM, you can use an audio converter (see “Audio Converters and Codecs”).

Note: Audio File and Converter Services uses Component Manager components to handle custom file formats or data conversions. However, these components are not audio units.

Host applications must use Component Manager calls to discover and load audio units. Each audio unit is uniquely identified by a combination of the Component Manager type, subtype, and manufacturer’s code. The type indicates the general purpose of the unit (effect unit, generator unit, and so on). The subtype is an arbitrary value that uniquely identifies an audio unit of a given type by a particular manufacturer. For example, if your company supplies several different effect units, each must have a distinct subtype to distinguish them from each other. Apple defines the standard audio unit types, but you are free to create any subtypes you wish.
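Component Manager codes are conventionally written as four-character codes packed into 32-bit integers. The following Python sketch is a conceptual model, not a Component Manager call; it shows how the type, subtype, and manufacturer triple acts as a unique key. The names `fourcc` and `AudioUnitID` are hypothetical, and the subtype and manufacturer codes (`chr1`, `flg1`, `Acme`) are made up for illustration. Only `aufx`, the effect unit type, is taken from the Core Audio headers.

```python
from typing import NamedTuple


def fourcc(code: str) -> int:
    """Pack a four-character ASCII code into a big-endian 32-bit integer."""
    data = code.encode("ascii")
    if len(data) != 4:
        raise ValueError("a four-character code needs exactly 4 ASCII characters")
    return int.from_bytes(data, "big")


class AudioUnitID(NamedTuple):
    """Hypothetical stand-in for the (type, subtype, manufacturer) triple."""
    type: int
    subtype: int
    manufacturer: int


# Two effect units from the same (made-up) vendor differ only in subtype.
chorus = AudioUnitID(fourcc("aufx"), fourcc("chr1"), fourcc("Acme"))
flanger = AudioUnitID(fourcc("aufx"), fourcc("flg1"), fourcc("Acme"))

# Because the triple is unique, it can serve directly as a lookup key.
registry = {chorus: "Acme Chorus", flanger: "Acme Flanger"}
```

Because the two units share a type and manufacturer, the subtype alone keeps them apart, which is why each unit from one vendor needs a distinct subtype.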

Audio units describe their capabilities and configuration information using properties. Properties are key-value pairs that describe non-time varying characteristics, such as the number of channels in an audio unit, the audio data stream format it supports, the sampling rate it accepts, and whether or not the unit supports a custom Cocoa view. Each audio unit type has several required properties, as defined by Apple, but you are free to define additional properties based on your unit’s needs. Host applications can use property information to create user interfaces for a unit, but in many cases, more sophisticated audio units supply their own custom user interfaces.

Audio units also contain various parameters, the types of which depend on the capabilities of the audio unit. Parameters typically represent settings that are adjustable in real time, often by the end user. For example, a parametric filter audio unit may have parameters determining the center frequency and the width of the filter response, which may be set using the user interface. An instrument unit, on the other hand, uses parameters to represent the current state of MIDI or event data.

A signal chain composed of audio units typically ends in an output unit. An output unit often interfaces with hardware (the AUHAL is such an output unit, for example), but this is not a requirement. Output units differ from other audio units in that they are the only units that can start and stop data flow independently. Standard audio units rely on a "pull" mechanism to obtain data. Each audio unit registers a callback with its successor in the audio chain. When an output unit starts the data flow (triggered by the host application), its render function calls back to the previous unit in the chain to ask for data, which in turn calls its predecessor, and so on.
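The pull mechanism described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the Audio Unit API: the `Unit` class, `connect_input`, and `render` are hypothetical names, and buffers are plain lists of floats.

```python
from typing import Callable, List, Optional


class Unit:
    """Hypothetical audio unit that renders by pulling from its predecessor."""

    def __init__(self, name: str, process: Callable[[List[float]], List[float]]):
        self.name = name
        self.process = process
        self.source: Optional["Unit"] = None  # predecessor in the chain

    def connect_input(self, source: "Unit") -> None:
        # Models the upstream unit registering its render callback with this unit.
        self.source = source

    def render(self, frames: int, log: List[str]) -> List[float]:
        log.append(self.name)
        # Ask the predecessor for data first; a unit with no predecessor
        # starts from silence.
        if self.source is not None:
            upstream = self.source.render(frames, log)
        else:
            upstream = [0.0] * frames
        return self.process(upstream)


generator = Unit("generator", lambda buf: [1.0 for _ in buf])
gain = Unit("gain", lambda buf: [0.5 * s for s in buf])
output = Unit("output", lambda buf: list(buf))

gain.connect_input(generator)
output.connect_input(gain)

calls: List[str] = []
samples = output.render(4, calls)  # the host starts the pull at the output unit
```

The `calls` list records the order in which units were asked for data: the request travels from the output unit back to the generator, while the audio data flows in the opposite direction.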

Host applications can combine audio units in an audio processing graph to create larger signal processing modules. Combining units in a processing graph automatically creates the callback links to allow data flow through the chain. See “Audio Processing Graph API” for more information.

To monitor changes in the state of an audio unit, applications can register callbacks ("listeners") that are invoked when particular audio unit events occur. For example, an application might want to know when a parameter changes value or when the data flow is interrupted. See Technical Note TN2104: Handling Audio Unit Events for more details.
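As a rough illustration of the listener pattern, the following Python sketch notifies registered callbacks when a parameter value changes. It is a generic observer model, not the Audio Unit event API; `ObservableUnit`, `add_listener`, and `set_parameter` are hypothetical names.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Listener = Callable[[str, float], None]


class ObservableUnit:
    """Hypothetical unit that reports parameter changes to listeners."""

    def __init__(self) -> None:
        self._params: Dict[str, float] = {}
        self._listeners: DefaultDict[str, List[Listener]] = defaultdict(list)

    def add_listener(self, param: str, listener: Listener) -> None:
        self._listeners[param].append(listener)

    def set_parameter(self, param: str, value: float) -> None:
        changed = self._params.get(param) != value
        self._params[param] = value
        if changed:
            # Notify only the listeners registered for this parameter.
            for listener in self._listeners[param]:
                listener(param, value)


seen: List[Tuple[str, float]] = []
unit = ObservableUnit()
unit.add_listener("center_frequency", lambda name, value: seen.append((name, value)))

unit.set_parameter("center_frequency", 440.0)  # listener is invoked
unit.set_parameter("center_frequency", 440.0)  # value unchanged, no notification
unit.set_parameter("width", 2.0)               # no listener registered for this one
```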

The Core Audio SDK (in its AudioUnits folder) provides templates for common audio unit types (for example, effect units and instrument units) along with a C++ framework that implements most of the Component Manager plug-in interface for you.

For more detailed information about building audio units using the SDK, see the Audio Unit Programming Guide.

Audio Processing Graph API

The Audio Processing Graph API lets audio unit host application developers create and manipulate audio processing graphs. The Audio Processing Graph API consists of the functions, data types, and constants defined in the header file AUGraph.h in AudioToolbox.framework.

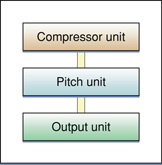

An audio processing graph (sometimes called an AUGraph) defines a collection of audio units strung together to perform a particular task. This arrangement lets you create modules of common processing tasks that you can easily add to and remove from your signal chain. For example, a graph could connect several audio units together to distort a signal, compress it, and then pan it to a particular location in the soundstage. You can end a graph with the AUHAL to transmit the sound to a hardware device (such as an amplifier/speaker). Audio processing graphs are useful for applications that primarily handle signal processing by connecting audio units rather than implementing the processing themselves. Figure 2-1 shows a simple audio processing graph.

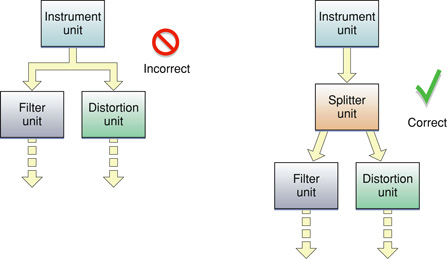

Each audio unit in an audio processing graph is called a node. You make a connection by attaching an output from one node to the input of another. You can't connect an output from one audio unit to more than one audio unit input unless you use a splitter unit, as shown in Figure 2-2. However, an audio unit may contain multiple outputs or inputs, depending on its type.

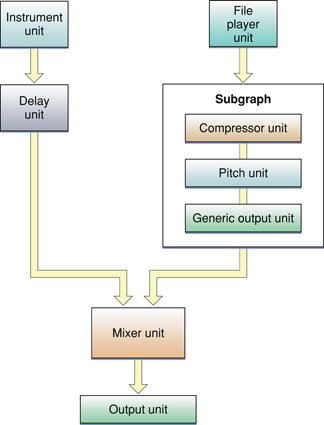
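The connection rule can be modeled with a small Python sketch. It is an illustration of the one-output-to-one-input constraint only, not the AUGraph API; the `Graph` class and its methods are hypothetical, and nodes are identified by plain strings.

```python
from typing import Dict, List, Tuple

Port = Tuple[str, int]  # (node name, bus index)


class Graph:
    """Hypothetical model of nodes and connections in a processing graph."""

    def __init__(self) -> None:
        self.nodes: List[str] = []
        self.connections: Dict[Port, Port] = {}  # source output -> destination input

    def add_node(self, name: str) -> str:
        self.nodes.append(name)
        return name

    def connect(self, src: str, src_output: int, dst: str, dst_input: int) -> None:
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both nodes must be added to the graph first")
        if (src, src_output) in self.connections:
            # One output feeds exactly one input; fan-out needs a splitter node.
            raise ValueError(f"output {src_output} of {src!r} is already connected")
        if (dst, dst_input) in self.connections.values():
            raise ValueError(f"input {dst_input} of {dst!r} is already connected")
        self.connections[(src, src_output)] = (dst, dst_input)


graph = Graph()
for name in ("distortion", "splitter", "compressor", "panner"):
    graph.add_node(name)

graph.connect("distortion", 0, "splitter", 0)
graph.connect("splitter", 0, "compressor", 0)  # the splitter exposes two outputs
graph.connect("splitter", 1, "panner", 0)
```

Attempting `graph.connect("distortion", 0, "compressor", 1)` after these calls raises `ValueError`, because output 0 of the distortion node already feeds the splitter.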

You can use the Audio Processing Graph API to combine subgraphs into a larger graph, where a subgraph appears as a single node in the larger graph, as shown in Figure 2-3.

Each graph or subgraph must end in an output audio unit. In the case of a subgraph, the signal path should end with the generic output unit, which does not connect to any hardware.

Although you can link audio units programmatically without using an audio processing graph, a graph can be modified dynamically, which lets you change the signal path while processing audio data. In addition, because a graph simply represents an interconnection of audio units, you can create and modify a graph without having to instantiate the audio units it references.

Audio File and Converter Services

Audio File and Converter Services lets you read or write audio data, either to a file or to a buffer, and allows you to convert the data between any number of different formats. This service consists of the functions, data types, and constants defined in the following header files in AudioToolbox.framework and AudioUnit.framework:

ExtendedAudioFile.h

AudioFile.h

AudioFormat.h

AudioConverter.h

AudioCodec.h (located in AudioUnit.framework)

CAFFile.h

In many cases, you use the Extended Audio File API, which provides the simplest interface for reading and writing audio data. Files read using this API are automatically uncompressed and/or converted into linear PCM format, which is the native format for audio units. Similarly, you can use one function call to write linear PCM audio data to a file in a compressed or converted format. “Supported Audio File and Data Formats” lists the file formats that Core Audio supports by default. Some formats have restrictions; for example, by default, Core Audio can read, but not write, MP3 files, and an AC-3 file can be decoded only to a stereo data stream (not 5.1 surround).

If you need more control over the file reading, file writing, or data conversion process, you can access the Audio File and Audio Converter APIs directly (in AudioFile.h and AudioConverter.h). When using the Audio File API, the audio data source (as represented by an audio file object) can be either an actual file or a buffer in memory. In addition, if your application reads and writes proprietary file formats, you can handle the format translation using custom Component Manager components that the Audio File API can discover and load. For example, if your file format incorporates DRM, you would want to create a custom component to handle that process.

Audio Converters and Codecs

An audio converter lets you convert audio data from one format to another. Conversions range from simple operations, such as changing the sample rate or interleaving and deinterleaving audio data streams, to more complex operations, such as compressing or decompressing audio. Three types of conversions are possible:

Decoding an audio format (such as AAC (Advanced Audio Coding)) to linear PCM format.

Encoding linear PCM data into a different audio format.

Translating between different variants of linear PCM (for example, converting 16-bit signed integer linear PCM to 32-bit floating point linear PCM).
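The third kind of conversion can be illustrated without any Core Audio calls. The Python sketch below converts interleaved 16-bit signed integer samples to noninterleaved 32-bit floating-point samples, the format that audio units require. The function name is hypothetical, and scaling by 1/32768 is one common convention, assumed here rather than taken from the Audio Converter API.

```python
from array import array
from typing import List


def int16_interleaved_to_float_planar(samples: array, channels: int) -> List[array]:
    """Return one 32-bit float buffer per channel, scaled to [-1.0, 1.0)."""
    if samples.typecode != "h":
        raise TypeError("expected an array of signed 16-bit integers ('h')")
    if channels < 1 or len(samples) % channels != 0:
        raise ValueError("sample count must be a multiple of the channel count")
    scale = 1.0 / 32768.0
    # Channel ch owns every channels-th sample, starting at index ch.
    return [
        array("f", (s * scale for s in samples[ch::channels]))
        for ch in range(channels)
    ]


# Interleaved stereo: L0, R0, L1, R1
interleaved = array("h", [16384, -32768, 0, 32767])
left, right = int16_interleaved_to_float_planar(interleaved, channels=2)
```

After the call, `left` holds the samples at even positions and `right` those at odd positions, each as a separate buffer.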

The Audio Converter API lets you create and manipulate audio converters. You can use the API with many built-in converters to handle most common audio formats. You can instantiate more than one converter at a time, and specify the converter to use when calling a conversion function. Each audio converter has properties that describe characteristics of the converter. For example, a channel mapping property lets you specify how the input channels map to the output channels.

You convert data by calling a conversion function with a particular converter instance, specifying where to find the input data and where to write the output. Most conversions require a callback function to periodically supply input data to the converter.

An audio codec is a Component Manager component loaded by an audio converter to encode or decode a specific audio format. Typically a codec decodes to or encodes from linear PCM. The Audio Codec API provides the Component Manager interface necessary for implementing an audio codec. After you create a custom codec, you can use an audio converter to access it. “Supported Audio File and Data Formats” lists standard Core Audio codecs for translating between compressed formats and linear PCM.

For examples of how to use audio converters, see SimpleSDK/ConvertFile and the AFConvert command-line tool in Services/AudioFileTools in the Core Audio SDK.

File Format Information

In addition to reading, writing, and conversion, Audio File and Converter Services can obtain useful information about file types and the audio data a file contains. For example, you can obtain data such as the following using the Audio File API:

File types that Core Audio can read or write

Data formats that the Audio File API can read or write

The name of a given file type

The file extension(s) for a given file type

The Audio File API also allows you to set or read properties associated with a file. Examples of properties include the data format stored in the file and a CFDictionary containing metadata such as the genre, artist, and copyright information.

Audio Metadata

When handling audio data, you often need specific information about the data so you know how to best process it. The Audio Format API (in AudioFormat.h) allows you to query information stored in various audio structures. For example, you might want to know some of the following characteristics:

Information associated with a particular channel layout (number of channels, channel names, input to output mapping).

Panning matrix information, which you can use for mapping between channel layouts.

Sampling rate, bit rate, and other basic information.

In addition to this information, you can also use the Audio Format API to obtain specific information about the system related to Core Audio, such as the audio codecs that are currently available.

Core Audio File Format

Although technically not a part of the Core Audio programming interface, the Core Audio File format (CAF) is a powerful and flexible file format, defined by Apple, for storing audio data. CAF files have no size restrictions (unlike AIFF, AIFF-C, and WAVE files) and can support a wide range of metadata, such as channel information and text annotations. The CAF format is flexible enough to contain any audio data format, even formats that do not exist yet. For detailed information about the Core Audio File format, see Apple Core Audio Format Specification 1.0.
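To make the chunk-based layout concrete, here is a minimal Python sketch that walks the chunk headers of a CAF byte string. It assumes the layout given in the CAF 1.0 specification as understood here: an 8-byte big-endian file header (`caff`, version, flags) followed by chunks, each with a four-character type and a signed 64-bit size. The 64-bit size field is what removes the size limits of older formats. The function name and the sample bytes are illustrative only; consult the specification before relying on the details.

```python
import struct
from typing import Iterator, Tuple

FILE_HEADER = struct.Struct(">4sHH")  # file type, version, flags (big-endian)
CHUNK_HEADER = struct.Struct(">4sq")  # chunk type, signed 64-bit chunk size


def read_caf_chunks(data: bytes) -> Iterator[Tuple[str, int]]:
    """Yield (chunk type, chunk size) for each chunk header found in data."""
    file_type, _version, _flags = FILE_HEADER.unpack_from(data, 0)
    if file_type != b"caff":
        raise ValueError("not a CAF file")
    offset = FILE_HEADER.size
    while offset + CHUNK_HEADER.size <= len(data):
        chunk_type, size = CHUNK_HEADER.unpack_from(data, offset)
        yield chunk_type.decode("ascii"), size
        if size < 0:
            break  # a size of -1 marks a final data chunk of unknown length
        offset += CHUNK_HEADER.size + size


# Build a tiny in-memory example: a 32-byte audio description chunk for
# 16-bit stereo linear PCM at 44.1 kHz, then a 12-byte data chunk.
desc = struct.pack(">d4sIIIII", 44100.0, b"lpcm", 0, 4, 1, 2, 16)
sample = (
    FILE_HEADER.pack(b"caff", 1, 0)
    + CHUNK_HEADER.pack(b"desc", len(desc)) + desc
    + CHUNK_HEADER.pack(b"data", 12) + bytes(12)
)
chunks = list(read_caf_chunks(sample))
```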

Hardware Abstraction Layer (HAL) Services

Core Audio uses a hardware abstraction layer (HAL) to provide a consistent and predictable interface for applications to deal with hardware. Each piece of hardware is represented by an audio device object (type AudioDevice) in the HAL. Applications can query the audio device object to obtain timing information that can be used for synchronization or to adjust for latency.

HAL Services consists of the functions, data types, and constants defined in the following header files in CoreAudio.framework:

AudioDriverPlugin.h

AudioHardware.h

AudioHardwarePlugin.h

CoreAudioTypes.h (contains data types and constants used by all Core Audio interfaces)

HostTime.h

Most developers will find that Apple’s AUHAL unit serves their hardware interface needs, so they don’t have to interact directly with the HAL Services. The AUHAL is responsible for transmitting audio data, including any required channel mapping, to the specified audio device object. For more information about using the AUHAL and output units, see “Interfacing with Hardware Devices.”

Music Player API

The Music Player API allows you to arrange and play a collection of music tracks. It consists of the functions, data types, and constants defined in the header file MusicPlayer.h in AudioToolbox.framework.

A particular stream of MIDI or event data is a track (represented by the MusicTrack type). Tracks contain a series of time-based events, which can be MIDI data, Core Audio event data, or your own custom event messages. A collection of tracks is a sequence (type MusicSequence). A sequence always contains an additional tempo track, which synchronizes the playback of all tracks in the sequence. Your application can add, delete, or edit tracks in a sequence dynamically. Each sequence must be assigned to a corresponding music player object (type MusicPlayer), which acts as the overall controller for all the tracks in the sequence.
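The relationship between tracks, a sequence, and a music player can be sketched as a small data model in Python. This is not the Music Player API: `Track`, `Sequence`, and `Player` are hypothetical classes, events are plain strings, and a single constant tempo stands in for the tempo track, which in practice can change over time.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Track:
    name: str
    events: List[Tuple[float, str]] = field(default_factory=list)  # (beat, message)


@dataclass
class Sequence:
    tempo_bpm: float  # constant tempo, standing in for the tempo track
    tracks: List[Track] = field(default_factory=list)


class Player:
    """Hypothetical controller that turns a sequence into a playback timeline."""

    def __init__(self, sequence: Sequence) -> None:
        self.sequence = sequence

    def schedule(self) -> List[Tuple[float, str, str]]:
        """Return (seconds, track name, message) for every event, in play order."""
        seconds_per_beat = 60.0 / self.sequence.tempo_bpm
        merged = [
            (beat * seconds_per_beat, track.name, message)
            for track in self.sequence.tracks
            for beat, message in track.events
        ]
        return sorted(merged)


piano = Track("piano", [(0.0, "note C4"), (2.0, "note E4")])
drums = Track("drums", [(1.0, "kick")])
player = Player(Sequence(tempo_bpm=120.0, tracks=[piano, drums]))
timeline = player.schedule()
```

The shared tempo is what keeps the two tracks aligned: every event's beat position is converted to seconds with the same factor before the events are merged.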

A track is analogous to sheet music for an instrument, indicating which notes to play and for how long. A sequence is similar to a musical score, which contains notes for multiple instruments. Instrument units or external MIDI devices represent the musical instruments, while the music player is similar to the conductor who keeps all the musicians coordinated.

Track data played by a music player can be sent to an audio processing graph, an external MIDI device, or a combination of the two. The audio processing graph receives the track data through one or more instrument units, which convert the event (or MIDI) data into actual audio signals. The music player automatically communicates with the graph's output audio unit or Core MIDI to ensure that the audio output is properly synchronized.

Track data does not have to represent musical information. For example, special Core Audio events can represent changes in audio unit parameter values. A track assigned to a panner audio unit might send parameter events to alter the position of a sound source in the soundstage over time. Tracks can also contain proprietary user events that trigger an application-defined callback.

For more information about using the Music Player API to play MIDI data, see “Handling MIDI Data.”

Core MIDI Services and MIDI Server Services

Core Audio uses Core MIDI Services for MIDI support. These services consist of the functions, data types, and constants defined in the following header files in CoreMIDI.framework:

MIDIServices.h

MIDISetup.h

MIDIThruConnection.h

MIDIDriver.h

Core MIDI Services defines an interface that applications and audio units can use to communicate with MIDI devices. It uses a number of abstractions that allow an application to interact with a MIDI network.

A MIDI endpoint (defined by an opaque type MIDIEndpointRef) represents a source or destination for a standard 16-channel MIDI data stream, and it is the primary conduit for interacting with Core Audio services. For example, you can associate endpoints with tracks used by the Music Player API, allowing you to record or play back MIDI data. A MIDI endpoint is a logical representation of a standard MIDI cable connection. MIDI endpoints do not necessarily have to correspond to a physical device, however; an application can set itself up as a virtual source or destination to send or receive MIDI data.

MIDI drivers often combine multiple endpoints into logical groups, called MIDI entities (MIDIEntityRef). For example, it would be reasonable to group a MIDI-in endpoint and a MIDI-out endpoint as a MIDI entity, which can then be easily referenced for bidirectional communication with a device or application.

Each physical MIDI device (not a single MIDI connection) is represented by a Core MIDI device object (MIDIDeviceRef). Each device object may contain one or more MIDI entities.
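The containment hierarchy of devices, entities, and endpoints can be summarized in a short Python sketch. The classes below are hypothetical stand-ins for the opaque `MIDIDeviceRef`, `MIDIEntityRef`, and `MIDIEndpointRef` types and do not call Core MIDI; the device and port names are invented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Endpoint:
    name: str
    is_source: bool  # True: MIDI data arrives from it; False: MIDI data is sent to it


@dataclass
class Entity:
    name: str
    endpoints: List[Endpoint] = field(default_factory=list)


@dataclass
class Device:
    name: str
    entities: List[Entity] = field(default_factory=list)

    def sources(self) -> List[Endpoint]:
        return [e for entity in self.entities for e in entity.endpoints if e.is_source]

    def destinations(self) -> List[Endpoint]:
        return [e for entity in self.entities for e in entity.endpoints if not e.is_source]


# One physical device with a single entity that groups a MIDI-in and a MIDI-out.
port = Entity("Port 1", [Endpoint("Port 1 In", True), Endpoint("Port 1 Out", False)])
keyboard = Device("Example Keyboard", [port])
```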

Core MIDI communicates with the MIDI Server, which does the actual job of passing MIDI data between applications and devices. The MIDI Server runs in its own process, independent of any application. Figure 2-4 shows the relationship between Core MIDI and MIDI Server.

In addition to providing an application-agnostic base for MIDI communications, MIDI Server also handles any MIDI thru connections, which allow device-to-device chaining without involving the host application.

If you are a MIDI device manufacturer, you may need to supply a CFPlugin plug-in, packaged in a CFBundle, that lets the MIDI Server interact with the kernel-level I/O Kit drivers. Figure 2-5 shows how Core MIDI and MIDI Server interact with the underlying hardware.

Note: If you create a USB MIDI class-compliant device, you do not have to write your own driver, because Apple’s supplied USB driver will support your hardware.

The drivers for each MIDI device generally exist outside the kernel, running in the MIDI Server process. These drivers interact with the default I/O Kit drivers for the underlying protocols (such as USB and FireWire). The MIDI drivers are responsible for presenting the raw device data to Core MIDI in a usable format. Core MIDI then passes the MIDI information to your application through the designated MIDI endpoints, which are the abstract representations of the MIDI ports on the external devices.

MIDI devices on PCI cards, however, cannot be controlled entirely through a user-space driver. For PCI cards, you must create a kernel extension to provide a custom user client. This client must either control the PCI device itself (providing a simple message queue for the user-space driver) or map the address range of the PCI device into the address space of the MIDI Server when requested to do so by the user-space driver. Doing so allows the user-space driver to control the PCI device directly.

For an example of implementing a user-space MIDI driver, see MIDI/SampleUSBDriver in the Core Audio SDK.

Core Audio Clock API

The Core Audio Clock API, as defined in the header file CoreAudioClock.h in AudioToolbox.framework, provides a reference clock that you can use to synchronize applications or devices. This clock may be a standalone timing source, or it can be synchronized with an external trigger, such as a MIDI beat clock or MIDI time code. You can start and stop the clock yourself, or you can set the clock to activate or deactivate in response to certain events.

You can obtain the generated clock time in a number of formats, including seconds, beats, SMPTE time, audio sample time, and bar-beat time. Bar-beat time describes the time in a manner that is easy to display onscreen in terms of musical bars, beats, and subbeats. The Core Audio Clock API also contains utility functions that convert one time format to another and that display bar-beat or SMPTE times. Figure 2-6 shows the interrelationship between various Core Audio Clock formats.

The hardware times represent absolute time values from either the host time (the system clock) or an audio time obtained from an external audio device (represented by an AudioDevice object in the HAL). You determine the current host time by calling mach_absolute_time or UpTime. The audio time is the audio device’s current time represented by a sample number. The sample number’s rate of change depends on the audio device’s sampling rate.

The media times represent common timing methods for audio data. The canonical representation is in seconds, expressed as a double-precision floating point value. However, you can use a tempo map to translate seconds into musical bar-beat time, or apply a SMPTE offset to convert seconds to SMPTE seconds.

Media times do not have to correspond to real time. For example, an audio file that is 10 seconds long will take only 5 seconds to play if you double the playback rate. The knob in Figure 2-6 indicates that you can adjust the correlation between the absolute (“real”) times and the media-based times. For example, bar-beat notation indicates the rhythm of a musical line and what notes to play when, but does not indicate how long it takes to play. To determine that, you need to know the playback rate (say, in beats per second). Similarly, the correspondence of SMPTE time to actual time depends on such factors as the frame rate and whether frames are dropped or not.
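The arithmetic behind these time formats is simple enough to sketch in Python. The functions below are illustrative helpers with hypothetical names, not the Core Audio Clock API. They assume a constant tempo rather than a full tempo map, and a non-drop-frame SMPTE format with an integer frame rate.

```python
def sample_time_to_seconds(sample_number: int, sample_rate: float) -> float:
    """Audio time: the sample number advances at the device's sampling rate."""
    return sample_number / sample_rate


def real_duration(media_seconds: float, playback_rate: float) -> float:
    """Real time needed to play a span of media time at the given rate."""
    return media_seconds / playback_rate


def seconds_to_beats(seconds: float, beats_per_minute: float) -> float:
    """Media seconds to beats, assuming one constant tempo."""
    return seconds * beats_per_minute / 60.0


def seconds_to_smpte(seconds: float, frames_per_second: int,
                     offset_seconds: float = 0.0) -> str:
    """Format media seconds as HH:MM:SS:FF (non-drop-frame, integer frame rate)."""
    total_frames = round((seconds + offset_seconds) * frames_per_second)
    frames = total_frames % frames_per_second
    total_seconds = total_frames // frames_per_second
    hours = total_seconds // 3600
    minutes = total_seconds // 60 % 60
    return f"{hours:02d}:{minutes:02d}:{total_seconds % 60:02d}:{frames:02d}"
```

For example, `real_duration(10.0, 2.0)` reproduces the case in the text: ten seconds of media time take five seconds of real time at double the playback rate.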

OpenAL (Open Audio Library)

Core Audio includes a Mac OS X implementation of the open-source OpenAL specification. OpenAL is a cross-platform API used to position and manipulate sounds in a simulated three-dimensional space. For example, you can use OpenAL for positioning and moving sound effects in a game, or creating a sound space for multichannel audio. In addition to simply positioning sound around a listener, you can also add distancing effects through a medium (such as fog or water), Doppler effects, and so on.
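As an illustration of a distancing effect, the Python sketch below computes the gain of a source from its distance to the listener. It follows the inverse distance clamped model as described in the OpenAL 1.1 specification, to the best of our understanding. It is not an OpenAL call, the function name is hypothetical, and the default values are assumptions chosen for the example.

```python
def inverse_distance_clamped_gain(distance: float,
                                  reference_distance: float = 1.0,
                                  rolloff_factor: float = 1.0,
                                  max_distance: float = float("inf")) -> float:
    """Gain multiplier for a source at the given distance from the listener."""
    # Distances below the reference or above the maximum are clamped first.
    clamped = min(max(distance, reference_distance), max_distance)
    return reference_distance / (
        reference_distance + rolloff_factor * (clamped - reference_distance)
    )


near = inverse_distance_clamped_gain(0.25)  # closer than the reference distance
far = inverse_distance_clamped_gain(2.0)    # twice the reference distance
```

With the defaults, a source at the reference distance or closer plays at full gain, and the gain falls off as the source moves away.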

The OpenAL coding conventions and syntax were designed to mimic OpenGL (only controlling sound rather than light), so OpenGL programmers should find many concepts familiar.

For an example of using OpenAL in Core Audio, see Services/OpenALExample in the Core Audio SDK. For more details about OpenAL, including programming information and API references, see openal.org.

System Sound API

The System Sound API provides a simple way to play standard system sounds in your application. Its header, SystemSound.h, is the only Core Audio header located in a non-Core Audio framework. It is located in the CoreServices/OSServices framework.

For more details about using the System Sound API, see Technical Note TN2102: The System Sound APIs for Mac OS X v10.2, 10.3, and Later.

© 2007 Apple Inc. All Rights Reserved. (Last updated: 2007-01-08)