Media Data Atom Types

QuickTime uses atoms of different types to store different types of media data—video media atoms for video data, sound media atoms for audio data, and so on. This chapter discusses in detail each of these different media data atom types.

If you are a QuickTime application or tool developer, you’ll want to read this chapter in order to understand the fundamentals of how QuickTime uses atoms for storage of different media data. For the latest updates and postings, be sure to see Apple's QuickTime developer website.

This chapter is divided into the following major sections:

“Video Media” describes video media, which is used to store compressed and uncompressed image data in QuickTime movies.

“Sound Media” discusses sound media used to store compressed and uncompressed audio data in QuickTime movies.

“Timecode Media” describes time code media used to store time code data in QuickTime movies.

“Text Media” discusses text media used to store text data in QuickTime movies.

“Music Media” discusses music media used to store note-based audio data, such as MIDI data, in QuickTime movies.

“MPEG-1 Media” discusses MPEG-1 media used to store MPEG-1 video and MPEG-1 multiplexed audio/video streams in QuickTime movies.

“Sprite Media” discusses sprite media used to store character-based animation data in QuickTime movies.

“Tween Media” discusses tween media used to store pairs of values to be interpolated between in QuickTime movies.

“Modifier Tracks” discusses the capabilities of modifier tracks.

“Track References” describes a feature of QuickTime that allows you to relate a movie’s tracks to one another.

“3D Media” discusses briefly how QuickTime movies store 3D image data in a base media.

“Hint Media” describes the additions to the QuickTime file format for streaming QuickTime movies over the Internet.

“VR Media” describes the QuickTime VR world and node information atom containers, as well as cubic panoramas, which are new to QuickTime VR 3.0.

“Movie Media” discusses movie media which is used to encapsulate embedded movies within QuickTime movies.

Video Media

Video media is used to store compressed and uncompressed image data in QuickTime movies. It has a media type of 'vide'.

Video Sample Description

The video sample description contains information that defines how to interpret video media data. A video sample description begins with the four fields described in “General Structure of a Sample Description.”

The data format field of a video sample description indicates the type of compression that was used to compress the image data, or the color space representation of uncompressed video data. Table 3-1 shows some of the formats supported. The list is not exhaustive, and is subject to addition.

| Compression type | Description |
|---|---|
| | Cinepak |
| | JPEG |
| | Graphics |
| | Animation |
| | Apple video |
| | Kodak Photo CD |
| | Portable Network Graphics |
| | Motion-JPEG (format A) |
| | Motion-JPEG (format B) |
| | Sorenson video, version 1 |
| | Sorenson video 3 |
| 'mp4v' | MPEG-4 video |
| | NTSC DV-25 video |
| | PAL DV-25 video |
| | Compuserve Graphics Interchange Format |
| | H.263 video |
| | Tagged Image File Format |
| | Uncompressed RGB |
| | Uncompressed Y′CbCr, 8-bit-per-component 4:2:2 |
| | Uncompressed Y′CbCr, 8-bit-per-component 4:2:2 |
| | Uncompressed Y′CbCr, 8-bit-per-component 4:4:4 |
| | Uncompressed Y′CbCr, 8-bit-per-component 4:4:4:4 |
| | Uncompressed Y′CbCr, 10, 12, 14, or 16-bit-per-component 4:2:2 |
| | Uncompressed Y′CbCr, 10-bit-per-component 4:4:4 |
| | Uncompressed Y′CbCr, 10-bit-per-component 4:2:2 |

The video media sample description adds the following fields to the general sample description.

- Version

A 16-bit integer indicating the version number of the compressed data. This is set to 0, unless a compressor has changed its data format.

- Revision level

A 16-bit integer that must be set to 0.

- Vendor

A 32-bit integer that specifies the developer of the compressor that generated the compressed data. Often this field contains 'appl' to indicate Apple Computer, Inc.

- Temporal quality

A 32-bit integer containing a value from 0 to 1023 indicating the degree of temporal compression.

- Spatial quality

A 32-bit integer containing a value from 0 to 1024 indicating the degree of spatial compression.

- Width

A 16-bit integer that specifies the width of the source image in pixels.

- Height

A 16-bit integer that specifies the height of the source image in pixels.

- Horizontal resolution

A 32-bit fixed-point number containing the horizontal resolution of the image in pixels per inch.

- Vertical resolution

A 32-bit fixed-point number containing the vertical resolution of the image in pixels per inch.

- Data size

A 32-bit integer that must be set to 0.

- Frame count

A 16-bit integer that indicates how many frames of compressed data are stored in each sample. Usually set to 1.

- Compressor name

A 32-byte Pascal string containing the name of the compressor that created the image, such as "jpeg".

- Depth

A 16-bit integer that indicates the pixel depth of the compressed image. Values of 1, 2, 4, 8, 16, 24, and 32 indicate the depth of color images. The value 32 should be used only if the image contains an alpha channel. Values of 34, 36, and 40 indicate 2-, 4-, and 8-bit grayscale, respectively, for grayscale images.

- Color table ID

A 16-bit integer that identifies which color table to use. If this field is set to –1, the default color table should be used for the specified depth. For all depths below 16 bits per pixel, this indicates a standard Macintosh color table for the specified depth. Depths of 16, 24, and 32 have no color table.

If the color table ID is set to 0, a color table is contained within the sample description itself. The color table immediately follows the color table ID field in the sample description. See “Color Table Atoms” for a complete description of a color table.

Video Sample Description Extensions

Video sample descriptions can be extended by appending other atoms. These atoms are placed after the color table, if one is present. These extensions to the sample description may contain display hints for the decompressor or may simply carry additional information associated with the images. Table 3-2 lists the currently defined extensions to video sample descriptions.

| Extension type | Description |
|---|---|
| 'gama' | A 32-bit fixed-point number indicating the gamma level at which the image was captured. The decompressor can use this value to gamma-correct at display time. |
| | Two 8-bit integers that define field handling. This information is used by applications to modify decompressed image data or by decompressor components to determine field display order. This extension is mandatory for all uncompressed Y′CbCr data formats. The first byte specifies the field count, and may be set to 1 or 2. A value of 1 is used for progressive-scan images; a value of 2 indicates interlaced images. When the field count is 2, the second byte specifies the field ordering: which field contains the topmost scan-line, which field should be displayed earliest, and which is stored first in each sample. Each sample consists of two distinct compressed images, each coding one field: the field with the topmost scan-line, T, and the other field, B. The following defines the permitted variants: 0 – There is only one field. 1 – T is displayed earliest, T is stored first in the file. 6 – B is displayed earliest, B is stored first in the file. 9 – B is displayed earliest, T is stored first in the file. 14 – T is displayed earliest, B is stored first in the file. |
| | The default quantization table for a Motion-JPEG data stream. |
| | The default Huffman table for a Motion-JPEG data stream. |
| 'esds' | An MPEG-4 elementary stream descriptor atom. This extension is required for MPEG-4 video. For details, see “MPEG-4 Elementary Stream Descriptor ('esds') Atom.” |
| 'pasp' | Pixel aspect ratio. This extension is mandatory for video formats that use non-square pixels. For details, see “Pixel Aspect Ratio ('pasp').” |
| 'colr' | Color parameters: an image description extension required for all uncompressed Y′CbCr video types. For details, see “Color Parameter Atoms ('colr').” |
| 'clap' | Clean aperture: spatial relationship of Y′CbCr components relative to a canonical image center. This allows accurate alignment for compositing of video images captured using different systems. This is a mandatory extension for all uncompressed Y′CbCr data formats. For details, see “Clean Aperture ('clap').” |

Pixel Aspect Ratio ('pasp')

This extension specifies the height-to-width ratio of pixels found in the video sample. This is a required extension for MPEG-4 and uncompressed Y′CbCr video formats when non-square pixels are used. It is optional when square pixels are used.

- Size

An unsigned 32-bit integer holding the size of the pixel aspect ratio atom.

- Type

An unsigned 32-bit field containing the four-character code 'pasp'.

- hSpacing

An unsigned 32-bit integer specifying the horizontal spacing of pixels, such as luma sampling instants for Y′CbCr or YUV video.

- vSpacing

An unsigned 32-bit integer specifying the vertical spacing of pixels, such as video picture lines.

The units of measure for the hSpacing and vSpacing parameters are not specified, as only the ratio matters. The units of measure for height and width must be the same, however.

| Description | hSpacing | vSpacing |
|---|---|---|
| 4:3 square pixels (composite NTSC or PAL) | 1 | 1 |
| 4:3 non-square 525 (NTSC) | 10 | 11 |
| 4:3 non-square 625 (PAL) | 59 | 54 |
| 16:9 analog (composite NTSC or PAL) | 4 | 3 |
| 16:9 digital 525 (NTSC) | 40 | 33 |
| 16:9 digital 625 (PAL) | 118 | 81 |
| 1920x1035 HDTV (per SMPTE 260M-1992) | 113 | 118 |
| 1920x1035 HDTV (per SMPTE RP 187-1995) | 1018 | 1062 |
| 1920x1080 HDTV or 1280x720 HDTV | 1 | 1 |
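As an illustration of how the four 'pasp' fields fit together, here is a minimal sketch that writes and reads the 16-byte atom. It assumes big-endian storage. The helper `display_width`, which scales a stored width by hSpacing/vSpacing to get a width in square pixels, is a hypothetical convenience reflecting the conventional use of the ratio, not something defined by this document.

```python
import struct
from fractions import Fraction

def build_pasp(h_spacing, v_spacing):
    """Serialize a 'pasp' atom: size, type, hSpacing, vSpacing."""
    return struct.pack(">I4sII", 16, b"pasp", h_spacing, v_spacing)

def parse_pasp(atom):
    """Return hSpacing/vSpacing as an exact fraction."""
    size, kind, h_spacing, v_spacing = struct.unpack_from(">I4sII", atom)
    if kind != b"pasp" or size != 16:
        raise ValueError("not a pixel aspect ratio atom")
    return Fraction(h_spacing, v_spacing)

def display_width(stored_width, ratio):
    """Hypothetical helper: stored width rescaled to square pixels."""
    return round(stored_width * ratio)
```

Because only the ratio matters, keeping it as a fraction avoids rounding until the final pixel count is needed.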

MPEG-4 Elementary Stream Descriptor Atom ('esds')

This atom contains an MPEG-4 elementary stream descriptor. It is a required extension to the video sample description for MPEG-4 video, and it appears in video sample descriptions only when the codec type is 'mp4v'.

Note: The elementary stream descriptor which this atom contains is defined in the MPEG-4 specification ISO/IEC FDIS 14496-1.

- Size

An unsigned 32-bit integer holding the size of the elementary stream descriptor atom.

- Type

An unsigned 32-bit field containing the four-character code 'esds'.

- Version

An unsigned 8-bit integer set to zero.

- Flags

A 24-bit field reserved for flags, currently set to zero.

- Elementary Stream Descriptor

An elementary stream descriptor for MPEG-4 video, as defined in the MPEG-4 specification ISO/IEC 14496-1 and subject to the restrictions for storage in MPEG-4 files specified in ISO/IEC 14496-14.
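The fixed fields above can be separated from the descriptor payload as in the following sketch. It assumes big-endian storage and treats the descriptor as opaque bytes; decoding the descriptor itself is defined by ISO/IEC 14496-1 and is not attempted here. The function name is illustrative.

```python
import struct

def split_esds(atom):
    """Return (version, flags, descriptor_bytes) from an 'esds' atom."""
    size, kind, version_flags = struct.unpack_from(">I4sI", atom)
    if kind != b"esds":
        raise ValueError("not an elementary stream descriptor atom")
    version = version_flags >> 24       # top 8 bits
    flags = version_flags & 0xFFFFFF    # low 24 bits
    return version, flags, atom[12:size]
```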

Color Parameter Atoms ('colr')

This atom is a required extension for uncompressed Y′CbCr data formats. The 'colr' extension is used to map the numerical values of pixels in the file to a common representation of color in which images can be correctly compared, combined, and displayed. The common representation is the CIE XYZ tristimulus values (defined in Publication CIE No. 15.2).

Use of a common representation also allows you to correctly map between Y′CbCr and RGB color spaces and to correctly compensate for gamma on different systems.

The 'colr' extension supersedes the previously defined 'gama' Image Description extension. Writers of QuickTime files should never write both into an Image Description, and readers of QuickTime files should ignore 'gama' if 'colr' is present.

The 'colr' extension is designed to work for multiple imaging applications such as video and print. Each application, driven by its own set of historical and economic realities, has its own set of parameters needed to map from pixel values to CIE XYZ.

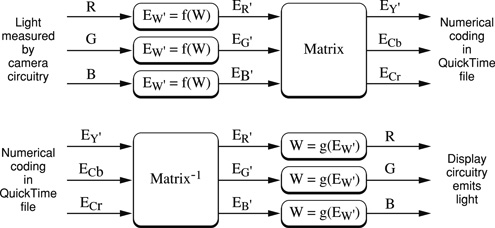

The CIE XYZ representation is mapped to various stored Y′CbCr formats using a common set of transfer functions and matrixes. The transfer function coefficients and matrix values are stored as indexes into a table of canonical references. This provides support for multiple video systems while limiting the scope of possible values to a set of recognized standards.

The 'colr' atom contains four fields: a color parameter type and three indexes. The indexes are to a table of primaries, a table of transfer function coefficients, and a table of matrixes.

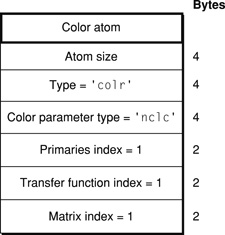

The table of matrixes specifies the matrix used during the translation, as shown in Figure 3-2.

- Color parameter type

A 32-bit field containing a four-character code for the color parameter type. The currently defined types are 'nclc' for video and 'prof' for print. The color parameter type distinguishes between print and video mappings.

If the color parameter type is 'prof', then this field is followed by an ICC profile. This is the color model used by Apple’s ColorSync. The contents of this type are not defined in this document. Contact Apple Computer for more information on the 'prof' type 'colr' extension.

If the color parameter type is 'nclc', then this atom contains the following fields:

- Primaries index

A 16-bit unsigned integer containing an index into a table specifying the CIE 1931 xy chromaticity coordinates of the white point and the red, green, and blue primaries. The table of primaries specifies the white point and the red, green, and blue primary color points for a video system.

- Transfer function index

A 16-bit unsigned integer containing an index into a table specifying the nonlinear transfer function coefficients used to translate between RGB color space values and Y′CbCr values. The table of transfer function coefficients specifies the nonlinear function coefficients used to translate between the stored Y′CbCr values and a video capture or display system, as shown in Figure 3-2.

- Matrix index

A 16-bit unsigned integer containing an index into a table specifying the transformation matrix coefficients used to translate between RGB color space values and Y′CbCr values. The table of matrixes specifies the matrix used during the translation, as shown in Figure 3-2.
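The following sketch reads the fields just described. It assumes the color parameter type is preceded by the usual 32-bit size and 32-bit type atom header, as with the other extensions in this chapter, and that values are big-endian. The function name and result keys are illustrative.

```python
import struct

def parse_colr(atom):
    """Decode a 'colr' extension into a small dictionary."""
    size, kind, param_type = struct.unpack_from(">I4s4s", atom)
    if kind != b"colr":
        raise ValueError("not a color parameter atom")
    if param_type == b"nclc":
        primaries, transfer, matrix = struct.unpack_from(">HHH", atom, 12)
        return {"type": "nclc", "primaries": primaries,
                "transfer_function": transfer, "matrix": matrix}
    if param_type == b"prof":
        # The ICC profile is returned as opaque bytes.
        return {"type": "prof", "icc_profile": atom[12:size]}
    raise ValueError("unknown color parameter type")
```

A reader that finds both 'gama' and 'colr' in the same image description would use the result of this function and ignore 'gama'.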

The transfer function and matrix are used as shown in the following diagram.

The Y′CbCr values stored in a file are normalized to a range of [0,1] for Y′ and [-0.5, +0.5] for Cb and Cr when performing these operations. The normalized values are then scaled to the proper bit depth for a particular Y′CbCr format before storage in the file.

Note: The symbols used for these values are not intended to correspond to the use of these same symbols in other standards. In particular, "E" should not be interpreted as voltage.

These normalized values can be mapped onto the stored integer values of a particular compression type's Y′, Cb, and Cr components using two different schemes, which we will call Scheme A and Scheme B.

Warning: Other, slightly different encoding/mapping schemes exist in the video industry, and data encoded using these schemes must be converted to one of the QuickTime schemes defined here.

Scheme A uses "Wide-Range" mapping (full scale) with unsigned Y′ and twos-complement Cb and Cr values.

This maps normalized values to stored values so that, for example, 8-bit unsigned values for Y′ go from 0–255 as the normalized value goes from 0 to 1, and 8-bit signed values for Cb and Cr go from -127 to +127 as the normalized values go from -0.5 to +0.5.

Warning: In specifications such as ITU-R BT.601-4, JFIF 1.02, and SPIFF (Rec. ITU-T T.84), the symbols Cb and Cr are used to describe offset binary integers, not twos-complement signed integers shown here.

Scheme B uses "Video-Range" mapping with unsigned Y′ and offset binary Cb and Cr values.

Note: Scheme B comes from digital video industry specifications such as Rec. ITU-R BT.601-4. All standard digital video tape formats (e.g., SMPTE D-1, SMPTE D-5) and all standard digital video links (e.g., SMPTE 259M-1997 serial digital video) use this scheme. Professional video storage and processing equipment from vendors such as Abekas, Accom, and SGI also use this scheme. MPEG-2, DVC, and many other codecs specify source Y′CbCr pixels using this scheme.

This maps the normalized values to stored values so that, for example, 8-bit unsigned values for Y′ go from 16–235 as the normalized value goes from 0 to 1, and 8-bit unsigned values for Cb and Cr go from 16–240 as the normalized values go from -0.5 to +0.5.

For 10-bit samples, Y′ has a range of 64 to 940 as the normalized value goes from 0 to 1, and Cb and Cr have the range of 64–960 as the normalized values go from –0.5 to +0.5.

Y′ is an unsigned integer. Cb and Cr are offset binary integers.

Certain Y′, Cb, and Cr component values v are reserved as synchronization signals and must not appear in a buffer. For n = 8 bits, these are values 0 and 255. For n = 10 bits, these are values 0, 1, 2, 3, 1020, 1021, 1022, and 1023. The writer of a QuickTime image is responsible for omitting these values. The reader of a QuickTime image may assume that they are not present.

The remaining component values that fall outside the mapping for scheme B (1-15 and 241-254 for n = 8 bits and 4–63 and 961–1019 for n = 10 bits) accommodate occasional filter undershoot and overshoot in image processing. In some applications, these values are used to carry other information (e.g., transparency). The writer of a QuickTime image may use these values and the reader of a QuickTime image must expect these values.
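The two mappings can be sketched as linear scalings that reproduce the 8-bit endpoints quoted above. The exact formulas are an assumption here: the scale factors (2^n − 1 and 2^n − 2 for Scheme A; 219, 224, and offsets 16 and 128 scaled by 2^(n−8) for Scheme B) and the round-half-up rule are chosen to match those endpoints and common practice in ITU-R BT.601, not copied from this document.

```python
import math

def _round_half_up(x):
    return math.floor(x + 0.5)

def scheme_a(e_y, e_cb, e_cr, bits=8):
    """Wide-range mapping: unsigned Y', twos-complement Cb and Cr."""
    full = (1 << bits) - 1
    return (_round_half_up(full * e_y),
            _round_half_up((full - 1) * e_cb),
            _round_half_up((full - 1) * e_cr))

def scheme_b(e_y, e_cb, e_cr, bits=8):
    """Video-range mapping: unsigned Y', offset binary Cb and Cr."""
    scale = 1 << (bits - 8)
    return (_round_half_up(scale * (219 * e_y + 16)),
            _round_half_up(scale * (224 * e_cb + 128)),
            _round_half_up(scale * (224 * e_cr + 128)))
```

Inputs are the normalized values: Y′ in [0, 1] and Cb, Cr in [-0.5, +0.5]. Neither function clamps, so undershoot and overshoot pass through to the caller.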

The following tables show the primary values, transfer functions, and matrixes indicated by the index entries in the 'colr' atom.

The R, G, and B values below are tristimulus values (such as candelas/meter^2), whose relationship to CIE XYZ values can be derived from the primaries and white point specified in the table, using the method described in SMPTE RP 177-1993. In this instance, the R, G, and B values are normalized to the range [0,1].

| Index | Values |
|---|---|
| 0 | Reserved |
| 1 | Recommendation ITU-R BT.709-2, SMPTE 274M-1995, and SMPTE 296M-1997. White x = 0.3127, y = 0.3290 (CIE III. D65); red x = 0.640, y = 0.330; green x = 0.300, y = 0.600; blue x = 0.150, y = 0.060 |
| 2 | Primary values are unknown |
| 3–4 | Reserved |
| 5 | SMPTE RP 145-1993, SMPTE 170M-1994, 293M-1996, 240M-1995, and SMPTE 274M-1995. White x = 0.3127, y = 0.3290 (CIE III. D65); red x = 0.64, y = 0.33; green x = 0.29, y = 0.60; blue x = 0.15, y = 0.06 |
| 6 | ITU-R BT.709-2, SMPTE 274M-1995, and SMPTE 296M-1997. White x = 0.3127, y = 0.3290 (CIE III. D65); red x = 0.630, y = 0.340; green x = 0.310, y = 0.595; blue x = 0.155, y = 0.070 |
| 7–65535 | Reserved |

The transfer functions below are used as shown in Figure 3-2.

| Index | Video Standards |
|---|---|
| 0 | Reserved |
| 1 | Recommendation ITU-R BT.709-2, SMPTE 274M-1995, 296M-1997, 293M-1996, 170M-1994. See below for transfer function equations. |
| 2 | Coefficient values are unknown |
| 3–6 | Reserved |
| 7 | Recommendation SMPTE 240M-1995 and 274M-1995. See below for transfer function equations. |
| 8–65535 | Reserved |

The MPEG-2 sequence display extension transfer_characteristics defines a code 6 whose transfer function is identical to that in code 1. QuickTime writers should map 6 to 1 when converting from transfer_characteristics to transferFunction.

Recommendation ITU-R BT.470-4 specified an "assumed gamma value of the receiver for which the primary signals are pre-corrected" as 2.2 for NTSC and 2.8 for PAL systems. This information is both incomplete and obsolete. Modern 525- and 625-line digital and NTSC/PAL systems use the transfer function with code 1 below.

The matrix values are shown in Table 3-6 and in Figure 3-8, Figure 3-9, and Figure 3-10. These figures show a formula for obtaining the normalized value of Y′ in the range [0,1]. You can derive the formula for normalized values of Cb and Cr as follows:

If the equation for normalized Y′ has the form:

![]()

Then the formulas for normalized Cb and Cr are:

![]()
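For reference, this relationship is conventionally written as follows in standards such as ITU-R BT.601 and BT.709. The symbols are assumptions introduced for this illustration and are not taken from this document: K_r, K_g, and K_b are the luma weights for the selected matrix, and E′ denotes a normalized nonlinear component.

```latex
E'_{Y} = K_r\,E'_{R} + K_g\,E'_{G} + K_b\,E'_{B}, \qquad K_r + K_g + K_b = 1

E'_{Cb} = \frac{0.5}{1 - K_b}\,\bigl(E'_{B} - E'_{Y}\bigr)

E'_{Cr} = \frac{0.5}{1 - K_r}\,\bigl(E'_{R} - E'_{Y}\bigr)
```

The factors 0.5/(1 − K_b) and 0.5/(1 − K_r) scale the color differences so that Cb and Cr span exactly [-0.5, +0.5] when R, G, and B span [0,1].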

| Index | Video Standard |
|---|---|
| 0 | Reserved |
| 1 | Recommendation ITU-R BT.709-2 (1125/60/2:1 only), SMPTE 274M-1995, 296M-1997. See below for matrix values. |
| 2 | Coefficient values are unknown |
| 3–5 | Reserved |
| 6 | Recommendation ITU-R BT.601-4 and BT.470-4 System B and G, SMPTE 170M-1994, 293M-1996. See below for matrix values. |
| 7 | SMPTE 240M-1995, 274M-1995. See below for matrix values. |
| 8–65535 | Reserved |
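A reader might resolve a matrix index to luma weights and apply them as in the sketch below. The weights are the published values from ITU-R BT.709, ITU-R BT.601, and SMPTE 240M as commonly cited; they are supplied here as an assumption rather than taken from this document, and should be checked against the standards. The table name and function name are illustrative.

```python
# (Kr, Kb) luma weights per matrix index; Kg = 1 - Kr - Kb.
LUMA_WEIGHTS = {
    1: (0.2126, 0.0722),  # ITU-R BT.709
    6: (0.299, 0.114),    # ITU-R BT.601
    7: (0.212, 0.087),    # SMPTE 240M
}

def rgb_to_ycbcr(r, g, b, matrix_index):
    """Map normalized nonlinear R, G, B in [0,1] to normalized Y', Cb, Cr."""
    kr, kb = LUMA_WEIGHTS[matrix_index]
    kg = 1.0 - kr - kb
    y = kr * r + kg * g + kb * b
    cb = 0.5 * (b - y) / (1.0 - kb)  # spans [-0.5, +0.5]
    cr = 0.5 * (r - y) / (1.0 - kr)  # spans [-0.5, +0.5]
    return y, cb, cr
```

Indexes outside the table (reserved or unknown) raise `KeyError`, which leaves the fallback policy to the caller.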

Clean Aperture ('clap')

The clean aperture extension defines the relationship between the pixels in a stored image and a canonical rectangular region of a video system from which it was captured or to which it will be displayed. This can be used to correlate pixel locations in two or more images—possibly recorded using different systems—for accurate compositing. This is necessary because different video digitizer devices can digitize different regions of the incoming video signal, causing pixel misalignment between images. In particular, a stored image may contain “edge” data outside the canonical display area for a given system.

The clean aperture is either coincident with the stored image or a subset of the stored image; if it is a subset, it may be centered on the stored image, or it may be offset positively or negatively from the stored image center.

The clean aperture extension contains a width in pixels, a height in picture lines, and a horizontal and vertical offset between the stored image center and a canonical image center for the given video system. The width is typically the width of the canonical clean aperture for a video system divided by the pixel aspect ratio of the stored data. The offsets also take into account any “overscan” in the stored image. The height and width must be positive values, but the offsets may be positive, negative, or zero.

These values are given as ratios of two 32-bit numbers, so that applications can calculate precise values with minimum roundoff error. For whole values, the value should be stored in the numerator field while the denominator field is set to 1.

- Size

A 32-bit unsigned integer containing the size of the 'clap' atom.

- Type

A 32-bit unsigned integer containing the four-character code 'clap'.

- apertureWidth_N (numerator)

A 32-bit signed integer containing either the width of the clean aperture in pixels or the numerator portion of a fractional width.

- apertureWidth_D (denominator)

A 32-bit signed integer containing either the denominator portion of a fractional width or the number 1.

- apertureHeight_N (numerator)

A 32-bit signed integer containing either the height of the clean aperture in picture lines or the numerator portion of a fractional height.

- apertureHeight_D (denominator)

A 32-bit signed integer containing either the denominator portion of a fractional height or the number 1.

- horizOff_N (numerator)

A 32-bit signed integer containing either the horizontal offset of the clean aperture center minus (width–1)/2 or the numerator portion of a fractional offset. This value is typically zero.

- horizOff_D (denominator)

A 32-bit signed integer containing either the denominator portion of the horizontal offset or the number 1.

- vertOff_N (numerator)

A 32-bit signed integer containing either the vertical offset of the clean aperture center minus (height–1)/2 or the numerator portion of a fractional offset. This value is typically zero.

- vertOff_D (denominator)

A 32-bit signed integer containing either the denominator portion of the vertical offset or the number 1.
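The sketch below reads the eight ratio fields as exact fractions and locates the clean aperture within the stored image. It assumes big-endian storage and interprets the "(width–1)/2" and "(height–1)/2" terms as referring to the stored image dimensions, so that the aperture center is the offset plus the stored image center. That interpretation and the function names are assumptions.

```python
import struct
from fractions import Fraction

def parse_clap(atom):
    """Return (width, height, horiz_off, vert_off) as Fractions."""
    size, kind, *v = struct.unpack_from(">I4s8i", atom)
    if kind != b"clap":
        raise ValueError("not a clean aperture atom")
    # Fields come in numerator/denominator pairs.
    return tuple(Fraction(v[i], v[i + 1]) for i in range(0, 8, 2))

def clean_aperture_rect(atom, stored_width, stored_height):
    """Return (left, top, width, height) in stored-image pixel coordinates."""
    width, height, h_off, v_off = parse_clap(atom)
    center_x = h_off + Fraction(stored_width - 1, 2)
    center_y = v_off + Fraction(stored_height - 1, 2)
    left = center_x - (width - 1) / 2
    top = center_y - (height - 1) / 2
    return left, top, width, height
```

Using `Fraction` keeps the numerator/denominator precision intact until the caller decides how to round.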

Video Sample Data

The format of the data stored in video samples is completely dependent on the type of the compression used, as indicated in the video sample description. The following sections discuss some of the video encoding schemes supported by QuickTime.

Uncompressed RGB

Uncompressed RGB data is stored in a variety of different formats. The format used depends on the depth field of the video sample description. For all depths, the image data is padded on each scan line to ensure that each scan line begins on an even byte boundary.

For depths of 1, 2, 4, and 8, the values stored are indexes into the color table specified in the color table ID field.

For a depth of 16, the pixels are stored as 5-5-5 RGB values with the high bit of each 16-bit integer set to 0.

For a depth of 24, the pixels are stored packed together in RGB order.

For a depth of 32, the pixels are stored with an 8-bit alpha channel, followed by 8-bit RGB components.

RGB data can be stored in composite or planar format. Composite format stores the RGB data for each pixel contiguously, while planar format stores the R, G, and B data separately, so the RGB information for a given pixel is found using the same offset into multiple tables. For example, the data for two pixels could be represented in composite format as RGB-RGB or in planar format as RR-GG-BB.
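Two of the rules above lend themselves to a short illustration: scan-line padding and the 16-bit 5-5-5 layout. This is a sketch only. It assumes that red occupies the most significant five bits below the unused high bit, followed by green and then blue; both helper names are hypothetical.

```python
def row_bytes(width, depth):
    """Bytes per scan line, padded so the next line starts on an even byte."""
    raw = (width * depth + 7) // 8   # whole bytes needed for the pixels
    return raw + (raw & 1)           # add one pad byte if the count is odd

def unpack_555(pixel):
    """Split a 16-bit 5-5-5 pixel into 5-bit (r, g, b); the high bit is unused."""
    return (pixel >> 10) & 0x1F, (pixel >> 5) & 0x1F, pixel & 0x1F
```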

Uncompressed Y′CbCr (including yuv2)

The Y′CbCr color space is widely used for digital video. In this data format, luminance is stored as a single value (Y), and chrominance information is stored as two color-difference components (Cb and Cr). Cb is the difference between the blue component and a reference value; Cr is the difference between the red component and a reference value.

This is commonly referred to as “YUV” format, with “U” standing in for Cb and “V” standing in for Cr. This usage is not strictly correct, as YUV, YIQ, and Y′CbCr are distinct color models for PAL, NTSC, and digital video, but most Y′CbCr data formats and codecs are described or even named as some variant of “YUV.”

The values of Y, Cb, and Cr can be represented using a variety of bit depths, trading off accuracy for file size. Similarly, the chrominance values can be sub-sampled, recording only one pixel’s color value out of two, for example, or averaging the color value of adjacent pixels. This sub-sampling is a form of compression, but if no additional lossy compression is performed on the sampled video, it is still referred to as “uncompressed” Y′CbCr video. In addition, a fourth component can be added to Y′CbCr video to record an alpha channel.

The number of components (Y′CbCr with or without alpha) and any sub-sampling are denoted using ratios of three or four numbers, such as 4:2:2 to indicate 4 samples of Y for every 2 samples each of Cb and Cr (chroma sub-sampling), or 4:4:4 for equal storage of Y, Cb, and Cr (no sub-sampling), or 4:4:4:4 for Y′CbCr plus alpha with no sub-sampling. The ratios do not typically denote actual bit depths.

Uncompressed Y′CbCr video data is typically stored as follows:

Y′, Cb, and Cr components of each line are stored spatially left to right and temporally from earliest to latest.

The lines of a field or frame are stored spatially top to bottom and temporally earliest to latest.

Y′ is an unsigned integer. Cb and Cr are twos-complement signed integers.

The yuv2 stream, for example, is encoded in a series of 4-byte packets. Each packet represents two adjacent pixels on the same scan line. The bytes within each packet are ordered as follows:

y0 u y1 v

y0 is the luminance value for the left pixel; y1 is the luminance for the right pixel. u and v are chromatic values that are shared by both pixels.

Accurate conversion between RGB and Y′CbCr color spaces requires a computation for each component of each pixel. An example conversion from yuv2 into RGB is represented by the following equations:

r = 1.402 * v + y + .5

g = y - .7143 * v - .3437 * u + .5

b = 1.77 * u + y + .5

The r, g, and b values range from 0 to 255.

The coefficients in these equations are derived from matrix operations and depend on the reference values used for the primary colors and for white. QuickTime uses canonical values for these reference coefficients based on published standards. The sample description extension for Y′CbCr formats includes a 'colr' atom, which contains indexes into a table of canonical references. This provides support for multiple video standards without opening the door to data entry errors for stored coefficient values. Refer to the published standards for the formulas and methods used to derive conversion coefficients from the table entries.
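The example yuv2 equations given earlier in this section can be applied to one 4-byte packet as follows. This sketch treats y0 and y1 as unsigned bytes and u and v as twos-complement signed bytes, consistent with the storage rules above, and clamps each result to 0 through 255. The clamping and truncation steps and the function name are assumptions added for the illustration.

```python
import struct

def _clamp(x):
    """Truncate to an integer and limit to the 0..255 range."""
    return max(0, min(255, int(x)))

def yuv2_packet_to_rgb(packet):
    """Convert one y0 u y1 v packet into two (r, g, b) pixels."""
    y0, u, y1, v = struct.unpack(">BbBb", packet)

    def convert(y):
        r = 1.402 * v + y + 0.5
        g = y - 0.7143 * v - 0.3437 * u + 0.5
        b = 1.77 * u + y + 0.5
        return _clamp(r), _clamp(g), _clamp(b)

    # Both pixels share the same u and v values.
    return convert(y0), convert(y1)
```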

JPEG

QuickTime stores JPEG images according to the rules described in the ISO JPEG specification, document number DIS 10918-1.

MPEG-4 Video

MPEG-4 video uses the 'mp4v' data format. The sample description requires the elementary stream descriptor ('esds') extension to the standard video sample description. If non-square pixels are used, the pixel aspect ratio ('pasp') extension is also required. For details on these extensions, see “Pixel Aspect Ratio ('pasp')” and “MPEG-4 Elementary Stream Descriptor Atom ('esds').”

MPEG-4 video conforms to ISO/IEC documents 14496-1/2000(E) and 14496-2:1999/Amd.1:2000(E).

Motion-JPEG

Motion-JPEG (M-JPEG) is a variant of the ISO JPEG specification for use with digital video streams. Instead of compressing an entire image into a single bitstream, Motion-JPEG compresses each video field separately, returning the resulting JPEG bitstreams consecutively in a single frame.

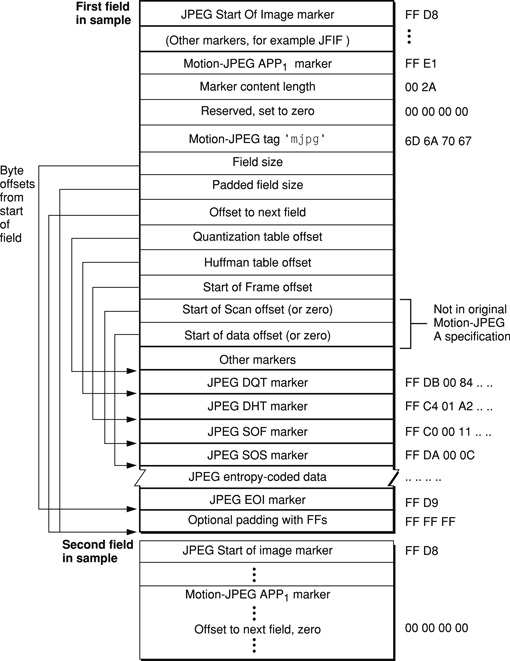

There are two flavors of Motion-JPEG currently in use. These two formats differ based on their use of markers. Motion-JPEG format A supports markers; Motion-JPEG format B does not. The following paragraphs describe how QuickTime stores Motion-JPEG sample data. Figure 3-11 shows an example of Motion-JPEG A dual-field sample data. Figure 3-12 shows an example of Motion-JPEG B dual-field sample data.

Each field of Motion-JPEG format A fully complies with the ISO JPEG specification, and therefore supports application markers. QuickTime uses the APP1 marker to store control information, as follows (all of the fields are 32-bit integers):

- Reserved

Unpredictable; should be set to 0.

- Tag

Identifies the data type; this field must be set to 'mjpg'.

- Field size

The actual size of the image data for this field, in bytes.

- Padded field size

Contains the size of the image data, including pad bytes. Some video hardware may append pad bytes to the image data; this field, along with the field size field, allows you to compute how many pad bytes were added.

- Offset to next field

The offset, in bytes, from the start of the field data to the start of the next field in the bitstream. This field should be set to 0 in the last field’s marker data.

- Quantization table offset

The offset, in bytes, from the start of the field data to the quantization table marker. If this field is set to 0, check the image description for a default quantization table.

- Huffman table offset

The offset, in bytes, from the start of the field data to the Huffman table marker. If this field is set to 0, check the image description for a default Huffman table.

- Start of frame offset

The offset from the start of the field data to the start of image marker. This field should never be set to 0.

- Start of scan offset

The offset, in bytes, from the start of the field data to the start of the scan marker. This field should never be set to 0.

- Start of data offset

The offset, in bytes, from the start of the field data to the start of the data stream. Typically, this immediately follows the start of scan data.

Note: The last two fields have been added since the original Motion-JPEG specification, and so they may be missing from some Motion-JPEG A files. You should check the length of the APP1 marker before using the start of scan offset and start of data offset fields.
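The length check recommended in the note can be sketched as follows. The function takes the APP1 marker data (the bytes after the marker's 2-byte length field, which is an assumption about how the caller has split the stream), reads as many of the ten big-endian 32-bit fields as are present, and reports the pad byte count. Field key names are illustrative.

```python
import struct

MJPEG_A_FIELDS = (
    "reserved", "tag", "field_size", "padded_field_size",
    "offset_to_next_field", "quant_table_offset", "huffman_table_offset",
    "start_of_frame_offset", "start_of_scan_offset", "start_of_data_offset",
)

def parse_mjpeg_a_app1(payload):
    """Decode the QuickTime control fields from APP1 marker data."""
    count = min(len(payload) // 4, len(MJPEG_A_FIELDS))
    if count < 8:
        raise ValueError("APP1 data too short for Motion-JPEG A")
    values = struct.unpack_from(">%dI" % count, payload)
    info = dict(zip(MJPEG_A_FIELDS, values))
    if info["tag"] != int.from_bytes(b"mjpg", "big"):
        raise ValueError("APP1 tag is not 'mjpg'")
    info["pad_bytes"] = info["padded_field_size"] - info["field_size"]
    return info
```

Older files yield a dictionary without the start of scan and start of data keys, so callers can test for their presence before using them.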

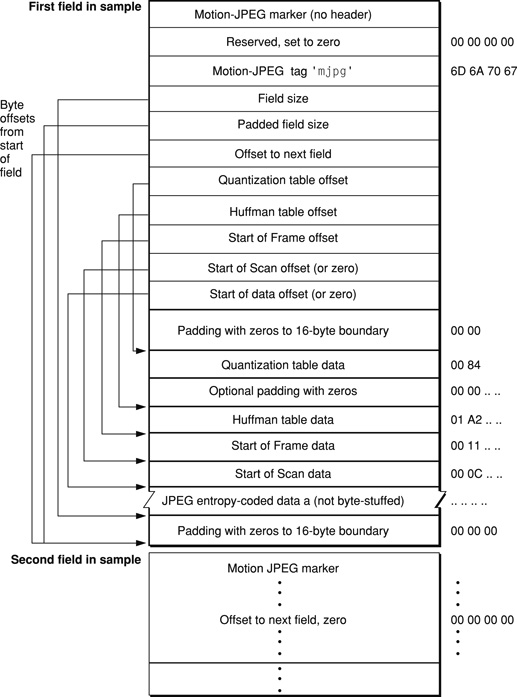

Motion-JPEG format B does not support markers. In place of the marker, therefore, QuickTime inserts a header at the beginning of the bitstream. Again, all of the fields are 32-bit integers.

- Reserved

Unpredictable; should be set to 0.

- Tag

The data type; this field must be set to 'mjpg'.

- Field size

The actual size of the image data for this field, in bytes.

- Padded field size

The size of the image data, including pad bytes. Some video hardware may append pad bytes to the image data; this field, along with the field size field, allows you to compute how many pad bytes were added.

- Offset to next field

The offset, in bytes, from the start of the field data to the start of the next field in the bitstream. This field should be set to 0 in the second field’s header data.

- Quantization table offset

The offset, in bytes, from the start of the field data to the quantization table. If this field is set to 0, check the image description for a default quantization table.

- Huffman table offset

The offset, in bytes, from the start of the field data to the Huffman table. If this field is set to 0, check the image description for a default Huffman table.

- Start of frame offset

The offset, in bytes, from the start of the field data to the field’s image data. This field should never be set to 0.

- Start of scan offset

The offset, in bytes, from the start of the field data to the start of scan data.

- Start of data offset

The offset, in bytes, from the start of the field data to the start of the data stream. Typically, this immediately follows the start of scan data.

Note: The last two fields were “reserved, must be set to zero” in the original Motion-JPEG specification.

The Motion-JPEG format B header must be a multiple of 16 in size. When you add pad bytes to the header, set them to 0.

Because Motion-JPEG format B does not support markers, the JPEG bitstream does not have null bytes (0x00) inserted after data bytes that are set to 0xFF.
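As a rough illustration of the format B header described above, the following C sketch reads the ten 32-bit fields in the order listed and derives the number of pad bytes. The struct and function names are hypothetical, and the sketch assumes the fields are stored big-endian, as is usual for QuickTime integers.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical struct; fields appear in the order the header lists them. */
typedef struct {
    uint32_t reserved;
    uint32_t tag;                  /* expected to be 'mjpg' */
    uint32_t field_size;
    uint32_t padded_field_size;
    uint32_t offset_to_next_field; /* 0 in the second field's header */
    uint32_t quant_table_offset;   /* 0: use default from image description */
    uint32_t huffman_table_offset; /* 0: use default from image description */
    uint32_t start_of_frame_offset;
    uint32_t start_of_scan_offset;
    uint32_t start_of_data_offset;
} MjpegBHeader;

enum { MJPEG_B_HEADER_BYTES = 40, MJPEG_TAG = 0x6D6A7067 /* 'mjpg' */ };

/* Assumption: 32-bit fields are big-endian. */
uint32_t mjb_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns 0 on success, -1 if the buffer is too short or the tag or sizes are invalid. */
int parse_mjpeg_b_header(const uint8_t *buf, size_t len, MjpegBHeader *out) {
    if (len < MJPEG_B_HEADER_BYTES) return -1;
    out->reserved              = mjb_be32(buf + 0);
    out->tag                   = mjb_be32(buf + 4);
    out->field_size            = mjb_be32(buf + 8);
    out->padded_field_size     = mjb_be32(buf + 12);
    out->offset_to_next_field  = mjb_be32(buf + 16);
    out->quant_table_offset    = mjb_be32(buf + 20);
    out->huffman_table_offset  = mjb_be32(buf + 24);
    out->start_of_frame_offset = mjb_be32(buf + 28);
    out->start_of_scan_offset  = mjb_be32(buf + 32);
    out->start_of_data_offset  = mjb_be32(buf + 36);
    if (out->tag != MJPEG_TAG) return -1;
    if (out->padded_field_size < out->field_size) return -1;
    return 0;
}

/* Pad bytes appended by the hardware: padded size minus actual size. */
uint32_t mjpeg_b_pad_bytes(const MjpegBHeader *h) {
    return h->padded_field_size - h->field_size;
}

/* Header length rounded up to the required multiple of 16. */
size_t mjpeg_b_padded_header_size(void) {
    return ((size_t)MJPEG_B_HEADER_BYTES + 15) & ~(size_t)15;
}
```

With ten 4-byte fields the unpadded header is 40 bytes, so the rounded size in this sketch is 48 bytes, with the extra 8 bytes set to 0.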

Sound Media

Sound media is used to store compressed and uncompressed audio data in QuickTime movies. It has a media type of 'soun'. This section describes the sound sample description and the storage format of sound files using various data formats.

Sound Sample Descriptions

The sound sample description contains information that defines how to interpret sound media data. This sample description is based on the standard sample description, as described in “Sample Description Atoms.”

The data format field contains the format of the audio data. This may specify a compression format or one of several uncompressed audio formats. Table 3-7 shows a list of some supported sound formats.

| Format | 4-Character code | Description |
|---|---|---|
| Not specified | 0x00000000 | This format descriptor should not be used, but may be found in some files. Samples are assumed to be stored in either 'raw ' or 'twos' format. |
| | 'NONE' | This format descriptor should not be used, but may be found in some files. Samples are assumed to be stored in either 'raw ' or 'twos' format. |
| | 'raw ' | Samples are stored uncompressed, in offset-binary format (values range from 0 to 255; 128 is silence). These are stored as 8-bit offset binaries. |
| | 'twos' | Samples are stored uncompressed, in two’s-complement format (sample values range from -128 to 127 for 8-bit audio, and -32768 to 32767 for 16-bit audio; 0 is always silence). These samples are stored in 16-bit big-endian format. |
| | | 16-bit little-endian, two’s-complement |
| kMACE3Compression | 'MAC3' | Samples have been compressed using MACE 3:1. (Obsolete.) |
| kMACE6Compression | 'MAC6' | Samples have been compressed using MACE 6:1. (Obsolete.) |
| kIMACompression | 'ima4' | Samples have been compressed using IMA 4:1. |
| kFloat32Format | | 32-bit floating point |
| kFloat64Format | | 64-bit floating point |
| k24BitFormat | | 24-bit integer |
| k32BitFormat | | 32-bit integer |
| kULawCompression | | uLaw 2:1 |
| kALawCompression | | aLaw 2:1 |
| kMicrosoftADPCMFormat | | Microsoft ADPCM-ACM code 2 |
| kDVIIntelIMAFormat | | DVI/Intel IMA ADPCM-ACM code 17 |
| kDVAudioFormat | | DV Audio |
| kQDesignCompression | | QDesign music |
| | 'QDM2' | QDesign music version 2 |
| | | QUALCOMM PureVoice |
| kMPEGLayer3Format | | MPEG-1 layer 3, CBR only (pre-QT4.1) |
| kFullMPEGLay3Format | | MPEG-1 layer 3, CBR & VBR (QT4.1 and later) |
| | 'mp4a' | MPEG-4 audio |

Sound Sample Description (Version 0)

There are currently two versions of the sound sample description, version 0 and version 1. Version 0 supports only uncompressed audio in raw ('raw ') or twos-complement ('twos') format, although these are sometimes incorrectly specified as either 'NONE' or 0x00000000.

- Version

A 16-bit integer that holds the sample description version (currently 0 or 1).

- Revision level

A 16-bit integer that must be set to 0.

- Vendor

A 32-bit integer that must be set to 0.

- Number of channels

A 16-bit integer that indicates the number of sound channels used by the sound sample. Set to 1 for monaural sounds, 2 for stereo sounds. Higher numbers of channels are not supported.

- Sample size (bits)

A 16-bit integer that specifies the number of bits in each uncompressed sound sample. Allowable values are 8 or 16. Formats using more than 16 bits per sample set this field to 16 and use sound description version 1.

- Compression ID

A 16-bit integer that must be set to 0 for version 0 sound descriptions. This may be set to –2 for some version 1 sound descriptions; see “Redefined Sample Tables.”

- Packet size

A 16-bit integer that must be set to 0.

- Sample rate

A 32-bit unsigned fixed-point number (16.16) that indicates the rate at which the sound samples were obtained. The integer portion of this number should match the media’s time scale. Many older version 0 files have values of 22254.5454 or 11127.2727, but most files have integer values, such as 44100. Sample rates greater than 2^16 are not supported.

Version 0 of the sound description format assumes uncompressed audio in 'raw ' or 'twos' format, 1 or 2 channels, 8 or 16 bits per sample, and a compression ID of 0.
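The version 0 field list can be sketched in C as follows. This is a minimal illustration under stated assumptions: the struct and function names are hypothetical, the buffer is assumed to begin at the version field (that is, just past the standard sample description header), and all integers are assumed to be big-endian.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical container for the sound-specific fields, in listed order. */
typedef struct {
    uint16_t version;           /* 0 or 1 */
    uint16_t revision;          /* must be 0 */
    uint32_t vendor;            /* must be 0 */
    uint16_t channels;          /* 1 = mono, 2 = stereo */
    uint16_t sample_size_bits;  /* 8 or 16 */
    int16_t  compression_id;    /* 0 for version 0; may be -2 for version 1 */
    uint16_t packet_size;       /* must be 0 */
    uint32_t sample_rate_fixed; /* unsigned 16.16 fixed point */
} SoundDescFields;

uint16_t snd_be16(const uint8_t *p) {
    return (uint16_t)(((unsigned)p[0] << 8) | p[1]);
}

uint32_t snd_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Convert an unsigned 16.16 fixed-point value to a double. */
double fixed16_16_to_double(uint32_t v) {
    return (double)v / 65536.0;
}

/* Reads the 20 bytes of sound-specific fields. Returns 0 on success, -1 if short. */
int parse_sound_desc_fields(const uint8_t *p, size_t len, SoundDescFields *out) {
    if (len < 20) return -1;
    out->version           = snd_be16(p + 0);
    out->revision          = snd_be16(p + 2);
    out->vendor            = snd_be32(p + 4);
    out->channels          = snd_be16(p + 8);
    out->sample_size_bits  = snd_be16(p + 10);
    out->compression_id    = (int16_t)snd_be16(p + 12);
    out->packet_size       = snd_be16(p + 14);
    out->sample_rate_fixed = snd_be32(p + 16);
    return 0;
}

/* True when the fields match the version 0 assumptions stated in the text. */
int sound_desc_is_plain_v0(const SoundDescFields *d) {
    return d->version == 0 && d->compression_id == 0 &&
           (d->channels == 1 || d->channels == 2) &&
           (d->sample_size_bits == 8 || d->sample_size_bits == 16);
}
```

A sample rate stored as 0xAC440000 converts to 44100.0 in this sketch; the fractional half of the field carries values such as the .5454 of the older 22254.5454 rate.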

Sound Sample Description (Version 1)

The version field in the sample description is set to 1 for this version of the sound description structure. In version 1 of the sound description, introduced in QuickTime 3, the sound description record is extended by 4 fields, each 4 bytes long, and includes the ability to add atoms to the sound description.

These added fields are used to support out-of-band configuration settings for decompression and to allow some parsing of compressed QuickTime sound tracks without requiring the services of a decompressor.

These fields introduce the idea of a packet. For uncompressed audio, a packet is a sample from a single channel. For compressed audio, this field has no real meaning; by convention, it is treated as 1/number-of-channels.

These fields also introduce the idea of a frame. For uncompressed audio, a frame is one sample from each channel. For compressed audio, a frame is a compressed group of samples whose format is dependent on the compressor.

Important: The value of all these fields has different meaning for compressed and uncompressed audio. The meaning may not be easily deducible from the field name.

The four new fields are:

- Samples per packet

The number of uncompressed frames generated by a compressed frame (an uncompressed frame is one sample from each channel). This is also the frame duration, expressed in the media’s timescale, where the timescale is equal to the sample rate. For uncompressed formats, this field is always 1.

- Bytes per packet

For uncompressed audio, the number of bytes in a sample for a single channel. This replaces the older sampleSize field, which is set to 16. This value is calculated by dividing the frame size by the number of channels. The same calculation is performed to calculate the value of this field for compressed audio, but the result of the calculation is not generally meaningful for compressed audio.

- Bytes per frame

The number of bytes in a frame: for uncompressed audio, an uncompressed frame; for compressed audio, a compressed frame. This can be calculated by multiplying the bytes per packet field by the number of channels.

- Bytes per sample

The size of an uncompressed sample in bytes. This is set to 1 for 8-bit audio, 2 for all other cases, even if the sample size is greater than 2 bytes.
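For uncompressed 8-bit or 16-bit audio, the relationships among the four fields can be worked through in a few lines of C. The struct and function names below are hypothetical; the arithmetic follows the field descriptions above and is only a sketch for the uncompressed case.

```c
#include <stdint.h>

/* Hypothetical holder for the four version 1 fields. */
typedef struct {
    uint32_t samples_per_packet;
    uint32_t bytes_per_packet;
    uint32_t bytes_per_frame;
    uint32_t bytes_per_sample;
} SoundDescV1Extra;

/* Derive the four fields for uncompressed audio (8 or 16 bits per sample). */
SoundDescV1Extra v1_fields_for_uncompressed(uint16_t bits_per_sample,
                                            uint16_t channels) {
    SoundDescV1Extra f;
    f.samples_per_packet = 1;                               /* always 1 when uncompressed */
    f.bytes_per_packet   = (bits_per_sample + 7u) / 8u;     /* one sample, one channel */
    f.bytes_per_frame    = f.bytes_per_packet * channels;   /* one sample per channel */
    f.bytes_per_sample   = (bits_per_sample <= 8) ? 1u : 2u; /* 1 for 8-bit, else 2 */
    return f;
}
```

For 16-bit stereo this yields 2 bytes per packet and 4 bytes per frame; for 8-bit mono every field is 1.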

When capturing or compressing audio using the QuickTime API, the value of these fields can be obtained by calling the Apple Sound Manager’s GetCompression function. Historically, the value returned for the bytes per frame field was not always reliable, however, so this field was set by multiplying bytes per packet by the number of channels.

To facilitate playback on devices that support only one or two channels of audio in 'raw ' or 'twos' format (such as most early Macintosh and Windows computers), all other uncompressed audio formats are treated as compressed formats, allowing a simple “decompressor” component to perform the necessary format conversion during playback. The audio samples are treated as opaque compressed frames for these data types, and the fields for sample size and bytes per sample are not meaningful.

The new fields correspond to the CompressionInfo structure used by the Macintosh Sound Manager (which uses 16-bit values) to describe the compression ratio of fixed ratio audio compression algorithms. If these fields are not used, they are set to 0. File readers only need to check to see if samplesPerPacket is 0.

Redefined Sample Tables

If the compression ID in the sample description is set to –2, the sound track uses redefined sample tables optimized for compressed audio.

Unlike video media, the data structures for QuickTime sound media were originally designed for uncompressed samples. The extended version 1 sound description structure provides a great deal of support for compressed audio, but it does not deal directly with the sample table atoms that point to the media data.

The ordinary sample tables do not point to compressed frames, which are the fundamental units of compressed audio data. Instead, they appear to point to individual uncompressed audio samples, each one byte in size, within the compressed frames. When used with the QuickTime API, QuickTime compensates for this fiction in a largely transparent manner, but attempting to parse the sound samples using the original sample tables alone can be quite complicated.

With the introduction of support for the playback of variable bit-rate (VBR) audio in QuickTime 4.1, the contents of a number of these fields were redefined, so that a frame of compressed audio is treated as a single media sample. The sample-to-chunk and chunk offset atoms point to compressed frames, and the sample size table documents the size of the frames. The size is constant for CBR audio, but can vary for VBR.

The time-to-sample table documents the duration of the frames. If the time scale is set to the sampling rate, which is typical, the duration equals the number of uncompressed samples in each frame, which is usually constant even for VBR (it is common to use a fixed frame duration). If a different media timescale is used, it is necessary to convert from timescale units to sampling rate units to calculate the number of samples.

This change in the meaning of the sample tables allows you to use the tables accurately to find compressed frames.

To indicate that this new meaning is used, a version 1 sound description is used and the compression ID field is set to –2. The samplesPerPacket field and the bytesPerSample field are not necessarily meaningful for variable bit rate audio, but these fields should be set correctly in cases where the values are constant; the other two new fields (bytesPerPacket and bytesPerFrame) are reserved and should be set to 0.

If the compression ID field is set to zero, the sample tables describe uncompressed audio samples and cannot be used directly to find and manipulate compressed audio frames. QuickTime has built-in support that allows programmers to act as if these sample tables pointed to uncompressed 1-byte audio samples.
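The check for redefined sample tables and the timescale conversion mentioned above can be sketched as follows. The function names are hypothetical, and the rounding to the nearest sample is an assumption of this sketch rather than something the format prescribes.

```c
#include <stdint.h>

/* Redefined sample tables are signaled by a version 1 description
   whose compression ID is -2. */
int uses_redefined_sample_tables(uint16_t version, int16_t compression_id) {
    return version == 1 && compression_id == -2;
}

/* Convert a frame duration from media timescale units to a count of
   uncompressed samples: samples = duration * sample_rate / timescale.
   Rounds to nearest (an assumption); returns 0 for a zero timescale. */
uint64_t frame_duration_to_samples(uint32_t duration,
                                   uint32_t media_timescale,
                                   uint32_t sample_rate_hz) {
    if (media_timescale == 0) return 0;
    return ((uint64_t)duration * sample_rate_hz + media_timescale / 2)
           / media_timescale;
}
```

When the media timescale equals the sampling rate, the duration is returned unchanged, which is the typical case the text describes.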

Sound Sample Description Extensions

Version 1 of the sound sample description also defines how extensions are added to the SoundDescription record.

    struct SoundDescriptionV1 {
        // original fields
        SoundDescription desc;
        // fixed compression ratio information
        unsigned long samplesPerPacket;
        unsigned long bytesPerPacket;
        unsigned long bytesPerFrame;
        unsigned long bytesPerSample;
        // optional, additional atom-based fields --
        // ([long size, long type, some data], repeat)
    };

All extensions to the SoundDescription record are made using atoms. That means one or more atoms can be appended to the end of the SoundDescription record using the standard [size, type] mechanism used throughout the QuickTime movie architecture.

siSlopeAndIntercept Atom

One possible extension to the SoundDescription record is the siSlopeAndIntercept atom, which contains slope, intercept, minClip, and maxClip parameters.

At runtime, the contents of the type siSlopeAndIntercept and siDecompressorSettings atoms are provided to the decompressor component through the standard SetInfo mechanism of the Sound Manager.

    struct SoundSlopeAndInterceptRecord {
        Float64 slope;
        Float64 intercept;
        Float64 minClip;
        Float64 maxClip;
    };
    typedef struct SoundSlopeAndInterceptRecord SoundSlopeAndInterceptRecord;

siDecompressionParam atom ('wave')

A second extension is the siDecompressionParam atom, which provides the ability to store data specific to a given audio decompressor in the SoundDescription record.

Some audio decompression algorithms, such as Microsoft’s ADPCM, require a set of out-of-band values to configure the decompressor. These are stored in an atom of type siDecompressionParam.

This atom contains other atoms with audio decompressor settings and is a required extension to the sound sample description for MPEG-4 audio. A 'wave' chunk for 'mp4a' typically contains (in order) at least a 'frma' atom, an 'mp4a' atom, an 'esds' atom, and a terminator atom.

The contents of other siDecompressionParam atoms are dependent on the audio decompressor.

- Size

An unsigned 32-bit integer holding the size of the decompression parameters atom.

- Type

An unsigned 32-bit field containing the four-character code 'wave'.

- Extension atoms

Atoms containing the necessary out-of-band decompression parameters for the sound decompressor. For MPEG-4 audio ('mp4a'), this includes elementary stream descriptor ('esds'), format ('frma'), and terminator (0x00000000) atoms.

Format atom ('frma')

This atom shows the data format of the stored sound media.

- Size

An unsigned 32-bit integer holding the size of the format atom.

- Type

An unsigned 32-bit field containing the four-character code 'frma'.

- Data format

The value of this field is copied from the data-format field of the Sample Description Entry.

Terminator atom (0x00000000)

This atom is present to indicate the end of the sound description. It contains no data, and has a type field of zero (0x00000000) instead of a four-character code.

- Size

An unsigned 32-bit integer holding the size of the terminator atom (always set to 8).

- Type

An unsigned 32-bit integer set to zero (0x00000000). This is a rare instance in which the type field is not a four-character ASCII code.
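To make the [size, type] layout concrete, here is a hedged C sketch that walks the child atoms inside a 'wave' atom until it reaches the terminator. The struct and function names are hypothetical, the buffer is assumed to start just past the 'wave' atom's own 8-byte header, and integers are assumed to be big-endian. Unknown atom types are skipped.

```c
#include <stddef.h>
#include <stdint.h>

enum {
    WAVE_ATOM_FRMA = 0x66726D61, /* 'frma' */
    WAVE_ATOM_ESDS = 0x65736473  /* 'esds' */
};

/* Hypothetical summary of what the scan found. */
typedef struct {
    int      atom_count;     /* child atoms seen before the terminator */
    int      saw_terminator; /* 1 if a zero-type atom ended the list */
    int      saw_esds;       /* 1 if an 'esds' atom was present */
    uint32_t frma_format;    /* data format from 'frma', or 0 if absent */
} WaveSummary;

uint32_t wave_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns 0 on success, -1 if an atom size is malformed. */
int scan_wave_children(const uint8_t *payload, size_t len, WaveSummary *out) {
    size_t pos = 0;
    out->atom_count = 0;
    out->saw_terminator = 0;
    out->saw_esds = 0;
    out->frma_format = 0;
    while (len - pos >= 8) {
        uint32_t size = wave_be32(payload + pos);
        uint32_t type = wave_be32(payload + pos + 4);
        if (size < 8 || size > len - pos) return -1;
        if (type == 0) {            /* terminator atom: type is zero */
            out->saw_terminator = 1;
            return 0;
        }
        out->atom_count++;
        if (type == WAVE_ATOM_FRMA && size >= 12) {
            out->frma_format = wave_be32(payload + pos + 8);
        } else if (type == WAVE_ATOM_ESDS) {
            out->saw_esds = 1;
        }
        pos += size;                /* skip to the next sibling atom */
    }
    return 0;
}
```

A caller could treat a missing terminator or a missing 'esds' atom as a reason to reject an 'mp4a' description, since the text calls both required.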

MPEG-4 Elementary Stream Descriptor ('esds') Atom

This atom is a required extension to the sound sample description for MPEG-4 audio. This atom contains an elementary stream descriptor, which is defined in ISO/IEC FDIS 14496.

- Size

An unsigned 32-bit integer holding the size of the elementary stream descriptor atom.

- Type

An unsigned 32-bit field containing the four-character code 'esds'.

- Version

An unsigned 32-bit field set to zero.

- Elementary Stream Descriptor

An elementary stream descriptor for MPEG-4 audio, as defined in the MPEG-4 specification ISO/IEC 14496.

Sound Sample Data

The format of data stored in sound samples is completely dependent on the type of the compressed data stored in the sound sample description. The following sections discuss some of the formats supported by QuickTime.

Uncompressed 8-Bit Sound

Eight-bit audio is stored in offset-binary encodings. If the data is in stereo, the left and right channels are interleaved.

Uncompressed 16-Bit Sound

Sixteen-bit audio may be stored in two’s-complement encodings. If the data is in stereo, the left and right channels are interleaved.
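The two uncompressed encodings can be illustrated with a short C sketch. The function names are hypothetical. The 16-bit case assumes the big-endian 'twos' layout, and the stereo helper assumes the left sample precedes the right sample in each frame, which the text does not state explicitly.

```c
#include <stddef.h>
#include <stdint.h>

/* 8-bit offset binary: 0..255 with 128 as silence, mapped to -128..127. */
int offset_binary_to_signed(uint8_t s) {
    return (int)s - 128;
}

/* 16-bit big-endian two's complement, mapped to -32768..32767. */
int twos_be16_to_signed(const uint8_t *p) {
    int v = ((int)p[0] << 8) | p[1];
    return v >= 0x8000 ? v - 0x10000 : v;
}

/* Split interleaved 16-bit stereo frames into separate channels.
   Assumption: each frame stores the left sample first, then the right. */
void deinterleave_stereo_twos16(const uint8_t *data, size_t frames,
                                int16_t *left, int16_t *right) {
    size_t i;
    for (i = 0; i < frames; i++) {
        left[i]  = (int16_t)twos_be16_to_signed(data + 4 * i);
        right[i] = (int16_t)twos_be16_to_signed(data + 4 * i + 2);
    }
}
```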

IMA, uLaw, and aLaw

IMA 4:1

The IMA encoding scheme is based on a standard developed by the Interactive Multimedia Association for pulse code modulation (PCM) audio compression. QuickTime uses a slight variation of the format to allow for random access. IMA is a 16-bit audio format which supports 4:1 compression. It is defined as follows:

kIMACompression = FOUR_CHAR_CODE('ima4'), /*IMA 4:1*/

uLaw 2:1 and aLaw 2:1

The uLaw (mu-law) encoding scheme is used on North American and Japanese phone systems, and is coming into use for voice data interchange, and in PBXs, voice-mail systems, and Internet talk radio (via MIME). In uLaw encoding, 14 bits of linear sample data are reduced to 8 bits of logarithmic data.

The aLaw encoding scheme is used in Europe and the rest of the world.

The kULawCompression and the kALawCompression formats are typically found in .au formats.

Floating-Point Formats

Both kFloat32Format and kFloat64Format are floating-point uncompressed formats. Depending upon codec-specific data associated with the sample description, the floating-point values may be in big-endian (network) or little-endian (Intel) byte order. This differs from the 16-bit formats, where there is a single format for each endian layout.

24- and 32-Bit Integer Formats

Both k24BitFormat and k32BitFormat are integer uncompressed formats. Depending upon codec-specific data associated with the sample description, the integer values may be in big-endian (network) or little-endian (Intel) byte order.

kMicrosoftADPCMFormat and kDVIIntelIMAFormat Sound Codecs

The kMicrosoftADPCMFormat and the kDVIIntelIMAFormat codec provide QuickTime interoperability with AVI and WAV files. The four-character codes used by Microsoft for their formats are numeric. To construct a QuickTime-supported codec format of this type, the Microsoft numeric ID is taken to generate a four-character code of the form 'msxx' where xx takes on the numeric ID.
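The 'msxx' construction can be written as a one-line helper. The function name is hypothetical, and the sketch assumes that xx is the 16-bit numeric ID placed in the two low-order bytes of the code, most significant byte first.

```c
#include <stdint.h>

/* Build a code of the form 'msxx' from a Microsoft numeric format ID.
   Assumption: xx is the 16-bit ID in the two low-order bytes. */
uint32_t ms_format_code(uint16_t numeric_id) {
    return ((uint32_t)'m' << 24) | ((uint32_t)'s' << 16) | numeric_id;
}
```

Under this assumption, ACM code 2 (Microsoft ADPCM) maps to 0x6D730002 and ACM code 17 (DVI/Intel IMA) maps to 0x6D730011.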

kDVAudioFormat Sound Codec

The DV audio sound codec, kDVAudioFormat, decodes audio found in a DV stream. Since a DV frame contains both video and audio, this codec knows how to skip video portions of the frame and only retrieve the audio portions. Likewise, the video codec skips the audio portions and renders only the image.

kQDesignCompression Sound Codec

The kQDesignCompression sound codec is the QDesign 1 (pre-QuickTime 4) format. Note that there is also a QDesign 2 format whose four-character code is 'QDM2'.

MPEG-1 Layer 3 (MP3) Codecs

The QuickTime MPEG layer 3 (MP3) codecs come in two particular flavors, as shown in Table 3-7. The first (kMPEGLayer3Format) is used exclusively in the constant bitrate (CBR) case (pre-QuickTime 4). The other (kFullMPEGLay3Format) is used in both the CBR and variable bitrate (VBR) cases. Note that they are the same codec underneath.

MPEG-4 Audio

MPEG-4 audio is stored as a sound track with data format 'mp4a' and certain additions to the sound sample description and sound track atom. Specifically:

- The compression ID is set to -2 and redefined sample tables are used (see “Redefined Sample Tables”).

- The sound sample description includes an siDecompressionParam atom (see “siDecompressionParam atom ('wave')”). The siDecompressionParam atom includes:

  - An MPEG-4 elementary stream descriptor extension atom (see “MPEG-4 Elementary Stream Descriptor ('esds') Atom”).

  - The inclusion of a format atom is strongly recommended. See “Format atom ('frma').”

  - The last atom in the siDecompressionParam atom must be a terminator atom. See “Terminator atom (0x00000000).”

  - Other atoms may be present as well; unknown atoms should be ignored.

- The audio data is stored as an elementary MPEG-4 audio stream, as defined in ISO/IEC specification 14496-1.

Formats Not Currently in Use: MACE 3:1 and 6:1

These compression formats are obsolete: MACE 3:1 and 6:1.

These are 8-bit sound codec formats, defined as follows:

    kMACE3Compression = FOUR_CHAR_CODE('MAC3'), /*MACE 3:1*/
    kMACE6Compression = FOUR_CHAR_CODE('MAC6'), /*MACE 6:1*/

Timecode Media

Timecode media is used to store time code data in QuickTime movies. It has a media type of 'tmcd'.

Timecode Sample Description

The timecode sample description contains information that defines how to interpret time code media data. This sample description is based on the standard sample description header, as described in “Sample Description Atoms.”

The data format field in the sample description is always set to 'tmcd'.

The timecode media handler also adds some of its own fields to the sample description.

- Reserved

A 32-bit integer that is reserved for future use. Set this field to 0.

- Flags

A 32-bit integer containing flags that identify some timecode characteristics. The following flags are defined.

Drop frame

Indicates whether the timecode is drop frame. Set it to 1 if the timecode is drop frame. This flag’s value is 0x0001.

24 hour max

Indicates whether the timecode wraps after 24 hours. Set it to 1 if the timecode wraps. This flag’s value is 0x0002.

Negative times OK

Indicates whether negative time values are allowed. Set it to 1 if the timecode supports negative values. This flag’s value is 0x0004.

Counter

Indicates whether the time value corresponds to a tape counter value. Set it to 1 if the timecode values are tape counter values. This flag’s value is 0x0008.

- Time scale

A 32-bit integer that specifies the time scale for interpreting the frame duration field.

- Frame duration

A 32-bit integer that indicates how long each frame lasts in real time.

- Number of frames

An 8-bit integer that contains the number of frames per second for the timecode format. If the time is a counter, this is the number of frames for each counter tick.

- Reserved

A 24-bit quantity that must be set to 0.

- Source reference

A user data atom containing information about the source tape. The only currently used user data list entry is the 'name' type. This entry contains a text item specifying the name of the source tape.
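The flag values listed above, and the relationship between the time scale and frame duration fields, can be sketched in C. The constant and function names are hypothetical; the frame rate calculation simply inverts the per-frame duration (frame duration divided by time scale, in seconds).

```c
#include <stdint.h>

/* Flag bit values as listed for the timecode sample description. */
enum {
    TC_FLAG_DROP_FRAME     = 0x0001,
    TC_FLAG_24_HOUR_MAX    = 0x0002,
    TC_FLAG_NEGATIVE_TIMES = 0x0004,
    TC_FLAG_COUNTER        = 0x0008
};

/* Nonzero when the samples hold tape counter values rather than
   timecode records. */
int tc_is_counter(uint32_t flags) {
    return (flags & TC_FLAG_COUNTER) != 0;
}

/* Nonzero when the timecode is drop frame. */
int tc_is_drop_frame(uint32_t flags) {
    return (flags & TC_FLAG_DROP_FRAME) != 0;
}

/* Frames per second implied by the time scale and frame duration:
   each frame lasts frame_duration / time_scale seconds. */
double tc_frame_rate(uint32_t time_scale, uint32_t frame_duration) {
    return frame_duration ? (double)time_scale / (double)frame_duration : 0.0;
}
```

For example, a time scale of 30000 with a frame duration of 1001 gives roughly 29.97 frames per second.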

Timecode Media Information Atom

The timecode media also requires a media information atom. This atom contains information governing how the timecode text is displayed. This media information atom is stored in a base media information atom (see “Base Media Information Atoms” for more information). The type of the timecode media information atom is 'tcmi'.

The timecode media information atom contains the following fields:

- Size

A 32-bit integer that specifies the number of bytes in this time code media information atom.

- Type

A 32-bit integer that identifies the atom type; this field must be set to 'tcmi'.

- Version

A 1-byte specification of the version of this timecode media information atom.

- Flags

A 3-byte space for timecode media information flags. Set this field to 0.

- Text font

A 16-bit integer that indicates the font to use. Set this field to 0 to use the system font. If the font name field contains a valid name, ignore this field.

- Text face

A 16-bit integer that indicates the font’s style. Set this field to 0 for normal text. You can enable other style options by using one or more of the following bit masks:

0x0001 Bold

0x0002 Italic

0x0004 Underline

0x0008 Outline

0x0010 Shadow

0x0020 Condense

0x0040 Extend

- Text size

A 16-bit integer that specifies the point size of the time code text.

- Text color

A 48-bit RGB color value for the timecode text.

- Background color

A 48-bit RGB background color for the timecode text.

- Font name

A Pascal string specifying the name of the timecode text’s font.

Timecode Sample Data

There are two different sample data formats used by timecode media.

If the Counter flag is set to 1 in the timecode sample description, the sample data is a counter value. Each sample contains a 32-bit integer counter value.

If the Counter flag is set to 0 in the timecode sample description, the sample data format is a timecode record, as follows.

- Hours

An 8-bit unsigned integer that indicates the starting number of hours.

- Negative

A 1-bit value indicating the time’s sign. If this bit is set to 1, the timecode record value is negative.

- Minutes

A 7-bit integer that contains the starting number of minutes.

- Seconds

An 8-bit unsigned integer indicating the starting number of seconds.

- Frames

An 8-bit unsigned integer that specifies the starting number of frames. This field’s value cannot exceed the value of the number of frames field in the timecode sample description.
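The timecode record occupies 32 bits in total (8 + 1 + 7 + 8 + 8). The C sketch below unpacks it, assuming the fields are stored in the order listed with the sign bit in the most significant bit of the second byte. The names are hypothetical, and the frame count helper covers only non-drop-frame timecode.

```c
#include <stdint.h>

/* Hypothetical unpacked form of a timecode record. */
typedef struct {
    uint8_t hours;
    int     negative; /* 1 if the record value is negative */
    uint8_t minutes;
    uint8_t seconds;
    uint8_t frames;
} TimecodeRecord;

/* Unpack a 4-byte record. Assumption: the 1-bit sign is the high bit
   of byte 1 and the 7-bit minutes value fills the rest of that byte. */
TimecodeRecord decode_timecode_record(const uint8_t b[4]) {
    TimecodeRecord r;
    r.hours    = b[0];
    r.negative = (b[1] >> 7) & 1;
    r.minutes  = (uint8_t)(b[1] & 0x7F);
    r.seconds  = b[2];
    r.frames   = b[3];
    return r;
}

/* Convert to a signed frame count for non-drop-frame timecode only. */
long timecode_to_frame_count(const TimecodeRecord *r,
                             unsigned frames_per_second) {
    long total_seconds = ((long)r->hours * 60 + r->minutes) * 60 + r->seconds;
    long frames = total_seconds * (long)frames_per_second + r->frames;
    return r->negative ? -frames : frames;
}
```

Drop-frame timecode would need an additional correction for the skipped frame numbers, which this sketch does not attempt.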

Text Media

Text media is used to store text data in QuickTime movies. It has a media type of 'text'.

Text Sample Description

The text sample description contains information that defines how to interpret text media data. This sample description is based on the standard sample description header, as described in “Sample Description Atoms.”

The data format field in the sample description is always set to 'text'.

The text media handler also adds some of its own fields to the sample description.

- Display flags

A 32-bit integer containing flags that describe how the text should be drawn. The following flags are defined.

Don’t auto scale

Controls text scaling. If this flag is set to 1, the text media handler reflows the text instead of scaling when the track is scaled. This flag’s value is 0x0002.

Use movie background color

Controls background color. If this flag is set to 1, the text media handler ignores the background color field in the text sample description and uses the movie’s background color instead. This flag’s value is 0x0008.

Scroll in

Controls text scrolling. If this flag is set to 1, the text media handler scrolls the text until the last of the text is in view. This flag’s value is 0x0020.

Scroll out

Controls text scrolling. If this flag is set to 1, the text media handler scrolls the text until the last of the text is gone. This flag’s value is 0x0040.

Horizontal scroll

Controls text scrolling. If this flag is set to 1, the text media handler scrolls the text horizontally; otherwise, it scrolls the text vertically. This flag’s value is 0x0080.

Reverse scroll

Controls text scrolling. If this flag is set to 1, the text media handler scrolls down (if scrolling vertically) or backward (if scrolling horizontally; note that horizontal scrolling also depends upon text justification). This flag’s value is 0x0100.

Continuous scroll

Controls text scrolling. If this flag is set to 1, the text media handler displays new samples by scrolling out the old ones. This flag’s value is 0x0200.

Drop shadow

Controls drop shadow. If this flag is set to 1, the text media handler displays the text with a drop shadow. This flag’s value is 0x1000.

Anti-alias

Controls anti-aliasing. If this flag is set to 1, the text media handler uses anti-aliasing when drawing text. This flag’s value is 0x2000.

Key text

Controls background color. If this flag is set to 1, the text media handler does not display the background color, so that the text overlays background tracks. This flag’s value is 0x4000.

- Text justification

A 32-bit integer that indicates how the text should be aligned. Set this field to 0 for left-justified text, to 1 for centered text, and to –1 for right-justified text.

- Background color

A 48-bit RGB color that specifies the text’s background color.

- Default text box

A 64-bit rectangle that specifies an area to receive text (top, left, bottom, right). Typically this field is set to all zeros.

- Reserved

A 64-bit value that must be set to 0.

- Font number

A 16-bit value that must be set to 0.

- Font face

A 16-bit integer that indicates the font’s style. Set this field to 0 for normal text. You can enable other style options by using one or more of the following bit masks:

0x0001 Bold

0x0002 Italic

0x0004 Underline

0x0008 Outline

0x0010 Shadow

0x0020 Condense

0x0040 Extend

- Reserved

An 8-bit value that must be set to 0.

- Reserved

A 16-bit value that must be set to 0.

- Foreground color

A 48-bit RGB color that specifies the text’s foreground color.

- Text name

A Pascal string specifying the name of the font to use to display the text.

Text Sample Data

The format of the text data is a 16-bit length word followed by the actual text. The length word specifies the number of bytes of text, not including the length word itself. Following the text, there may be one or more atoms containing additional information for drawing and searching the text.

Table 3-8 lists the currently defined text sample extensions.

| Text sample extension | Description |
|---|---|
| | Style information for the text. Allows you to override the default style in the sample description or to define more than one style for a sample. The data is a TextEdit style scrap. |
| | Table of font names. Each table entry contains a font number (stored in a 16-bit integer) and a font name (stored in a Pascal string). This atom is required if the |
| | Highlight information. The atom data consists of two 32-bit integers. The first contains the starting offset for the highlighted text, and the second has the ending offset. A highlight sample can be in a key frame or in a differenced frame. When it’s used in a differenced frame, the sample should contain a zero-length piece of text. |
| | Highlight color. This atom specifies the 48-bit RGB color to use for highlighting. |
| | Drop shadow offset. When the display flags indicate drop shadow style, this atom can be used to override the default drop shadow placement. The data consists of two 16-bit integers. The first indicates the horizontal displacement of the drop shadow, in pixels; the second, the vertical displacement. |
| | Drop shadow transparency. The data is a 16-bit integer between 0 and 256 indicating the degree of transparency of the drop shadow. A value of 256 makes the drop shadow completely opaque. |
| | Image font data. This atom contains two more atoms. An |
| | Image font highlighting. This atom contains metric information that governs highlighting when an |
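The text sample layout (a 16-bit length word, the text bytes, then optional extension atoms) can be sketched in C as follows. The names are hypothetical, integers are assumed to be big-endian, and the extension atoms are only counted using the standard [size, type] framing, not interpreted.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view onto a parsed text sample. */
typedef struct {
    const uint8_t *text;     /* points into the sample buffer; not terminated */
    uint16_t       text_len; /* bytes of text, excluding the length word */
    size_t         ext_offset; /* offset of the first extension atom */
    int            ext_count;  /* number of well-formed extension atoms */
} TextSample;

uint16_t txt_be16(const uint8_t *p) {
    return (uint16_t)(((unsigned)p[0] << 8) | p[1]);
}

uint32_t txt_be32(const uint8_t *p) {
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8) | (uint32_t)p[3];
}

/* Returns 0 on success, -1 if the length word or an atom size is invalid. */
int parse_text_sample(const uint8_t *buf, size_t len, TextSample *out) {
    uint16_t n;
    size_t pos;
    if (len < 2) return -1;
    n = txt_be16(buf);
    if ((size_t)n > len - 2) return -1;
    out->text = buf + 2;
    out->text_len = n;
    pos = 2 + (size_t)n;
    out->ext_offset = pos;
    out->ext_count = 0;
    while (len - pos >= 8) {          /* room for a [size, type] header */
        uint32_t size = txt_be32(buf + pos);
        if (size < 8 || size > len - pos) return -1;
        out->ext_count++;
        pos += size;
    }
    return 0;
}
```

A zero-length text (length word of 0) followed by atoms is accepted, which matches the highlight-in-differenced-frame case described in the table.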

Hypertext and Wired Text

Hypertext is used as an action that takes you to a Web URL; like a Web URL, it appears blue and underlined. Hypertext is stored in a text track sample atom stream as type 'htxt'. The same mechanism is used to store wired actions linked to text strings. A text string can be wired to act as a hypertext link when clicked or to perform any defined QuickTime wired action when clicked. For details on wired actions, see “Wired Action Grammar.”

The data stored is a QTAtomContainer. The root atom of hypertext in this container is a wired-text atom of type 'wtxt'. This is the parent for all individual hypertext objects.

For each hypertext item, the parent atom is of type 'htxt'. This is the atom container atom type. Two children of this atom that define the offset of the hypertext in the text stream are

    kRangeStart strt // unsigned long
    kRangeEnd end // unsigned long

Child atoms of the parent atom are the events of type kQTEventType and the ID of the event type. The children of these event atoms follow the same format as other wired events.

    kQTEventType, (kQTEventMouseClick, kQTEventMouseClickEnd,
                   kQTEventMouseClickEndTriggerButton,
                   kQTEventMouseEnter, kQTEventMouseExit)
    ...
    kTextWiredObjectsAtomType, 1
        kHyperTextItemAtomType, 1..n
            kRangeStart, 1
                long
            kRangeEnd, 1
                long
            kAction // The known range of track movie sprite actions

Music Media

Music media is used to store note-based audio data, such as MIDI data, in QuickTime movies. It has a media type of 'musi'.

Music Sample Description

The music sample description uses the standard sample description header, as described in the section “Sample Description Atoms.”

The data format field in the sample description is always set to 'musi'. The music media handler adds an additional 32-bit integer field to the sample description containing flags. Currently no flags are defined, and this field should be set to 0.

Following the flags field, there may be appended data in the QuickTime music format. This data consists of part-to-instrument mappings in the form of General events containing note requests. One note request event should be present for each part that will be used in the sample data.
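The layout described above can be sketched in Python as follows. The sketch assumes the standard sample description header consists of a 32-bit size, a 4-byte data format, 6 reserved bytes, and a 16-bit data reference index, all big-endian, and it omits the optional appended note request events. The function name build_music_sample_description is hypothetical.

```python
import struct


def build_music_sample_description(data_reference_index: int = 1) -> bytes:
    """Pack a music sample description with no appended note requests.

    Assumed layout (big-endian): 32-bit size, 4-byte data format,
    6 reserved bytes, 16-bit data reference index, then the 32-bit
    flags field added by the music media handler.
    """
    flags = 0  # no flags are currently defined
    body = struct.pack(">4s6xHI", b"musi", data_reference_index, flags)
    size = 4 + len(body)  # the size field counts its own 4 bytes
    return struct.pack(">I", size) + body


desc = build_music_sample_description()
print(len(desc), desc[4:8])
```

Under these assumptions the description is 20 bytes long: 16 bytes of header followed by the 4-byte flags field.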

Music Sample Data

The sample data for music samples consists entirely of data in the QuickTime music format. Typically, up to 30 seconds of notes are grouped into a single sample.

MPEG-1 Media

MPEG-1 media is used to store MPEG-1 video streams, MPEG-1, layer 2 audio streams, and multiplexed MPEG-1 audio and video streams in QuickTime movies. It has a media type of 'MPEG'.

MPEG-1 Sample Description

The MPEG-1 sample description uses the standard sample description header, as described in “Sample Description Atoms.”

The data format field in the sample description is always set to 'MPEG'. The MPEG-1 media handler adds no additional fields to the sample description.

Note: This data format is not used for MPEG-1, layer 3 audio (see “MPEG-1 Layer 3 (MP3) Codecs”).
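Because the MPEG-1 media handler adds no fields of its own, a reader can check an MPEG-1 sample description with a simple test. The Python sketch below assumes the standard header is 16 bytes (32-bit size, 4-byte data format, 6 reserved bytes, 16-bit data reference index, big-endian). The function name is_plain_mpeg1_description is hypothetical.

```python
import struct

# Assumed standard header: size, data format, 6 reserved bytes, data reference index.
HEADER = struct.Struct(">I4s6xH")


def is_plain_mpeg1_description(desc: bytes) -> bool:
    """Check that desc is a header-only sample description of format 'MPEG'."""
    if len(desc) < HEADER.size:
        return False
    size, data_format, _ref_index = HEADER.unpack_from(desc)
    # No handler-specific fields follow, so the size equals the header size.
    return data_format == b"MPEG" and size == HEADER.size == len(desc)


sample = struct.pack(">I4s6xH", 16, b"MPEG", 1)
print(is_plain_mpeg1_description(sample))
```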

MPEG-1 Sample Data

Each sample in an MPEG-1 media is an entire MPEG-1 stream. This means that a single MPEG-1 sample may be several hundred megabytes in size. The MPEG-1 encoding used by QuickTime corresponds to the ISO standard, as described in ISO document CD 11172.

Sprite Media

Sprite media is used to store character-based animation data in QuickTime movies. It has a media type of 'sprt'.

Sprite Sample Description

The sprite sample description uses the standard sample description header, as described in “Sample Description Atoms.”

The data format field in the sample description is always set to 'sprt'. The sprite media handler adds no additional fields to the sample description.

Sprite Sample Data

All sprite samples are stored in QT atom structures. The sprite media uses both key frames and differenced frames. The key frames contain all of the sprite’s image data, and the initial settings for each of the sprite’s properties.

A key frame always contains a shared data atom of type 'dflt'. This atom contains data to be shared between the sprites, consisting mainly of image data and sample descriptions. The shared data atom contains a single sprite image container atom, with an atom type value of 'imct' and an ID value of 1.

The sprite image container atom stores one or more sprite image atoms of type 'imag'. Each sprite image atom contains an image sample description immediately followed by the sprite’s compressed image data. The sprite image atoms should have ID numbers starting at 1 and counting consecutively upward.

The key frame must also contain definitions for each sprite in atoms of type 'sprt'. Sprite atoms should have ID numbers that start at 1 and count consecutively upward. Each sprite atom contains a list of properties. Table 3-9 shows all currently defined sprite properties.

| Property name | Value | Description |
|---|---|---|
| | 1 | Describes the sprite’s location and scaling within its sprite world or sprite track. By modifying a sprite’s matrix, you can modify the sprite’s location so that it appears to move in a smooth path on the screen or so that it jumps from one place to another. You can modify a sprite’s size, so that it shrinks, grows, or stretches. Depending on which image compressor is used to create the sprite images, other transformations, such as rotation, may be supported as well. Translation-only matrices provide the best performance. |
| | 4 | Specifies whether or not the sprite is visible. To make a sprite visible, you set the sprite’s visible property to |
| | 5 | Contains a 16-bit integer value specifying the layer into which the sprite is to be drawn. Sprites with lower layer numbers appear in front of sprites with higher layer numbers. To designate a sprite as a background sprite, you should assign it the special layer number |
| | 6 | Specifies a graphics mode and blend color that indicates how to blend a sprite with any sprites behind it and with the background. To set a sprite’s graphics mode, you call |
| | 8 | Specifies another sprite by ID that delegates QT events. |
| | 100 | Contains the atom ID of the sprite’s image atom. |
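The layering rule in the table above (lower layer numbers appear in front) implies that a renderer painting back to front would draw the highest layer number first. The Python sketch below illustrates that ordering. The draw_order function and the painter-style rendering are assumptions made for illustration, not a description of how the sprite media handler is implemented, and the special background layer number is not modeled.

```python
from typing import Dict, List


def draw_order(layers: Dict[int, int]) -> List[int]:
    """Return sprite IDs ordered back to front.

    layers maps sprite ID -> layer number. Lower layer numbers appear in
    front, so the highest layer number is painted first. Ties are broken
    by sprite ID, which is an arbitrary choice made for this sketch.
    """
    return sorted(layers, key=lambda sprite_id: (-layers[sprite_id], sprite_id))


# Sprite 2 sits on the rearmost layer, sprite 1 on the frontmost.
print(draw_order({1: 0, 2: 10, 3: 5}))
```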

The override sample differs from the key frame sample in two ways. First, the override sample does not contain a shared data atom. All shared data must appear in the key frame. Second, only those sprite properties that change need to be specified. If none of a sprite’s properties change in a given frame, then the sprite does not need an atom in the differenced frame.

Override samples can be used in one of two ways. They can be combined cumulatively, in the way video difference frames build on a key frame, to construct the current frame; or the current frame can be derived by combining only the key frame and the current override sample.

Refer to the section “Sprite Track Media Format” for information on how override samples are indicated in the file, using kSpriteTrackPropertySampleFormat and the default behavior of the kKeyFrameAndSingleOverride format.
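The two ways of using override samples can be sketched in Python as follows. Sprite state is modeled as a dictionary from sprite ID to a dictionary of property values. The names apply_sample and current_frame, the cumulative flag, and the string property names are hypothetical and chosen only for this example.

```python
from typing import Dict, List

SpriteProps = Dict[int, Dict[str, object]]  # sprite ID -> property name -> value


def apply_sample(state: SpriteProps, sample: SpriteProps) -> SpriteProps:
    """Return a copy of state with the properties in sample applied."""
    merged = {sprite_id: dict(props) for sprite_id, props in state.items()}
    for sprite_id, props in sample.items():
        merged.setdefault(sprite_id, {}).update(props)
    return merged


def current_frame(key_frame: SpriteProps, overrides: List[SpriteProps],
                  index: int, cumulative: bool) -> SpriteProps:
    """Derive the frame shown at override sample number index (0-based)."""
    if cumulative:
        # Apply every override sample from the key frame up to the current one.
        frame = key_frame
        for sample in overrides[: index + 1]:
            frame = apply_sample(frame, sample)
        return frame
    # Combine only the key frame and the current override sample.
    return apply_sample(key_frame, overrides[index])


key = {1: {"visible": True, "layer": 5}}
overrides = [{1: {"layer": 2}}, {1: {"visible": False}}]
print(current_frame(key, overrides, 1, cumulative=True))
print(current_frame(key, overrides, 1, cumulative=False))
```

In the cumulative case the layer change from the first override sample persists into the second; in the single-override case the sprite's layer reverts to the key frame value because only the current override sample is applied.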

Sprite Track Properties

In addition to defining properties for individual sprites, you can also define properties that apply to an entire sprite track. These properties may override default behavior or provide hints to the sprite media handler. The following sprite track properties are supported:

The kSpriteTrackPropertyBackgroundColor property specifies a background color for the sprite track. The background color is used for any area that is not covered by regular sprites or background sprites. If you do not specify a background color, the sprite track uses black as the default background color.

The kSpriteTrackPropertyOffscreenBitDepth property specifies a preferred bit depth for the sprite track’s offscreen buffer. The allowable values are 8 and 16. To save memory, you should set the value of this property to the minimum depth needed. If you do not specify a bit depth, the sprite track allocates an offscreen buffer with the depth of the deepest intersecting monitor.

The kSpriteTrackPropertySampleFormat property specifies the sample format for the sprite track. If you do not specify a sample format, the sprite track uses the default format, kKeyFrameAndSingleOverride.

To specify sprite track properties, you create a single QT atom container and add a leaf atom for each property you want to specify. To add the properties to a sprite track, you call the media handler function SetMediaPropertyAtom. To retrieve a sprite track’s properties, you call the media handler function GetMediaPropertyAtom.
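The defaults described for the three properties above can be summarized in a small Python sketch. It models the property container as a dictionary rather than a QT atom container and does not call SetMediaPropertyAtom. The dictionary keys and the resolve_track_properties function are hypothetical names chosen for this example.

```python
from typing import Dict

ALLOWED_BIT_DEPTHS = (8, 16)

# Defaults stated in the text. The offscreen bit depth has no fixed default:
# when unspecified, it follows the deepest intersecting monitor at run time.
DEFAULTS = {
    "background_color": (0, 0, 0),  # black, as an RGB triple
    "sample_format": "kKeyFrameAndSingleOverride",
}


def resolve_track_properties(specified: Dict[str, object]) -> Dict[str, object]:
    """Merge the specified sprite track properties with the documented defaults."""
    depth = specified.get("offscreen_bit_depth")
    if depth is not None and depth not in ALLOWED_BIT_DEPTHS:
        raise ValueError(f"offscreen bit depth must be 8 or 16, got {depth}")
    resolved = dict(DEFAULTS)
    resolved.update(specified)
    return resolved


print(resolve_track_properties({"offscreen_bit_depth": 8}))
```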

The sprite track properties and their corresponding data types are listed in Table 3-10.

| Atom type | Atom ID | Leaf data type |
|---|---|---|
| | 1 | |
| | 1 | |
| | 1 | |
| | 1 | |
| | 1 | |
| | 1 | |
| | 1 | |

Note: When pasting portions of two different tracks together, the Movie Toolbox checks to see that all sprite track properties match. If, in fact, they do match, the paste results in a single sprite track instead of two.

Sprite Track Media Format

The sprite track media format is hierarchical and based on QT atoms and atom containers. A sprite track is defined by one or more key frame samples, each followed by any number of override samples. A key frame sample and its subsequent override samples define a scene in the sprite track. A key frame sample is a QT atom container that contains atoms defining the sprites in the scene and their initial properties. The override samples are other QT atom containers that contain atoms that modify sprite properties, thereby animating the sprites in the scene. In addition to defining properties for individual sprites, you can also define properties that apply to an entire sprite track.

Figure 3-13 shows the high-level structure of a sprite track key frame sample. Each atom in the atom container is represented by its atom type, atom ID, and, if it is a leaf atom, the type of its data.

The QT atom container contains one child atom for each sprite in the key frame sample. Each sprite atom has a type of kSpriteAtomType. The sprite IDs are numbered from 1 to the number of sprites defined by the key frame sample (numSprites).

Each sprite atom contains leaf atoms that define the properties of the sprite, as shown in Figure 3-14. For example, the kSpritePropertyLayer property defines a sprite’s layer. Each sprite property atom has an atom type that corresponds to the property and an ID of 1.

In addition to the sprite atoms, the QT atom container contains one atom of type kSpriteSharedDataAtomType with an ID of 1. The atoms contained by the shared data atom describe data that is shared by all sprites. The shared data atom contains one atom of type kSpriteImagesContainerAtomType with an ID of 1 (Figure 3-15).

The image container atom contains one atom of type kImageAtomType for each image in the key frame sample. The image atom IDs are numbered from 1 to the number of images (numImages).

Each image atom contains a leaf atom that holds the image data (type kSpriteImageDataAtomType) and an optional leaf atom (type kSpriteNameAtomType) that holds the name of the image.
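The numbering rules for a key frame sample can be checked with a short Python sketch. It uses the four-character codes given under “Sprite Sample Data” ('sprt', 'dflt', 'imct', 'imag') and models atoms with a hypothetical Atom class, not the on-disk QT atom container format. Only the ID and nesting rules are checked; property atoms and image data are ignored.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Atom:
    """Hypothetical in-memory stand-in for a QT atom (not the on-disk format)."""
    type: str
    id: int
    children: List["Atom"] = field(default_factory=list)


def ids_are_consecutive(atoms: List[Atom]) -> bool:
    """True if the atom IDs are exactly 1..len(atoms), in any order."""
    return sorted(a.id for a in atoms) == list(range(1, len(atoms) + 1))


def check_key_frame(root: Atom) -> List[str]:
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    sprites = [a for a in root.children if a.type == "sprt"]
    shared = [a for a in root.children if a.type == "dflt"]
    if not ids_are_consecutive(sprites):
        problems.append("sprite atom IDs must run from 1 to numSprites")
    if len(shared) != 1 or shared[0].id != 1:
        problems.append("expected one shared data atom with ID 1")
        return problems
    containers = [a for a in shared[0].children if a.type == "imct"]
    if len(containers) != 1 or containers[0].id != 1:
        problems.append("expected one image container atom with ID 1")
        return problems
    images = [a for a in containers[0].children if a.type == "imag"]
    if not ids_are_consecutive(images):
        problems.append("image atom IDs must run from 1 to numImages")
    return problems


key_frame = Atom("root", 0, [
    Atom("sprt", 1), Atom("sprt", 2),
    Atom("dflt", 1, [Atom("imct", 1, [Atom("imag", 1)])]),
])
print(check_key_frame(key_frame))
```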

Sprite Media Format Atoms

The sprite track’s sample format enables you to store the atoms necessary to describe action lists that are executed in response to QuickTime events. “QT Atom Container Description Key” defines a grammar for constructing valid action sprite samples, which may include complex expressions.

Both key frame samples and override samples support the sprite action atoms. Override samples override actions at the QuickTime event level. In effect, overriding completely replaces one event handler and all its actions with another. The sprite track’s kSpriteTrackPropertySampleFormat property has no effect on how actions are performed. The behavior is similar to the default kKeyFrameAndSingleOverride format: if a given override sample has no handler for the event, the key frame’s handler is used, if there is one.
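The handler lookup described above can be sketched in Python as follows. Event handlers are modeled as callables stored in dictionaries keyed by event name. The resolve_handler function, the event name strings, and the dictionary model are assumptions made for this example; real handlers are stored as atoms in the sprite samples.

```python
from typing import Callable, Dict, Optional

Handler = Callable[[], str]


def resolve_handler(event: str,
                    key_frame_handlers: Dict[str, Handler],
                    override_handlers: Dict[str, Handler]) -> Optional[Handler]:
    """Pick the handler for event: the override's if present, else the key frame's."""
    if event in override_handlers:
        # The override replaces the key frame handler and all its actions.
        return override_handlers[event]
    return key_frame_handlers.get(event)


key_handlers = {"mouse_click": lambda: "go to URL", "mouse_enter": lambda: "highlight"}
override = {"mouse_click": lambda: "play sound"}

print(resolve_handler("mouse_click", key_handlers, override)())
print(resolve_handler("mouse_enter", key_handlers, override)())
```

An event with no handler in either sample resolves to None in this sketch, which corresponds to the "if there is one" condition in the text.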

Sprite Media Format Extensions

This section describes some of the atom types and IDs used to extend the sprite track’s media format, thus enabling action sprite capabilities.

A complete description of the grammar for sprite media handler samples, including action sprite extensions, is included in the section “Sprite Media Handler Track Properties QT Atom Container Format.”

Important:

Some sprite track property atoms were added in QuickTime