What is Core Audio?

Core Audio is designed to handle all audio needs in Mac OS X. You can use Core Audio to generate, record, mix, edit, process, and play audio. Using Core Audio, you can also generate, record, process, and play MIDI data, interfacing with both hardware and software MIDI instruments.

Core Audio combines C programming interfaces with tight system integration, resulting in a flexible programming environment that still maintains low latency through the signal chain. Some of Core Audio's benefits include:

Plug-in interfaces for audio synthesis and audio digital signal processing (DSP)

Built-in support for reading and writing a wide variety of audio file and data formats

Plug-in interfaces for handling custom file and data formats

A modular approach for constructing signal chains

Scalable multichannel input and output

Easy synchronization of audio and MIDI data during recording or playback

A standardized interface to all built-in and external hardware devices, regardless of connection type (USB, FireWire, PCI, and so on)

Note: Although Core Audio uses C interfaces, you can call Core Audio functions from Cocoa applications and Objective-C code.

In this section:

Core Audio in Mac OS X

A Core Audio Recording Studio

Development Using the Core Audio SDK

Core Audio in Mac OS X

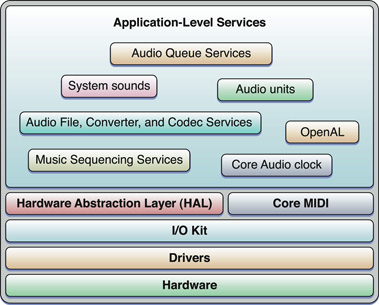

Core Audio is tightly integrated into Mac OS X. The majority of Core Audio services are layered on top of the Hardware Abstraction Layer (HAL) and Core MIDI, as shown in Figure 1-1. Audio signals pass to and from hardware through the HAL, while Core MIDI provides a similar interface for MIDI data and MIDI devices.

The sections that follow describe some of the essential features of Core Audio:

A Little About Digital Audio and Linear PCM

As you might expect, Core Audio handles audio data in a digital format. Most Core Audio constructs manipulate audio data in linear pulse-code-modulated (linear PCM) format, which is the most common uncompressed data format for digital audio. Pulse code modulation relies on measuring an audio signal's magnitude at regular intervals (the sampling rate) and converting each sample into a numerical value. This value varies linearly with the signal amplitude. For example, standard CD audio has a sampling rate of 44.1 kHz and uses 16 bits to represent the signal amplitude (65,536 possible values). Core Audio’s data structures can describe linear PCM at any sample rate and bit depth, supporting both integer and floating-point sample values.
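The linear mapping described above can be sketched in a few lines of C. This is only an illustration, not Core Audio code: the function names are hypothetical, and scaling by 32767 is one common convention for 16-bit samples, assumed here for simplicity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Number of distinct values a sample of the given bit depth can take. */
unsigned long pcm_level_count(unsigned bits)
{
    assert(bits < 32);
    return 1UL << bits;
}

/* Time, in seconds, at which sample number `index` is measured. */
double sample_time_seconds(size_t index, double sample_rate_hz)
{
    assert(sample_rate_hz > 0.0);
    return (double)index / sample_rate_hz;
}

/* Map an amplitude in [-1.0, 1.0] linearly onto the signed 16-bit range.
   Out-of-range input is clamped; the 32767 scale factor is an assumption. */
int16_t quantize_16bit(double amplitude)
{
    if (amplitude > 1.0)  amplitude = 1.0;
    if (amplitude < -1.0) amplitude = -1.0;
    double scaled = amplitude * 32767.0;
    /* Round to the nearest integer without depending on the math library. */
    return (int16_t)(scaled >= 0.0 ? scaled + 0.5 : scaled - 0.5);
}
```

With CD-style parameters, `pcm_level_count(16)` gives the 65,536 possible values mentioned above, and `sample_time_seconds(i, 44100.0)` gives the instant at which sample `i` is taken.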

Core Audio generally expects audio data to be in native-endian 32-bit floating-point linear PCM format. However, you can create audio converters to translate audio data between different linear PCM variants. You also use these converters to translate between linear PCM and compressed audio formats such as MP3 and Apple Lossless. Core Audio supplies codecs to translate most common digital audio formats (though it does not supply an encoder for converting to MP3).
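To make the idea of translating between linear PCM variants concrete, here is a rough sketch of the kind of work such a conversion involves: turning native-endian 32-bit floating-point samples into 16-bit big-endian integer samples. The function names are hypothetical and this is not the Core Audio converter interface; it only illustrates the bit-depth and byte-order changes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert one float sample (nominally in [-1.0, 1.0]) to a 16-bit integer.
   Out-of-range input is clamped; the 32767 scale factor is an assumption. */
int16_t float_to_int16(float sample)
{
    if (sample > 1.0f)  sample = 1.0f;
    if (sample < -1.0f) sample = -1.0f;
    float scaled = sample * 32767.0f;
    return (int16_t)(scaled >= 0.0f ? scaled + 0.5f : scaled - 0.5f);
}

/* Convert `sample_count` native-endian floats into 16-bit big-endian PCM.
   `out` must have room for 2 * sample_count bytes. */
void convert_float32_to_int16_be(const float *in, uint8_t *out, size_t sample_count)
{
    assert(in != NULL && out != NULL);
    for (size_t i = 0; i < sample_count; ++i) {
        uint16_t bits = (uint16_t)float_to_int16(in[i]);
        out[2 * i]     = (uint8_t)(bits >> 8);   /* most significant byte first */
        out[2 * i + 1] = (uint8_t)(bits & 0xFF);
    }
}
```

Writing the bytes explicitly, rather than copying the integer's memory, keeps the output byte order the same regardless of the byte order of the machine running the code.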

Core Audio also supports most common file formats for storing audio data.

Audio Units

Audio units are plug-ins that handle audio data.

Within a Mac OS X application, almost all processing and manipulation of audio data can be done through audio units (though this is not a requirement). Some units exist solely to simplify common tasks for developers (such as splitting a signal or interfacing with hardware), while others appear onscreen as signal processors that users can insert into the signal path. For example, software-based effect processors often mimic their real-world counterparts (such as distortion boxes) onscreen. Some audio units generate signals themselves, whether programmatically or in response to MIDI input. Some examples of audio unit implementations include the following:

A signal processor (for example, high-pass filter, flanger, compressor, or distortion box). An effect unit performs audio digital signal processing (DSP) and is analogous to an effects box or outboard signal processor.

A musical instrument or software synthesizer. These instrument units (sometimes called music device audio units) typically generate musical tones in response to MIDI input. An instrument unit can interpret MIDI data from a file or an external MIDI device.

A signal source. A generator unit lets you implement an audio unit as a signal generator. Unlike an instrument unit, a generator unit is not activated by MIDI input but rather through code. For example, a generator unit might calculate and generate sine waves, or it might source the data from a file or over a network.

An interface to hardware input or output. For more information, see “The Hardware Abstraction Layer” and “Interfacing with Hardware Devices.”

A signal converter, which uses an audio converter to convert between various linear PCM variants. See “Audio Converters and Codecs” for more details.

A mixer or splitter. For example, a mixer unit can mix down tracks or apply stereo or 3D panning effects. A splitter unit might transform a mono signal into simulated stereo by splitting the signal into two channels and applying comb filtering.

An offline effect unit. Offline effects are either too processor-intensive or simply impossible to apply in real time. For example, an effect that reverses the samples in a file (resulting in the music being played backward) must be applied offline.
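The reversal example shows why some effects cannot run in real time: the first output frame is the last input frame, so the whole recording must be available before any output can be produced. A minimal sketch in plain C follows, assuming interleaved floating-point samples already loaded into memory; the function name is hypothetical and this is not the audio unit interface.

```c
#include <assert.h>
#include <stddef.h>

/* Reverse the order of frames in an interleaved buffer, in place.
   Each frame holds `channels` samples; the channel order inside a
   frame is preserved so that left stays left and right stays right. */
void reverse_frames(float *samples, size_t frame_count, size_t channels)
{
    assert(samples != NULL && channels > 0);
    if (frame_count < 2) {
        return; /* nothing to swap */
    }
    size_t first = 0;
    size_t last = frame_count - 1;
    while (first < last) {
        for (size_t c = 0; c < channels; ++c) {
            float tmp = samples[first * channels + c];
            samples[first * channels + c] = samples[last * channels + c];
            samples[last * channels + c] = tmp;
        }
        ++first;
        --last;
    }
}
```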

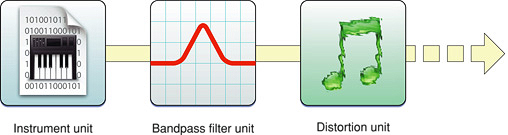

Because audio units are modular, you can mix and match them in whatever permutations you or the end user requires. Figure 1-2 shows a simple chain of audio units. This chain uses an instrument unit to generate an audio signal, which is then passed through effect units to apply bandpass filtering and distortion.
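The modular idea can be illustrated with a small sketch in plain C, where each stage is a hypothetical `Unit` with a render callback and the chain simply runs the stages in order over one buffer. None of these names come from the audio unit interfaces, and the stages are deliberately simplified: a square-wave source stands in for the instrument unit, a gain stage stands in for the filter, and hard clipping stands in for distortion.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for an audio unit: a render callback plus state. */
typedef struct Unit {
    void (*render)(struct Unit *self, float *buffer, size_t count);
    float  param;    /* amplitude, gain factor, or clip ceiling */
    size_t position; /* running sample counter, used only by the source */
} Unit;

/* Source: square wave of amplitude `param` that flips sign every 4 samples. */
void source_render(Unit *self, float *buffer, size_t count)
{
    for (size_t i = 0; i < count; ++i) {
        int positive_half = ((self->position / 4) % 2) == 0;
        buffer[i] = positive_half ? self->param : -self->param;
        self->position++;
    }
}

/* Effect: multiply every sample by `param`. */
void gain_render(Unit *self, float *buffer, size_t count)
{
    for (size_t i = 0; i < count; ++i) {
        buffer[i] *= self->param;
    }
}

/* Effect: crude distortion that clamps samples to [-param, param]. */
void clip_render(Unit *self, float *buffer, size_t count)
{
    for (size_t i = 0; i < count; ++i) {
        if (buffer[i] > self->param)  buffer[i] = self->param;
        if (buffer[i] < -self->param) buffer[i] = -self->param;
    }
}

/* Run each unit in order; the output of one stage is the input of the next. */
void render_chain(Unit *units, size_t unit_count, float *buffer, size_t count)
{
    assert(units != NULL && buffer != NULL);
    for (size_t u = 0; u < unit_count; ++u) {
        units[u].render(&units[u], buffer, count);
    }
}
```

Because every stage shares the same callback signature, reordering, adding, or removing stages only means changing the array passed to `render_chain`.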

If you develop audio DSP code that you want to make available to a wide variety of applications, you should package it as an audio unit.

If you are an audio application developer, supporting audio units lets you leverage the library of existing audio units (both third-party and Apple-supplied) to extend the capabilities of your application.

Apple ships a number of audio units to accomplish common tasks, such as filtering, delay, reverberation, and mixing, as well as units to represent input and output devices (for example, units that allow audio data to be transmitted and received over a network). See “System-Supplied Audio Units” for a listing of the audio units that ship with Mac OS X v10.4.

For a simple way to experiment with audio units, see the AU Lab application, available in the Xcode Tools install at /Developer/Applications/Audio. AU Lab allows you to mix and match various audio units. In this way, you can build a signal chain from an audio source through an output device.

The Hardware Abstraction Layer

Core Audio uses a hardware abstraction layer (HAL) to provide a consistent and predictable interface for applications to interact with hardware. The HAL can also provide timing information to your application to simplify synchronization or to adjust for latency.

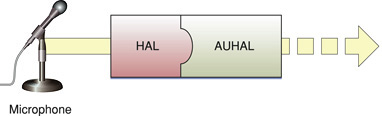

In most cases, you do not even have to interact directly with the HAL. Apple supplies a special audio unit, called the AUHAL, which allows you to pass audio data directly from another audio unit to a hardware device. Similarly, input coming from a hardware device is also routed through the AUHAL to be made available to subsequent audio units, as shown in Figure 1-3.

The AUHAL also takes care of any data conversion or channel mapping required to translate audio data between audio units and hardware.
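As a rough illustration of what channel mapping means, the sketch below routes interleaved input channels to output channels according to a map array. This is plain C with hypothetical names, not AUHAL code; treating a negative map entry as "no source, output silence" is an assumption made for this example.

```c
#include <assert.h>
#include <stddef.h>

/* Route interleaved input channels to interleaved output channels.
   channel_map[c] names the input channel that feeds output channel c.
   A negative or out-of-range entry produces silence on that output
   (an assumption made for this sketch). */
void map_channels(const float *in, size_t in_channels,
                  float *out, size_t out_channels,
                  const int *channel_map, size_t frame_count)
{
    assert(in != NULL && out != NULL && channel_map != NULL);
    for (size_t f = 0; f < frame_count; ++f) {
        for (size_t c = 0; c < out_channels; ++c) {
            int source = channel_map[c];
            if (source >= 0 && (size_t)source < in_channels) {
                out[f * out_channels + c] = in[f * in_channels + (size_t)source];
            } else {
                out[f * out_channels + c] = 0.0f;
            }
        }
    }
}
```

For example, a map of `{1, 0, -1}` applied to stereo input swaps left and right and leaves a third output channel silent.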

MIDI Support

Core MIDI is the part of Core Audio that supports the MIDI protocol. Core MIDI allows applications to communicate with MIDI devices such as keyboards and guitars. Input from MIDI devices can be either stored as MIDI data or translated through an instrument unit into an audio signal. Applications can also output information to MIDI devices. Core MIDI uses abstractions to represent MIDI devices and mimic standard MIDI cable connections (MIDI In, MIDI Out, and MIDI Thru) while providing low-latency I/O. Core Audio also supports a music player programming interface that you can use to play MIDI data.

For more details about the capabilities of the MIDI protocol, see the MIDI Manufacturers Association site, www.midi.org.
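To give a feel for the data that flows from a MIDI device, the following sketch decodes a three-byte MIDI note message into its parts. It uses the standard MIDI status values (0x80 for Note Off, 0x90 for Note On) and the common convention that a Note On with velocity 0 means Note Off. The types and function name are hypothetical; this is not the Core MIDI interface.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { MIDI_NOTE_OFF = 0, MIDI_NOTE_ON = 1 } MidiNoteKind;

typedef struct {
    MidiNoteKind kind;
    uint8_t channel;  /* 0-15 */
    uint8_t note;     /* 0-127; 60 is middle C */
    uint8_t velocity; /* 0-127 */
} MidiNoteEvent;

/* Decode a 3-byte channel voice message. Returns 1 and fills `event`
   for Note On/Note Off messages, or 0 for anything else. */
int parse_midi_note(const uint8_t message[3], MidiNoteEvent *event)
{
    assert(message != NULL && event != NULL);
    uint8_t status = message[0] & 0xF0; /* upper nibble: message type */
    if (status != 0x80 && status != 0x90) {
        return 0; /* not a note message */
    }
    if ((message[1] & 0x80) || (message[2] & 0x80)) {
        return 0; /* data bytes must have the high bit clear */
    }
    event->channel  = message[0] & 0x0F; /* lower nibble: channel */
    event->note     = message[1];
    event->velocity = message[2];
    /* By convention, Note On with velocity 0 is treated as Note Off. */
    event->kind = (status == 0x90 && message[2] > 0) ? MIDI_NOTE_ON
                                                     : MIDI_NOTE_OFF;
    return 1;
}
```

An instrument unit would take events like these and start or stop generating the corresponding tone.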

The Audio MIDI Setup Application

When using audio in Mac OS X, both users and developers can use the Audio MIDI Setup application to configure audio and MIDI settings. You can use Audio MIDI Setup to:

Specify the default audio input and output devices.

Configure properties for input and output devices, such as the sampling rate and bit depth.

Map audio channels to available speakers (for stereo, 5.1 surround, and so on).

Create aggregate devices. (For information about aggregate devices, see “Using Aggregate Devices.”)

Configure MIDI networks and MIDI devices.

You can find the Audio MIDI Setup application in the /Applications/Utilities folder in Mac OS X v10.2 and later.

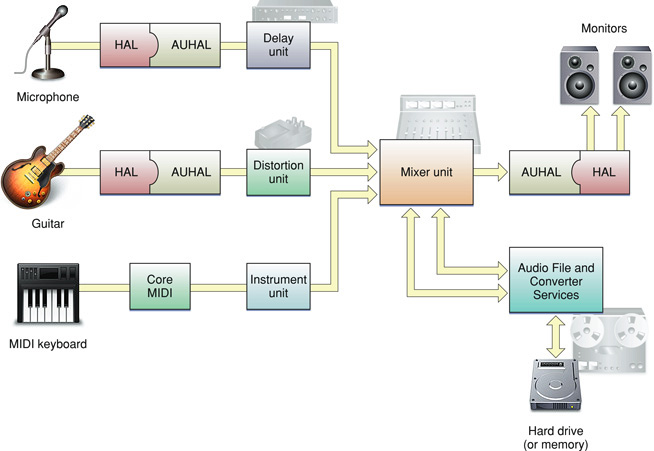

A Core Audio Recording Studio

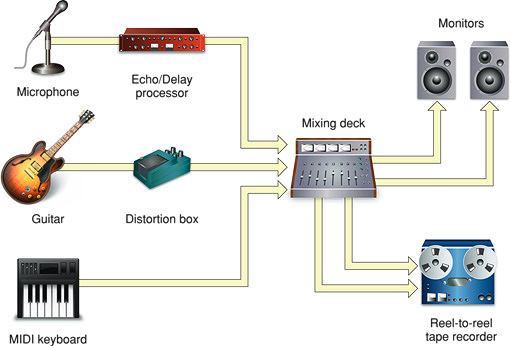

Although Core Audio encompasses much more than recording and playing back audio, it can be useful to compare its capabilities to elements in a recording studio. A simple hardware-based recording studio may have a few instruments with some effect units feeding into a mixing deck, along with audio from a MIDI keyboard, as shown in Figure 1-4. The mixer can output the signal to studio monitors as well as a recording device, such as a tape deck (or perhaps a hard drive).

Much of the hardware used in a studio can be replaced by software-based equivalents. Specialized music studio applications can record, synthesize, edit, mix, process, and play back audio. They can also record, edit, process, and play back MIDI data, interfacing with both hardware and software MIDI instruments. In Mac OS X, applications rely on Core Audio services to handle all of these tasks, as shown in Figure 1-5.

As you can see, audio units make up much of the signal chain. Other Core Audio interfaces provide application-level support, allowing applications to obtain audio or MIDI data in various formats and output it to files or output devices. “Core Audio Programming Interfaces” discusses the constituent interfaces of Core Audio in more detail.

However, Core Audio lets you do much more than mimic a recording studio on a computer. You can use Core Audio for everything from playing simple system sounds to creating compressed audio files to providing an immersive sonic experience for game players.

Development Using the Core Audio SDK

To assist audio developers, Apple supplies a software development kit (SDK) for Core Audio. The SDK contains many code samples covering both audio and MIDI services as well as diagnostic tools and test applications. Examples include:

A test application to interact with the global audio state of the system, including attached hardware devices (HALLab)

Host applications that load and manipulate audio units (AudioUnitHosting). Note that for the actual testing of audio units, you should use the AU Lab application mentioned in “Audio Units.”

Sample code to load and play audio files (PlayFile) and MIDI files (PlaySequence)

This document points to additional examples in the Core Audio SDK that illustrate how to accomplish common tasks.

The SDK also contains a C++ framework for building audio units. This framework reduces the amount of work you need to do by insulating you from the details of the Component Manager plug-in interface. The SDK also contains templates for common audio unit types; for the most part, you only need to override those methods that apply to your custom audio unit. Some sample audio unit projects show these templates and frameworks in use. For more details on using the framework and templates, see Audio Unit Programming Guide.

Note: Apple supplies the C++ framework as sample code to assist audio unit development. Feel free to modify the framework based on your needs.

The Core Audio SDK assumes you will use Xcode as your development environment.

You can download the latest SDK from http://developer.apple.com/sdk/. After installation, the SDK files are located in /Developer/Examples/CoreAudio.

© 2007 Apple Inc. All Rights Reserved. (Last updated: 2007-01-08)