About Video Digitizer Components

Video digitizer components convert video input into a digitized color image that is compatible with the graphics system of a computer. For example, a video digitizer may convert input analog video into a specified digital format. The input may be any video format and type, whereas the output must be intelligible to the computer’s display system. Once the digitizer has converted the input signal to an appropriate digital format, it then prepares the image for display by resizing the image, performing necessary color conversions, and clipping to the output window. At the end of this process, the digitizer component places the converted image into a buffer you specify. If that buffer is the current frame buffer, the image appears on the user’s computer screen.

In this section:

Analog-to-Digital Conversion

Types of Video Digitizer Components

Source Coordinate Systems

Using Video Digitizer Components

Controlling Compressed Source Devices

Analog-to-Digital Conversion

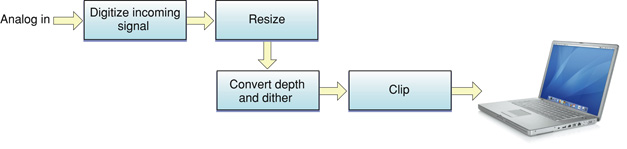

Figure 8-1 shows the steps involved in converting the analog video signal to digital format and preparing the digital data for display. Some video digitizer components perform all these steps in hardware. Others perform some or all of these steps in software. Others may perform only a few of these steps, in which case it is up to the program that is using the video digitizer to perform the remaining tasks.

Video digitizer components resize the image by applying a transformation matrix to the digitized image. Your application specifies the matrix that is applied to the image. Matrix operations can enlarge or shrink an image, distort the image, or move the location of an image. The Movie Toolbox provides a set of functions that make it easy for you to work with transformation matrices.
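The resize step can be pictured as an affine transformation applied to each pixel coordinate. The following C sketch does not use the Movie Toolbox's matrix functions. It uses a hypothetical `Matrix3x3` of doubles and assumes the row-vector convention [x y 1] × M, to show how a single matrix can both shrink an image and move it.

```c
/* Hypothetical 3x3 matrix type for illustration only; the Movie Toolbox
   provides its own fixed-point matrix record and helper functions. */
typedef struct {
    double m[3][3];
} Matrix3x3;

typedef struct {
    double x, y;
} Point2D;

/* Build a matrix that scales by (sx, sy) and then translates by (tx, ty). */
static Matrix3x3 make_scale_translate(double sx, double sy, double tx, double ty)
{
    Matrix3x3 mat = {{
        { sx,  0.0, 0.0 },
        { 0.0, sy,  0.0 },
        { tx,  ty,  1.0 }
    }};
    return mat;
}

/* Transform a point using the row-vector convention [x y 1] * M.
   For an affine matrix the third column is (0, 0, 1), so w stays 1. */
static Point2D transform_point(const Matrix3x3 *mat, Point2D p)
{
    Point2D out;
    out.x = p.x * mat->m[0][0] + p.y * mat->m[1][0] + mat->m[2][0];
    out.y = p.x * mat->m[0][1] + p.y * mat->m[1][1] + mat->m[2][1];
    return out;
}
```

With a scale of 0.5 and a translation of (10, 20), the source point (100, 40) maps to (60, 40).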

Before the digitized image can be displayed on your computer, the video digitizer component must convert the image into an appropriate color representation. This conversion may involve dithering or pixel depth conversion. The digitizer component handles this conversion based on the destination characteristics you specify.
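Pixel depth conversion can be as simple as discarding low-order bits. The sketch below assumes a 32-bit source pixel laid out as 0x00RRGGBB and a 16-bit destination with 5 bits per component (x-5-5-5). It truncates rather than dithers, which is a simplification; a digitizer may dither instead to reduce banding.

```c
#include <stdint.h>

/* Convert a 32-bit 0x00RRGGBB pixel to a 16-bit x-5-5-5 pixel by keeping the
   5 high-order bits of each 8-bit component. The unused top bit is left 0. */
static uint16_t rgb32_to_rgb555(uint32_t pixel)
{
    uint32_t r = (pixel >> 16) & 0xFF;
    uint32_t g = (pixel >> 8) & 0xFF;
    uint32_t b = pixel & 0xFF;
    return (uint16_t)(((r >> 3) << 10) | ((g >> 3) << 5) | (b >> 3));
}

/* Convert one row of pixels; a digitizer would repeat this per scan line. */
static void convert_row(const uint32_t *src, uint16_t *dst, int count)
{
    for (int i = 0; i < count; i++) {
        dst[i] = rgb32_to_rgb555(src[i]);
    }
}
```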

Video digitizer components may support clipping. Digitizers that do support clipping can display the resulting image in regions of arbitrary shapes. See Clipping for a discussion of the techniques that digitizer components can use to perform clipping.

Types of Video Digitizer Components

Video digitizer components fall into four categories, distinguished by their support for clipping a digitized video image:

basic digitizers, which do not support clipping

alpha channel digitizers, which clip by means of an alpha channel

mask plane digitizers, which clip by means of a mask plane

key color digitizers, which clip by means of key colors

Basic video digitizer components can place digitized video into memory, but they do not support graphics overlay or video blending. If you want to perform these operations, you must do so in your application. For example, you can stop the digitizer after each frame and blend the digitized video with a graphics image that is already being displayed. Unfortunately, this may cause jerkiness or discontinuity in the video stream. Digitizers that support clipping make this operation much easier for your application.

Alpha channel digitizer components use a portion of each display pixel to represent the blending of video and graphical image data. This part of each pixel is referred to as an alpha channel. The size of the alpha channel depends on the number of bits used to represent each pixel. In 32-bit-per-pixel modes, the alpha channel occupies the 8 high-order bits of each 32-bit pixel; these 8 bits can define up to 256 levels of blend. In 16-bit-per-pixel modes, the alpha channel occupies the single high-order bit of each pixel, so blending is simply on or off.
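The following C sketch shows how the two alpha channel layouts could drive a per-pixel blend. The direction of the alpha value is an assumption: a larger alpha (or a set high-order bit) is taken to mean "show video." The pixel layouts (0xAARRGGBB and 1-5-5-5) and the function names are also hypothetical.

```c
#include <stdint.h>

/* Blend one 8-bit component: alpha 255 gives all video, 0 gives all graphics. */
static uint32_t blend_component(uint32_t video, uint32_t graphics, uint32_t alpha)
{
    return (video * alpha + graphics * (255u - alpha)) / 255u;
}

/* 32-bit mode: the alpha value lives in the 8 high-order bits of the display
   (graphics) pixel, giving up to 256 blend levels. Returns 0x00RRGGBB. */
static uint32_t blend_pixel32(uint32_t graphics, uint32_t video)
{
    uint32_t alpha = graphics >> 24;
    uint32_t out = 0;
    for (int shift = 0; shift <= 16; shift += 8) {
        uint32_t v = (video >> shift) & 0xFF;
        uint32_t g = (graphics >> shift) & 0xFF;
        out |= blend_component(v, g, alpha) << shift;
    }
    return out;
}

/* 16-bit mode: only the high-order bit is available, so each pixel shows
   either video or graphics, with no intermediate levels. */
static uint16_t blend_pixel16(uint16_t graphics, uint16_t video)
{
    return (graphics & 0x8000u) ? (uint16_t)(video & 0x7FFFu)
                                : (uint16_t)(graphics & 0x7FFFu);
}
```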

Mask plane digitizer components use a pixel map to define blending. Values in this mask correspond to pixels on the screen, and they define the level of blend between video and graphical image data.
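A mask plane keeps the blend levels in a separate pixel map rather than inside each display pixel. This sketch assumes one 8-bit mask value per screen pixel (255 = all video, 0 = all graphics) and 8-bit grayscale image data to keep the arithmetic short; the buffer layout and the function name are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Blend video into graphics under control of a separate mask plane.
   mask[i] is the blend level for screen pixel i: 255 shows only video,
   0 shows only graphics, and values in between mix the two. */
static void blend_with_mask(const uint8_t *video, const uint8_t *graphics,
                            const uint8_t *mask, uint8_t *out, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        unsigned level = mask[i];
        out[i] = (uint8_t)((video[i] * level + graphics[i] * (255u - level)) / 255u);
    }
}
```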

Key color digitizer components determine where to display video data based upon the color currently being displayed on the output device. These digitizers reserve one or more colors in the color table; these colors define where to display video. For example, if blue is reserved as the key color, the digitizer replaces all blue pixels in the display rectangle with the corresponding pixels of video from the input video source.
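Key color replacement reduces to a per-pixel comparison. The sketch below assumes 8-bit indexed display pixels and a single reserved color-table index as the key; both are simplifying assumptions, since a digitizer may reserve several colors.

```c
#include <stddef.h>
#include <stdint.h>

/* Wherever the displayed pixel equals the reserved key index, show the
   corresponding video pixel; everywhere else, keep the graphics. */
static void apply_key_color(const uint8_t *display, const uint8_t *video,
                            uint8_t *out, size_t count, uint8_t key)
{
    for (size_t i = 0; i < count; i++) {
        out[i] = (display[i] == key) ? video[i] : display[i];
    }
}
```

With a key of 5, a display row of {1, 5, 5, 2} and a video row of {10, 11, 12, 13} produce {1, 11, 12, 2}.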

Source Coordinate Systems

Your application can control what part of the source video image is extracted. The digitizer then converts the specified portion of the source video signal into a digital format for your use. Video digitizer components define four areas you may need to manipulate when you define the source image for a given operation. These areas are

the maximum source rectangle

the active source rectangle

the vertical blanking rectangle

the digitizer rectangle

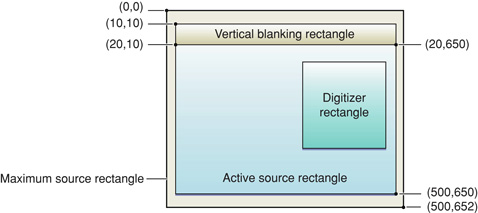

Figure 8-2 shows the relationships between these rectangles.

The maximum source rectangle defines the maximum source area that the digitizer component can grab. This rectangle usually encompasses both the vertical and horizontal blanking areas. The active source rectangle defines that portion of the maximum source rectangle that contains active video. The vertical blanking rectangle defines that portion of the input video signal that is devoted to vertical blanking. This rectangle occupies lines 10 through 19 of the input signal. Broadcast video sources may use this portion of the input signal for closed captioning, teletext, and other nonvideo information. Note that the vertical blanking rectangle might not be contained within the maximum source rectangle.

You specify the digitizer rectangle, which defines that portion of the active source rectangle that you want to capture and convert.
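Because the digitizer rectangle must lie within the active source rectangle, an application might clip its requested area before using it. The sketch uses a hypothetical `SourceRect` struct whose fields follow QuickDraw's top, left, bottom, right order; the coordinates in the test are arbitrary and are not values reported by any particular digitizer.

```c
/* Hypothetical rectangle type; fields follow QuickDraw's top/left/bottom/right order. */
typedef struct {
    short top, left, bottom, right;
} SourceRect;

static short max_short(short a, short b) { return a > b ? a : b; }
static short min_short(short a, short b) { return a < b ? a : b; }

/* Intersect the requested digitizer rectangle with the active source
   rectangle. Returns 1 and fills *result if the overlap is non-empty,
   otherwise returns 0 and leaves *result unchanged. */
static int clip_to_active(const SourceRect *requested, const SourceRect *active,
                          SourceRect *result)
{
    SourceRect r;
    r.top = max_short(requested->top, active->top);
    r.left = max_short(requested->left, active->left);
    r.bottom = min_short(requested->bottom, active->bottom);
    r.right = min_short(requested->right, active->right);
    if (r.top >= r.bottom || r.left >= r.right) {
        return 0;
    }
    *result = r;
    return 1;
}
```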

Using Video Digitizer Components

This section describes how you can control a video digitizer component. It is divided into the following topics:

Specifying Destinations discusses how you tell the digitizer where to put the converted video data.

Starting and Stopping the Digitizer discusses how you control digitization.

Multiple Buffering describes a technique for improving performance.

Obtaining an Accurate Time of Frame Capture tells how the sequence grabber usually supplies video digitizers with a time base. This time base lets your application get an accurate time for the capture of any specified frame.

Specifying Destinations

Video digitizer components provide several functions that allow applications to specify the destination for the digitized video stream produced by the digitizer component. You have two options for specifying the destination for the video data stream in your application.

The first option requires that the video be digitized as RGB pixels and placed into a destination pixel map. This option allows the video to be placed either onscreen or offscreen, depending upon the placement of the pixel map. Your application can use the VDSetPlayThruDestination function to set the characteristics for this option and the VDPreflightDestination function to determine the capabilities of the digitizer. All video digitizer components must support this option.

The second option uses a global boundary rectangle to define the destination for the video. This option always results in onscreen images and is useful with digitizers that support hardware direct memory access (DMA) across multiple screens. The digitizer component is responsible for any required color depth conversions, image clipping and resizing, and so on. Your application can use the VDSetPlayThruGlobalRect function to set the characteristics for this option and the VDPreflightGlobalRect function to determine the capabilities of the digitizer. Not all video digitizer components support this option.
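The choice between the two destination options can be expressed as a small decision. This sketch encodes one possible policy, which is an assumption rather than a QuickTime recommendation. The `preflight_global_rect_ok` flag is a hypothetical stand-in for the outcome of VDPreflightGlobalRect, and the enum and function names are illustrative, not part of the QuickTime API.

```c
/* Hypothetical labels for the two destination options described above. */
typedef enum {
    DEST_PIXEL_MAP,   /* configured with VDSetPlayThruDestination; always supported */
    DEST_GLOBAL_RECT  /* configured with VDSetPlayThruGlobalRect; optional */
} DestinationOption;

/* Use the global-rectangle option only when the image should go onscreen
   and the preflight check reported that the digitizer can handle it;
   otherwise fall back to the pixel-map option, which every digitizer supports. */
static DestinationOption choose_destination(int want_onscreen,
                                            int preflight_global_rect_ok)
{
    if (want_onscreen && preflight_global_rect_ok) {
        return DEST_GLOBAL_RECT;
    }
    return DEST_PIXEL_MAP;
}
```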

Setting Video Destinations

Video digitizer components provide several functions that allow applications to specify the destination for the digitized video stream produced by the digitizer component. Applications have two options for specifying the destination for the video data stream:

The first option requires that the video be digitized as RGB pixels and placed into a destination pixel map. This option allows the video to be placed either onscreen or offscreen, depending upon the placement of the pixel map. You can use the VDSetPlayThruDestination function in your application to set the characteristics for this option. The VDPreflightDestination function lets you determine the capabilities of the digitizer in your application. All video digitizer components must support this option. The VDGetPlayThruDestination function lets you get data about the current video destination.

The second option uses a global boundary rectangle to define the destination for the video. This option is useful only with digitizers that support hardware DMA. You can use the VDSetPlayThruGlobalRect function in your application to set the characteristics for this option and the VDPreflightGlobalRect function to determine the capabilities of the digitizer. Not all video digitizer components support this option.

The VDGetMaxAuxBuffer function returns information about a buffer that may be located on some special hardware.

Starting and Stopping the Digitizer

You can control digitization on a frame-by-frame basis in your application. The VDGrabOneFrame function digitizes a single video frame. All video digitizer components support this function.

Alternatively, you can use the VDSetPlayThruOnOff function to enable or disable digitization. When digitization is enabled, the video digitizer component places video into the specified destination continuously. The application stops the digitizer by disabling digitization. This function can be used with both destination options. However, not all video digitizer components support this function.

Controlling Digitization

This section describes the video digitizer component functions that allow applications to control video digitization.

Video digitizer components allow applications to start and stop the digitizing process. Your application can request continuous digitization or single-frame digitization. When a digitizer component is operating continuously, it automatically places successive frames of digitized video into the specified destination. When a digitizer component works with a single frame at a time, the application and other software, such as an image compressor component, control the speed at which the digitized video is processed.

You can use the VDSetPlayThruOnOff function in your application to enable or disable digitization. When digitization is enabled, the video digitizer component places digitized video frames into the specified destination continuously. The application stops the digitizer by disabling digitization. This function can be used with both destination options.

Alternatively, your application can control digitization on a frame-by-frame basis. The VDGrabOneFrame and VDGrabOneFrameAsync functions digitize a single video frame; VDGrabOneFrame works synchronously, returning control to your application when it has obtained a complete frame, while VDGrabOneFrameAsync works asynchronously. The VDDone function helps you to determine when the VDGrabOneFrameAsync function is finished with a video frame. Your application can define the buffers for use with asynchronous digitization by calling the VDSetupBuffers function. Free the buffers by calling the VDReleaseAsyncBuffers function.
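The asynchronous pattern is: start a grab, do other work, and poll until the frame is finished. The C sketch below simulates that flow with a hypothetical `FakeDigitizer` that completes a frame after a fixed number of polls. In a real application, the start and poll steps would be VDGrabOneFrameAsync and VDDone; their exact parameters are not reproduced here.

```c
/* Hypothetical simulated digitizer: a grab completes after polls_needed polls. */
typedef struct {
    int busy_buffer;    /* buffer currently being filled, or -1 when idle */
    int polls_left;     /* polls remaining before the current grab completes */
    int polls_needed;   /* simulated grab duration, in polls */
} FakeDigitizer;

/* Stand-in for starting an asynchronous grab into the given buffer. */
static int fake_grab_async(FakeDigitizer *d, int buffer)
{
    if (d->busy_buffer != -1) {
        return -1;      /* a grab is already in progress */
    }
    d->busy_buffer = buffer;
    d->polls_left = d->polls_needed;
    return 0;
}

/* Stand-in for polling whether the grab into the given buffer is done. */
static int fake_done(FakeDigitizer *d, int buffer)
{
    if (d->busy_buffer != buffer) {
        return 1;       /* this buffer is not being filled */
    }
    if (d->polls_left > 0) {
        d->polls_left--;
        return 0;
    }
    d->busy_buffer = -1;
    return 1;
}

/* Grab one frame asynchronously, doing "other work" while waiting.
   Returns how many units of other work fit in before the frame was ready,
   or -1 if the grab could not be started. */
static int grab_with_polling(FakeDigitizer *d, int buffer)
{
    int work_done = 0;
    if (fake_grab_async(d, buffer) != 0) {
        return -1;
    }
    while (!fake_done(d, buffer)) {
        work_done++;    /* an application would handle events or data here */
    }
    return work_done;
}
```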

The VDSetFrameRate function allows applications to control the digitizer’s frame rate. The VDGetDataRate function returns the digitizer’s current data rate.
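Frame rate and data rate are linked by simple arithmetic. Assuming uncompressed frames of a fixed size (an assumption; VDGetDataRate reports the digitizer's own figures), the hypothetical helpers below estimate bytes per second and the time budget per frame.

```c
#include <stdint.h>

/* Bytes in one uncompressed frame. */
static int64_t frame_bytes(int width, int height, int bytes_per_pixel)
{
    return (int64_t)width * height * bytes_per_pixel;
}

/* Estimated data rate for uncompressed video at a whole-number frame rate. */
static int64_t bytes_per_second(int width, int height, int bytes_per_pixel,
                                int frames_per_second)
{
    return frame_bytes(width, height, bytes_per_pixel) * frames_per_second;
}

/* Time budget per frame, truncated to whole milliseconds. */
static int ms_per_frame(int frames_per_second)
{
    return 1000 / frames_per_second;
}
```

For a 320 by 240 frame at 2 bytes per pixel and 15 frames per second, each frame is 153,600 bytes, the data rate is 2,304,000 bytes per second, and each frame has a budget of 66 ms (truncated).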

Multiple Buffering

You can improve the performance of frame-by-frame digitization by using multiple destination buffers for the digitized video. Your application defines a number of destination buffers to the video digitizer component and specifies the order in which those buffers are to be used. The digitizer component then fills the buffers, allowing you to switch between the buffers more quickly than your application otherwise could. In this manner, you can grab a video sequence at a higher rate with less chance of data loss. This technique can be used with both destination options.

You define the buffers to the digitizer by calling the VDSetupBuffers function. The VDGrabOneFrameAsync function starts the process of grabbing a single video frame. The VDDone function allows you to determine when the digitizer component has finished a given frame.
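Multiple buffering amounts to using a ring of buffers in a fixed order: while the digitizer fills one buffer, the application works on a buffer that was filled earlier. The sketch below models only that ordering logic with a hypothetical `BufferRing`. In practice the buffers would be registered with VDSetupBuffers, each grab started with VDGrabOneFrameAsync, and completion confirmed with VDDone.

```c
#define RING_SIZE 3

/* Hypothetical bookkeeping for buffers used in a fixed rotation. */
typedef struct {
    int next_fill;      /* buffer the digitizer should fill next */
    int next_process;   /* oldest buffer not yet handed to the application */
    int in_use;         /* buffers being filled or waiting to be processed */
} BufferRing;

/* Pick the buffer for the next grab, or -1 if every buffer still holds
   unprocessed data (grabbing now would overwrite a frame). */
static int ring_begin_fill(BufferRing *r)
{
    if (r->in_use == RING_SIZE) {
        return -1;
    }
    int index = r->next_fill;
    r->next_fill = (r->next_fill + 1) % RING_SIZE;
    r->in_use++;
    return index;
}

/* Hand the oldest buffer to the application, or -1 if none is pending.
   The caller is assumed to have confirmed that the grab has finished. */
static int ring_take_filled(BufferRing *r)
{
    if (r->in_use == 0) {
        return -1;
    }
    int index = r->next_process;
    r->next_process = (r->next_process + 1) % RING_SIZE;
    r->in_use--;
    return index;
}
```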

Obtaining an Accurate Time of Frame Capture

The sequence grabber typically gives video digitizers a time base so your application can obtain an accurate time for the capture of any given frame. Applications can set the digitizer’s time base by calling the VDSetTimeBase function.
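A time base expresses times as a value counted in units of a time scale, so converting a frame's capture time to real time is a division. The sketch assumes each captured frame is stamped with a (value, scale) pair; the `FrameStamp` struct is hypothetical and does not reproduce QuickTime's own time record.

```c
#include <stdint.h>

/* Hypothetical capture timestamp: a time value in units of 1/scale seconds. */
typedef struct {
    int64_t value;
    int32_t scale;   /* units per second, for example 600 */
} FrameStamp;

/* Convert a stamp to whole milliseconds (truncated). */
static int64_t stamp_to_ms(FrameStamp s)
{
    return s.value * 1000 / s.scale;
}

/* Interval between two captures that share the same time scale,
   in whole milliseconds (truncated). */
static int64_t interval_ms(FrameStamp earlier, FrameStamp later)
{
    return (later.value - earlier.value) * 1000 / later.scale;
}
```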

Controlling Compressed Source Devices

Some video digitizer components may provide functions that allow applications to work with digitizing devices that can provide compressed image data directly. Such devices allow applications to retrieve compressed image data without using the Image Compression Manager. However, in order to display images from the compressed data stream, there must be an appropriate decompressor component available to decompress the image data.

Video digitizers that can support compressed source devices set the digiOutDoesCompress flag to 1 in their capability flags. See Capability Flags for more information about these flags.

Applications can use the VDGetCompressionTypes function to determine the image-compression capabilities of a video digitizer. The VDSetCompression function allows applications to set some parameters that govern image compression.

Applications control digitization by calling the VDCompressOneFrameAsync function, which instructs the video digitizer to create one frame of compressed image data. The VDCompressDone function returns that frame. When an application is done with a frame, it calls the VDReleaseCompressBuffer function to free the buffer. An application can force the digitizer to place a key frame into the sequence by calling the VDResetCompressSequence function. Applications can turn compression on and off by calling VDSetCompressionOnOff.
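Forcing a key frame changes only how the next frame is encoded. The sketch simulates a compressed sequence that emits a key frame at a fixed interval and whenever one is requested. `CompressSequence`, its interval, and `request_key_frame` are hypothetical. The request plays the role of VDResetCompressSequence, and the interval stands in for whatever policy a given digitizer applies.

```c
/* Hypothetical compressed-sequence state. */
typedef struct {
    int key_interval;   /* emit a key frame at least this often */
    int since_key;      /* difference frames since the last key frame */
    int force_key;      /* set when the application requests a key frame */
} CompressSequence;

/* Stand-in for requesting that the next frame be a key frame. */
static void request_key_frame(CompressSequence *s)
{
    s->force_key = 1;
}

/* Decide how the next frame is encoded: returns 1 for a key frame,
   0 for a difference frame. */
static int next_frame_is_key(CompressSequence *s)
{
    if (s->force_key || s->since_key + 1 >= s->key_interval) {
        s->force_key = 0;
        s->since_key = 0;
        return 1;
    }
    s->since_key++;
    return 0;
}
```

Starting with `force_key` set makes the first frame a key frame, as a decoder needs.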

Applications can obtain the digitizer’s image description structure by calling the VDGetImageDescription function. Applications can set the digitizer’s time base by calling the VDSetTimeBase function.

All of the digitizing functions described in this section support only asynchronous digitization. That is, the video digitizer works independently to digitize each frame. Applications are free to perform other work while the digitizer works on each frame.

The video digitizer component manages its own buffer pool for use with these functions. In this respect, these functions differ from the other video digitizer functions that support asynchronous digitization. See Controlling Digitization for more information about these functions.
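Because the digitizer owns the buffers used for compressed frames, an application that does not release them eventually leaves the digitizer with nowhere to put new frames. The sketch models a small digitizer-owned pool; `CompressPool`, `pool_acquire`, and `pool_release` are hypothetical, with `pool_release` standing in for VDReleaseCompressBuffer.

```c
#define POOL_SIZE 2

/* Hypothetical pool of buffers owned by the digitizer. */
typedef struct {
    int in_use[POOL_SIZE];   /* 1 while the application holds the buffer */
} CompressPool;

/* The digitizer takes a free buffer for the next compressed frame.
   Returns its index, or -1 if the application still holds every buffer. */
static int pool_acquire(CompressPool *p)
{
    for (int i = 0; i < POOL_SIZE; i++) {
        if (!p->in_use[i]) {
            p->in_use[i] = 1;
            return i;
        }
    }
    return -1;
}

/* The application returns a buffer once it has finished with the frame. */
static void pool_release(CompressPool *p, int index)
{
    if (index >= 0 && index < POOL_SIZE) {
        p->in_use[index] = 0;
    }
}
```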

© 2005, 2007 Apple Inc. All Rights Reserved. (Last updated: 2007-01-08)