Types of Security Vulnerabilities

Most software security vulnerabilities fall into one of a small set of categories: buffer overflows, unvalidated input, race conditions, access-control problems, and weaknesses in authentication, authorization, or cryptographic practices. The nature of each of these types of vulnerability is described in this article. Other articles in this document go into more detail and provide specific advice, including sample code, to help you avoid these vulnerabilities. In addition, because the most unassailable locks in the world won't protect a building if someone leaves the door open, some consideration is given here and elsewhere in this document to application interfaces that help the user make informed choices that enhance security and reduce the risk of social engineering attacks.

Contents:

Buffer Overflows

Unvalidated Input

Race Conditions

Insecure File Operations

Access Control Problems

Secure Storage and Encryption

Social Engineering

Buffer Overflows

Books on software security invariably mention buffer overflows as a major source of vulnerabilities. Exact numbers are hard to come by, but as an indication, approximately 20% of the published exploits reported by the United States Computer Emergency Readiness Team (US-CERT) for 2004 involved buffer overflows. Buffer overflows can damage programs or compromise data, even when a program is running with ordinary privileges. Any programmer can inadvertently create buffer overflows in their code. For example, buffer overflows have been found in many open-source programs and in every major operating system. This section explains what a buffer overflow is and (in general terms) how it is exploited. See “Avoiding Buffer Overflows” for detailed information on how to find and avoid buffer overflows.

Any application or system software that has a user interface (whether graphical or command line) has to store the user's input, at least temporarily. For example, if an application displays a dialog requesting a filename, it has to store that filename at least long enough to pass it on to whatever function it calls to open or create a file with that name. In every computer operating system, including Mac OS X and iPhone OS, the computer's random access memory (RAM) is used to store such data. The memory used for this purpose is commonly organized in one of two ways. For highly efficient storage of limited amounts of data, the data is put into a region of memory known as the stack. Data on the stack is read (and automatically removed from memory) in the reverse of the order in which it was put in (last in, first out, or LIFO). For storage of larger amounts of data than can be kept on the stack, the data is put into a region known as the heap. Data can be read from the heap in any order and can be retained so that it can be read and modified any number of times.

Stack Overflows

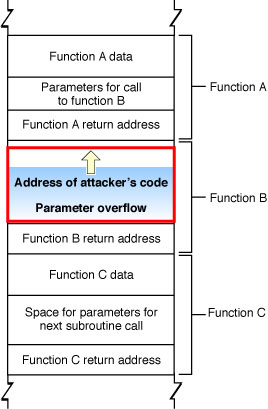

Examples of data commonly put on the stack are the return address and parameter values used to call a function. The stack is used for this data because it is efficient and because nested function calls lend themselves well to a LIFO storage approach. That is, each function can call another function, which in turn can call another, and the first return address needed is that stored by the last function executed. For example, when function A calls function B, the address to which function B should return control when finished is put onto the stack along with all the parameter values needed by function B. When function B calls function C, the return address and parameter values for function C to use are put onto the stack. In this case, the last values put in (those needed by function C) are the first used, followed by those needed by function B, followed by those needed by function A. Figure 1 illustrates the organization of the stack. Note that this figure is schematic only; the actual content and order of data put on the stack depend on the architecture of the CPU being used. See Mac OS X ABI Function Call Guide for descriptions of the function-calling conventions used in all the architectures supported by Mac OS X.

Although a program should check the data input by the user to make sure it is appropriate for the purpose intended (for example, to make sure that a filename does not include illegal characters and does not exceed the legal length for filenames), frequently the programmer does not bother. The programmer assumes that the user will not do anything unreasonable. Unfortunately, a malicious user might type in data that is longer than the space the function has reserved for it. Because the function reserves only a limited amount of space on the stack for this data, the excess data overwrites other data on the stack. As you can see from Figure 2, a clever attacker can use this technique to overwrite the return address used by the function, substituting the address of the attacker's own code. Then, when function C completes execution, rather than returning to function B, it jumps to the attacker's code. Because the user's program executes the attacker's code, the attacker's code inherits the user's permissions. If the user is logged on as an administrator (the default configuration in Mac OS X), the attacker can take complete control of the computer, reading data from the disk, sending emails, and so forth. (In iPhone OS, applications are much more restricted in their privileges and are unlikely to be able to take complete control of the device.)
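The length check described above can be sketched in C as follows. This is a minimal illustration and not code from this document: the function name copy_filename and the limit FILENAME_CAPACITY are hypothetical, and a real program would use whatever limit applies to its own buffers.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical capacity chosen for this sketch only. */
#define FILENAME_CAPACITY 32

/* Copies src into the fixed-size buffer dst only if the whole string,
   including its terminating NUL, fits. On failure dst is left as an
   empty string and nothing is written past the end of the buffer. */
bool copy_filename(char dst[FILENAME_CAPACITY], const char *src)
{
    dst[0] = '\0';
    if (src == NULL) {
        return false;
    }

    /* Measure the input without reading more than FILENAME_CAPACITY bytes. */
    size_t length = 0;
    while (length < FILENAME_CAPACITY && src[length] != '\0') {
        length++;
    }
    if (length == FILENAME_CAPACITY) {
        return false;   /* too long: reject instead of overflowing */
    }

    memcpy(dst, src, length + 1);   /* length + 1 copies the NUL as well */
    return true;
}
```

A caller would declare the buffer with the same capacity (char name[FILENAME_CAPACITY];) and treat a false return as invalid input rather than trying to continue with a truncated name.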

Heap Overflows

Because the heap is used to store data but is not used to store the return address value of functions and methods, it is less obvious how an attacker can exploit a buffer overflow on the heap. To some extent, it is this nonobviousness that makes heap overflows an attractive target—programmers are less likely to worry about them and defend against them than they are for stack overflows.

There are two ways in which heap overflows are exploited: First, the attacker tries to find other data on the heap worth overwriting. Much of the data on the heap is generated internally by the program rather than copied from user input. For example, an attacker who hacks into a phone company’s billing system might be able to set a flag indicating that a bill has been paid. Or, by overwriting a temporarily stored user name, an attacker might be able to log into a program with administrator privileges. This is known as a non-control-data attack.

Second, objects allocated on the heap in many languages, such as C++ and Objective-C, include tables of function pointers. By exploiting a buffer overflow to change such a pointer, an attacker might be able to substitute their own data or to execute their own routine.
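To make the non-control-data case concrete, the following C sketch shows a heap-allocated record in which a fixed-size name buffer sits directly before a privilege flag, together with a setter that refuses input that would not fit. The struct session type, its fields, and both function names are hypothetical and exist only for this illustration.

```c
#include <stdbool.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical record: an unchecked copy into user_name that runs past
   its 16 bytes would spill into is_admin, which follows it in memory. */
struct session {
    char user_name[16];
    int  is_admin;
};

/* Allocates a zeroed session on the heap (is_admin starts at 0). */
struct session *session_create(void)
{
    return calloc(1, sizeof(struct session));
}

/* Stores name only if it fits in user_name, NUL included.
   snprintf never writes more than the size it is given and reports
   the length the full string would have needed. */
bool session_set_user_name(struct session *s, const char *name)
{
    if (s == NULL || name == NULL) {
        return false;
    }
    int needed = snprintf(s->user_name, sizeof s->user_name, "%s", name);
    if (needed < 0 || (size_t)needed >= sizeof s->user_name) {
        s->user_name[0] = '\0';   /* discard the truncated copy */
        return false;
    }
    return true;
}
```

The point of the sketch is that the bound comes from the destination (sizeof s->user_name), never from the length of the attacker-supplied string.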

Exploiting a buffer overflow on the heap might be a complex, arcane problem to solve, but crackers thrive on just such challenges. For example:

A heap overflow in code for decoding a bitmap image allowed remote attackers to execute arbitrary code. [CVE-2006-0006]

A heap overflow vulnerability in a networking server allowed an attacker to execute arbitrary code by sending an HTTP POST request with a negative “Content-Length” header. [CVE-2005-3655]
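The negative “Content-Length” case suggests one defensive pattern: parse a length received from the network as untrusted text and reject anything that is not a plain, bounded, non-negative number before it is used to size a buffer. The sketch below is an assumption-laden illustration, not the fix applied to either of the vulnerabilities cited above; parse_content_length and MAX_BODY_BYTES are hypothetical names.

```c
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical upper bound on an acceptable request body (1 MiB). */
#define MAX_BODY_BYTES (1024L * 1024L)

/* Converts the text of a length header to a size. Returns false for
   empty input, signs, leading whitespace, trailing junk, overflow, or
   values above MAX_BODY_BYTES; *out_length is written only on success. */
bool parse_content_length(const char *text, size_t *out_length)
{
    if (text == NULL || out_length == NULL) {
        return false;
    }
    /* Requiring a leading digit rejects "-1", "+5", " 7", and "". */
    if (text[0] < '0' || text[0] > '9') {
        return false;
    }

    errno = 0;
    char *end = NULL;
    long value = strtol(text, &end, 10);
    if (errno == ERANGE || *end != '\0') {
        return false;   /* out of range for long, or trailing characters */
    }
    if (value < 0 || value > MAX_BODY_BYTES) {
        return false;
    }

    *out_length = (size_t)value;
    return true;
}
```

Only after this function returns true would the caller allocate or index a buffer using the parsed length.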

Unvalidated Input

Any input received by your program from an untrusted source is a potential target for attack. In this context, an ordinary user is an untrusted source. Examples of input from an untrusted source include (but are not restricted to):

text input fields

audio, video, or graphics files provided by users or other processes and read by the program

command line input

Hackers look at every source of input to the program and attempt to pass in malformed data of every type they can imagine. If the program crashes or otherwise misbehaves, the hacker then tries to find a way to exploit the problem. Unvalidated-input exploits have been used to take control of operating systems, steal data, corrupt users’ disks, and more. One such exploit was used to “jailbreak” iPhones.

Types of input-validation vulnerabilities, and what to do about them, are discussed in “Validating Input.”
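As a small illustration of validating input before use, the following C sketch accepts a filename only if every character comes from an explicit list of allowed characters. The policy shown (the allowed set, the length limit, and the rule against a leading dot) is an assumption made for this example, as are the names is_acceptable_filename and MAX_NAME_LENGTH; an application should choose rules that match its own requirements.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical limit for this sketch. */
#define MAX_NAME_LENGTH 64

/* Returns true only for a non-empty name of at most MAX_NAME_LENGTH
   characters that does not start with '.' and consists solely of
   characters on the allow list. Path separators, spaces, and control
   characters are rejected because they are not on the list. */
bool is_acceptable_filename(const char *name)
{
    static const char allowed[] =
        "abcdefghijklmnopqrstuvwxyz"
        "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
        "0123456789._-";

    if (name == NULL) {
        return false;
    }
    size_t length = strlen(name);
    if (length == 0 || length > MAX_NAME_LENGTH) {
        return false;
    }
    if (name[0] == '.') {
        return false;   /* rejects ".", "..", and hidden files */
    }
    /* strspn returns the length of the leading run of allowed characters;
       the name is valid only if that run covers the whole string. */
    return strspn(name, allowed) == length;
}
```

Listing what is allowed, rather than trying to enumerate what is forbidden, means that unexpected input is rejected by default.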

Race Conditions

A race condition exists when two events may occur out of sequence. If the correct sequence is required for the proper functioning of the program, this results in a bug. If an attacker can cause the correct sequence not to happen and take advantage of the situation to insert malicious code, change a filename, or otherwise interfere with the normal operation of the program, the race condition is a security vulnerability. Attackers can sometimes take advantage of small time gaps in the processing of code to interfere with the sequence of operations, which they then exploit.

Mac OS X, like all modern operating systems, is multitasking: it assigns different chores to different processes and switches among those processes to make the most efficient use of the processor and to allow two or more applications, and any number of background programs (servers or daemons), to appear to run simultaneously. (In fact, if the computer has two or more processors, more than one process can truly be running simultaneously.) The advantages to the user are many and mostly obvious; the disadvantage, however, is that there is no guarantee that two sequential operations will be performed without any other operations being performed in between. In fact, when two processes are using the same resource (such as the same file), there is no guarantee that they will access that resource in any particular order.

For example, if you were to open a file and then read from it, even though your application did nothing else between these two operations, some other process might have control of the computer after the file was opened and before it was read. If two different processes (in the same or different applications) were writing to the same file, there would be no way to know which one would write first and which would overwrite the data written by the other. Such situations open security vulnerabilities.

There are two basic types of race condition that can be exploited: time of check–time of use (TOCTOU), and interprocess communication.

Time of Check–Time of Use

Commonly, an application checks some condition before undertaking an action. For example, it might check to see if a file exists before writing to it, or whether the user has access rights to read a file before opening it for reading. Because there is a time gap between the check and the use (even though it might be a fraction of a second), an attacker can sometimes use that gap to mount an attack.

A classic example is the case where an application checks for the existence of a temporary file before writing data to it. If such a file exists, the application deletes it or chooses a new name for the temporary file to avoid conflict. If the file does not exist, the application opens the file for writing, because the system routine that opens a file for writing automatically creates a new file if none exists. An attacker, by continuously running a program that creates a new temporary file with the appropriate name, can (with a little persistence and some luck) create the file in the gap between when the application checked to make sure the temporary file didn't exist and when it opens it for writing. The application then opens the attacker's file and writes to it (remember, the system routine opens an existing file if there is one, and creates a new file only if there is no existing file). The attacker's file might have different access permissions than the application's temporary file, so the attacker can then read the contents. Or, the attacker can write a file that is a symbolic link to some other file, either one owned by the attacker or an existing system file. In one version of this attack, the file chosen is the system password file, so that after the attack, the system passwords have been corrupted to the point that no one, even the system administrator, can log on.
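One common way to close the gap in the temporary-file example is to let the operating system perform the check and the creation as a single step. The C sketch below assumes a POSIX system; the wrapper names create_private_file and create_unique_temp_file are hypothetical, while open with O_CREAT | O_EXCL and mkstemp are standard POSIX calls.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Creates path for writing, readable and writable by the owner only.
   O_CREAT together with O_EXCL makes open() fail if anything, including
   a symbolic link planted by an attacker, already exists at path, so
   there is no separate "does it exist?" check to race against.
   Returns a file descriptor, or -1 on failure. */
int create_private_file(const char *path)
{
    return open(path, O_WRONLY | O_CREAT | O_EXCL, S_IRUSR | S_IWUSR);
}

/* Lets the system pick an unused name. name_template must be a writable
   string ending in "XXXXXX"; mkstemp() replaces those characters and
   creates and opens the file in one step. Returns a file descriptor,
   or -1 on failure. */
int create_unique_temp_file(char *name_template)
{
    return mkstemp(name_template);
}
```

With either function, a failure means the program should stop or pick another name; it should never fall back to opening whatever file is already there.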

Interprocess Communication

Separate processes (either within a single program or in two different programs) sometimes have to share information. Common methods include using shared memory or using some messaging protocol, such as sockets, provided by the operating system.

Some messaging protocols used for interprocess communication are also vulnerable to attack. A common programming error, for example, is to make non-atomic function calls from within a signal handler. A signal, in this context, is a message sent from one process to another in a UNIX-based operating system (such as Mac OS X). Any program that needs to receive signals contains code called a signal handler. A non-atomic function is one that does not necessarily complete operation before being interrupted by the operating system (which might switch to another process before returning to complete execution of the function call). There are very few operations that are safe for a signal handler to do, and most function calls are not among them. The reason is that an attacker can create a race condition by running a routine that operates on the same files or memory locations as the non-atomic function calls in the signal handler. Because the operating system switches rapidly among all the processes running simultaneously on the system, it is possible for the attacker's code to run after the signal handler has opened the file or memory location for writing or reading, but before the signal handler has completed running. Then the actual value read or stored in that location has been set by the attacker, not by the signal handler.

At a minimum, such a signal handler race condition can be used to disrupt the operation of a program. However, in certain circumstances, an attacker can make the operation of the system unpredictable, or cause the attacker's own code to be executed to wreak even more havoc.

In 2004, a race condition of this sort was reported in open-source code present in many UNIX-based operating systems; it made it possible for a remote attacker to execute arbitrary code or to stop FTP from working. [CVE-2004-0794]
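A widely used way to keep a signal handler within the small set of safe operations is to have it do nothing except record that the signal arrived, and to do the real work later in ordinary code. The following C sketch assumes a POSIX system and uses hypothetical names (shutdown_requested, install_shutdown_handler, shutdown_was_requested); it is one possible arrangement, not the only one.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <stdbool.h>
#include <string.h>

/* volatile sig_atomic_t is the type the C standard allows a signal
   handler to write safely. */
static volatile sig_atomic_t shutdown_requested = 0;

/* The handler only sets a flag: no file access, no memory allocation,
   no library calls that could be interrupted halfway through. */
static void handle_sigterm(int signal_number)
{
    (void)signal_number;
    shutdown_requested = 1;
}

/* Installs the handler for SIGTERM. Returns true on success. */
bool install_shutdown_handler(void)
{
    struct sigaction action;
    memset(&action, 0, sizeof action);
    action.sa_handler = handle_sigterm;
    sigemptyset(&action.sa_mask);
    return sigaction(SIGTERM, &action, NULL) == 0;
}

/* Called from the program's main loop, where it is safe to close
   files, free memory, and so on in response to the request. */
bool shutdown_was_requested(void)
{
    return shutdown_requested != 0;
}
```

The main loop would poll shutdown_was_requested() between units of work and perform cleanup there, outside the handler.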

For more information on race conditions and how to avoid them, see “Avoiding Race Conditions and Insecure File Operations.”

Insecure File Operations

In addition to time of check–time of use problems, many other file operations are insecure. Programmers often make assumptions about the ownership, location, or attributes of a file that might not be true. For example, you might assume that you can always write to a file created by your program. However, if an attacker can change the permissions or flags on that file after you create it, and if you fail to check the result code after a write operation, you will not detect the fact that the file has been tampered with.

Examples of insecure file operations include:

writing to or reading from a file in a location writable by another user

failing to make the right checks for file type, device ID, links, and other settings before using a file

failing to check the result code after a file operation

assuming that if a file has a local pathname, it has to be a local file
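Two of the checks in the list above can be sketched in C for a POSIX system: examining the file that was actually opened (rather than the pathname) and checking the result of every write. The function names open_own_regular_file and write_all are hypothetical, and the particular policy (regular file, owned by the current effective user, no symbolic link as the final path component) is an assumption made for this example.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stdbool.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Opens an existing file for appending and then checks the open file
   itself with fstat(), so the answer cannot change between the check
   and the use. O_NOFOLLOW makes open() fail if the last path component
   is a symbolic link. Returns a file descriptor, or -1 on failure. */
int open_own_regular_file(const char *path)
{
    int fd = open(path, O_WRONLY | O_APPEND | O_NOFOLLOW);
    if (fd < 0) {
        return -1;
    }
    struct stat info;
    if (fstat(fd, &info) != 0 ||
        !S_ISREG(info.st_mode) ||        /* not a device, FIFO, or directory */
        info.st_uid != geteuid()) {      /* not owned by this user */
        close(fd);
        return -1;
    }
    return fd;
}

/* Writes all length bytes, retrying after partial writes and EINTR.
   Returns false as soon as write() reports any other error. */
bool write_all(int fd, const void *data, size_t length)
{
    const char *cursor = data;
    while (length > 0) {
        ssize_t written = write(fd, cursor, length);
        if (written < 0) {
            if (errno == EINTR) {
                continue;
            }
            return false;
        }
        cursor += written;
        length -= (size_t)written;
    }
    return true;
}
```

A false return from write_all is the signal, mentioned earlier in this section, that something about the file may have changed since it was created.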

These and other insecure file operations are discussed in more detail in “Insecure File Operations.”

Access Control Problems

Access control is the process of controlling who is allowed to do what. This ranges from controlling physical access to a computer (keeping your servers in a locked room, for example) to specifying who has access to a resource (a file, for example) and what they are allowed to do with that resource (such as read only). Some access control mechanisms are enforced by the operating system, some by the individual application or server, and some by a service (such as a networking protocol) in use. Many security vulnerabilities are created by the careless or improper use of access controls, or by the failure to use them at all.

Much of the discussion of security vulnerabilities in the software security literature is in terms of privileges, and many exploits involve an attacker somehow gaining more privileges than they ought to have. Privileges, also called permissions, are access rights granted by the operating system, controlling who is allowed to read and write files, directories, and attributes of files and directories (such as the permissions for a file), who can execute a program, and who can perform other restricted operations such as accessing hardware devices and making changes to the network configuration. Permissions and access control in Mac OS X are discussed in Security Overview.

Of particular interest to attackers is the gaining of root privileges, which refers to having the unrestricted permission to perform any operation on the system. An application running with root privileges can access everything and change anything. Many security vulnerabilities involve programming errors that allow an attacker to obtain root privileges. Some such exploits involve taking advantage of buffer overflows or race conditions, which in some special circumstances allow an attacker to escalate their privileges. Others involve having access to system files that should be restricted or finding a weakness in a program—such as an application installer—that is already running with root privileges. For this reason, it’s important to always run programs with as few privileges as possible. Similarly, when it is necessary to run a program with elevated privileges, you should do so for as short a time as possible.
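For a program that must start with elevated privileges, giving them up as early as possible might look like the following C sketch for a POSIX system. The function name drop_privileges is hypothetical, and the sketch deliberately omits clearing supplementary groups (typically done with setgroups, which is not part of POSIX); treat it as an outline of the idea rather than a complete implementation.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdbool.h>
#include <sys/types.h>
#include <unistd.h>

/* Permanently switches the process to the given unprivileged user and
   group. Returns true only if the change took effect and, when the
   target user is not root, root can no longer be regained. */
bool drop_privileges(uid_t uid, gid_t gid)
{
    /* Change the group first: once the user ID is no longer root, the
       process may not have permission to change its group. */
    if (setgid(gid) != 0) {
        return false;
    }
    if (setuid(uid) != 0) {
        return false;
    }

    /* Verify rather than assume: an attempt to become root again must
       fail if privileges were really dropped. */
    if (uid != 0 && setuid(0) == 0) {
        return false;
    }
    return getuid() == uid && geteuid() == uid &&
           getgid() == gid && getegid() == gid;
}
```

A caller that receives false should exit rather than continue running with privileges it did not intend to keep.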

Much access control is enforced by applications, which can require a user to authenticate before granting authorization to perform an operation. Authentication can involve requesting a user name and password, the use of a smart card, a biometric scan, or some other method. If an application calls the Mac OS X Authorization Services application interface to authenticate a user, it can automatically take advantage of whichever authentication method is available on the user's system. Writing your own authentication code is a less secure alternative, as it might afford an attacker the opportunity to take advantage of bugs in your code to bypass your authentication mechanism, or it might offer a less secure authentication method than the standard one used on the system.

Digital certificates are commonly used—especially over the Internet and with email—to authenticate users and servers, to encrypt communications, and to digitally sign data to ensure that it has not been corrupted and was truly created by the entity that the user believes to have created it. Incorrect or careless use of digital certificates can lead to security vulnerabilities. For example, a server administration program shipped with a standard self-signed certificate, with the intention that the system administrator would replace it with a unique certificate. However, many system administrators failed to take this step, with the result that an attacker could decrypt communication with the server. [CVE-2004-0927]

It's worth noting that nearly all access controls can be overcome by an attacker who has physical access to a machine and plenty of time. For example, no matter what you set a file's permissions to, the operating system cannot prevent someone from bypassing the operating system and reading the data directly off the disk. Only restricting access to the machine itself and the use of robust encryption techniques can protect data from being read or corrupted under all circumstances.

The use of access controls in your program is discussed in more detail in “Elevating Privileges Safely.”

Secure Storage and Encryption

Encryption can be used to protect a user's secrets from others, either during data transmission or when the data is stored. (The problem of how to protect a vendor's data from being copied or used without permission is not addressed here.) Mac OS X provides a variety of encryption-based security options, such as:

FileVault

the ability to create encrypted disk images

keychain

certificate-based digital signatures

encryption of email

SSL/TLS secure network communication

Kerberos authentication

The list of security options in iPhone OS includes:

personal identification number (PIN) to prevent unauthorized use of the device

data encryption

the ability to add a digital signature to a block of data

keychain

SSL/TLS secure network communication

Each service has appropriate uses and each has limitations. For example, FileVault, which encrypts the contents of a user's home directory, is a very important security feature for shared computers or computers to which attackers might gain physical access, such as laptops. However, it is not very helpful for computers that are physically secure but that might be attacked over the network while in use, because in that case the home directory is in an unencrypted state and the threat is from insecure networks or shared files. Also, FileVault is only as secure as the password chosen by the user—if the user selects an easily guessed password, or writes it down in an easily found location, the encryption is useless.

It is a serious mistake to try to create your own encryption method or to implement a published encryption algorithm yourself unless you are already an expert in the field. It is extremely difficult to write secure, robust encryption code that generates unbreakable ciphertext, and it is almost always a security vulnerability to try. For Mac OS X, if you need cryptographic services beyond those provided by the Mac OS X user interface and high-level programming interfaces, you can use the open-source CSSM Cryptographic Services Manager. See the documentation provided with the Open Source security code, which you can download at http://developer.apple.com/darwin/projects/security/. For iPhone OS, the development APIs should provide all the services you need.

For more information about Mac OS X and iPhone OS security features, see “Mac OS X and iPhone OS Security Services.”

Social Engineering

Often the weakest link in the chain of security features protecting a user's data and software is the user himself. As buffer overflows, race conditions, and other security vulnerabilities are eliminated from software, attackers increasingly concentrate on fooling users into executing malicious code or handing over passwords, credit-card numbers, and other private information. Tricking a user into giving up secrets or into giving access to a computer to an attacker is known as social engineering.

In February of 2005, a large firm that maintains credit information, Social Security numbers, and other personal information on virtually all U.S. citizens revealed that they had divulged information on at least 150,000 people to scam artists who had posed as legitimate businessmen. According to Gartner (www.gartner.com), phishing attacks cost U.S. banks and credit card companies about $1.2 billion in 2003, and this number is increasing. They estimate that between May 2004 and May 2005, approximately 1.2 million computer users in the United States suffered losses caused by phishing.

Software developers can counter such attacks in two ways: through educating their users, and through clear and well-designed user interfaces that give users the information they need to make informed decisions. For example, the Unicode character set includes many characters that look similar or identical to common English letters—the Russian glyph that is pronounced like "r" looks exactly like an English "p" in many fonts, though it has a different Unicode value. When web browsers began to support international domain names (IDN), some phishers set up websites that look identical to legitimate ones, using Unicode look-alike characters (referred to as homographs) in their web addresses to fool users into thinking the URL was correct. To foil this attack, recent versions of Safari maintain a list of scripts that can be mixed in domain names. When a URL contains characters in two or more scripts that are not allowed in the same URL, Safari substitutes an ASCII format called "Punycode." For example, an impostor website with the URL http://www.apple.com/ that uses a Roman script for all the characters except for the letter "a", for which it uses a Cyrillic character, is displayed as http://www.xn--pple-43d.com.
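The idea of flagging names that mix look-alike scripts can be illustrated with a much-simplified C sketch. This is not Safari's algorithm: it recognizes only the basic Latin letters and the basic Greek and Cyrillic blocks, does not reject overlong UTF-8 encodings, and uses hypothetical names (has_mixed_scripts and its helpers). A production check would follow the Unicode Consortium's published security recommendations instead.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One bit per script so that several can be recorded in one value. */
enum script { SCRIPT_NONE = 0, SCRIPT_LATIN = 1, SCRIPT_GREEK = 2, SCRIPT_CYRILLIC = 4 };

/* Maps a code point to a script; digits, hyphens, dots, and anything
   outside the three recognized ranges count as SCRIPT_NONE. */
static enum script classify(uint32_t code_point)
{
    if ((code_point >= 'A' && code_point <= 'Z') ||
        (code_point >= 'a' && code_point <= 'z')) {
        return SCRIPT_LATIN;
    }
    if (code_point >= 0x0370 && code_point <= 0x03FF) {
        return SCRIPT_GREEK;
    }
    if (code_point >= 0x0400 && code_point <= 0x04FF) {
        return SCRIPT_CYRILLIC;
    }
    return SCRIPT_NONE;
}

/* Decodes one UTF-8 sequence starting at s. Returns the number of bytes
   consumed, or 0 if the sequence is malformed. A NUL byte where a
   continuation byte is expected counts as malformed, so the decoder
   never reads past the end of the string. */
static size_t decode_utf8(const unsigned char *s, uint32_t *code_point)
{
    size_t length;
    uint32_t value;

    if (s[0] < 0x80) { *code_point = s[0]; return 1; }
    if ((s[0] & 0xE0) == 0xC0)      { length = 2; value = s[0] & 0x1F; }
    else if ((s[0] & 0xF0) == 0xE0) { length = 3; value = s[0] & 0x0F; }
    else if ((s[0] & 0xF8) == 0xF0) { length = 4; value = s[0] & 0x07; }
    else return 0;

    for (size_t i = 1; i < length; i++) {
        if ((s[i] & 0xC0) != 0x80) {
            return 0;
        }
        value = (value << 6) | (s[i] & 0x3F);
    }
    *code_point = value;
    return length;
}

/* Returns true if the UTF-8 string contains letters from more than one
   recognized script, or if it is not well-formed UTF-8. */
bool has_mixed_scripts(const char *utf8)
{
    const unsigned char *p = (const unsigned char *)utf8;
    unsigned seen = 0;

    while (*p != '\0') {
        uint32_t code_point;
        size_t consumed = decode_utf8(p, &code_point);
        if (consumed == 0) {
            return true;   /* malformed input is treated as suspicious */
        }
        seen |= (unsigned)classify(code_point);
        p += consumed;
    }
    /* seen & (seen - 1) is nonzero exactly when more than one bit is set. */
    return (seen & (seen - 1)) != 0;
}
```

For the impostor domain described above, the label consisting of a Cyrillic "а" followed by Latin "pple" would be reported as mixed, while an all-Latin or all-Cyrillic label would not.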

For more advice on how to write a user interface that enhances security, see “Application Interfaces That Enhance Security.”

© 2008 Apple Inc. All Rights Reserved. (Last updated: 2008-05-23)